Clock synchronization is a critical challenge in distributed systems like CockroachDB. While atomic clocks and GPS-based hardware provide nanosecond-level precision, they introduce unnecessary complexity and cost barriers for typical deployments. CockroachDB takes a pragmatic approach, leveraging the Network Time Protocol (NTP) along with software-based compensation techniques to achieve reliable time coordination across database nodes.

The Importance of Good Timekeeping

For further background, please visit the Cockroach Labs blog, “Living without atomic clocks: Where CockroachDB and Spanner diverge.” It provides good insights into the role of clocks in CockroachDB operations, and why a tight synchronization of clocks of all VMs in the CockroachDB cluster is extremely important.

This article provides actionable clock management guidance to operators of CockroachDB in single-region, homogeneous multi-region and multi-cloud environments.

Impact of Clock Synchronization Precision

Clock skew between CockroachDB cluster VMs can impact SQL workload response times. Generally, larger skews degrade query performance.

CockroachDB relies on the clocks of all of a CockroachDB cluster’s VMs to stay within a configurable maximum offset from each other. This is necessary to provide ACID guarantees, specifically non-stale reads, i.e. reads that do not return the latest committed value.

In CockroachDB, every transaction starts and commits at a timestamp assigned by some CockroachDB node. When choosing this timestamp the node does not rely on any particular synchronization with any other CockroachDB node. This becomes the timestamp of each tuple written by the transaction.

CockroachDB uses multi-version concurrency control (MVCC) – it stores multiple values for each tuple ordered by timestamp. A reader generally returns the latest tuple with a timestamp earlier than the reader's timestamp and ignores tuples with higher timestamps. This is when clock skew needs to be considered.

If a reader's timestamp is assigned by a CockroachDB node with a clock that is behind, it might encounter tuples with higher timestamps that are, nevertheless, in the reader's “past", i.e. they were committed before the reader's transaction but their timestamps were assigned by a clock that is ahead. This problem is solved with the max-offset. Each reading transaction has an uncertainty interval, which is the time between the reader’s timestamp and the reader’s timestamp plus the max-offset.

Tuples with timestamps above the reader’s but within the max-offset are considered to be ambiguous as to whether they're in the past or the future of the reader. Encountering such an uncertain value can lead to the reader transaction having to restart at a higher timestamp, so that this time all the previously uncertain values are definitely in the past. There are various optimizations for limiting this uncertainty, yet generally clock skew can lead to poor performance because it requires transactions to restart.

Maximum Offset Value and its Impact on Performance

The default maximum clock offset value is 500 ms. CockroachDB enforces the maximum offset with a specially designed clock skew detection mechanism built into the intra node communication protocol. CockroachDB nodes periodically exchange clock signals and computing offsets. If a CockroachDB node detects a drift of over 80% of the maximum offset (e.g. 400 ms, assuming the default maximum offset value) vs. half of other nodes, it spontaneously shuts down the node to guarantee database read consistency.

In principle, an increase in the maximum offset may have an adverse effect on user workload performance as it increases the probability of uncertainty retries. In most cases, retries are handled automatically on the server side, yet in some cases the application may receive retry errors and the transaction must be retried on the client side. A decrease generally has a positive impact.

👉 If the current Cockroach Labs clock management guidance is followed, it is generally safe to reduce the maximum offset to 250 ms from its default value. This default can be explicitly overwritten with the --max-offset flag in the node start command.

It is impossible to quantify or predict the exact performance impact as it depends on the specific SQL workload and user-driven concurrency. The impact may range from non-measurable to dramatic.

The worst-case scenario is when clocks are in fact well synchronized and the workload is concurrent with long-running transactions. This occurs when transactions are multi-statement, have long running selects with large scans and produce large result sets.

When a read from one of the long-running transactions encounters a write with a higher timestamp but within the max offset, i.e. the uncertainty window, it will generally be forced to restart.

With a larger uncertainty window the probability of contention is higher. If retries are observed, for example, with a 250 ms maximum offset, a user should expect more retries with a setting of 500 ms.

As noted earlier, the size of the result set impacts the probability of retries. If an application client implements single statement transactions with a small result set, the server will auto-retry and the client will experience a relatively limited increase in the response time. Yet transactions with large result sets may “spill” the result set records to the client several times during the transaction, which would inevitably increase the probability of a retry and also turn it into a more costly client side retry, since server side auto-retry is only possible before the client gets any part of the result.

Time Smoothing for Leap Second Handling

For this next section, I’m assuming that you’re familiar with leap second clock adjustments. In this article, the term clock "jump" is synonymous to the NTP technical term "step".

Timekeeping practiced by different government and business entities can take one of the two forms when it comes to a clock "jump" during a leap second adjustment:

1. Monotonic time – Instead of stepping back during a leap second, time will be slowed leading up to the leap second in such a way that time will neither jump nor move backwards. Disadvantage: The time leading up to/following the leap second will not accurately reflect the real time.

2. Accurate time – This can mean a large one-second clock jump, for example for leap second adjustment, and possibly going back in time, if the local clock is ahead of reference time.

In both cases the data consistency/correctness will be preserved by CockroachDB's internal synthetic HLC clock. However the operators need to be concerned about two often undesirable realities associated with accurate timekeeping (option Two):

- If a clock jump results in a large clock offset between nodes, CockroachDB nodes may spontaneously exit to protect ACID guarantees, causing unnecessary commotion in the cluster.

- The customer's applications may exhibit unexpected behavior if they read the local Linux clock or use SQL time functions like `now()`.

👉 Cockroach Labs has a strong preference for option One - monotonic time. The priority is for time to be smooth and coordinated across nodes rather than allowing a synchronized jump with UTC.

Time smoothing of the leap second can be implemented using NTP's slew or smear. Either is acceptable to CockroachDB while a large step is undesirable. Continue to NTP Sources for platform-specific configuration guidance.

Time Continuity during Live CockroachDB VM Migrations (Memory Preserving Maintenance)

Live VM migration is an infrastructure level method of enabling the platform's operational continuity. It moves a VM running on one physical hardware server to another server without a VM reboot.

Live VM migrations are technically supported by all hypervisors that can be used to run CockroachDB clusters (albeit not all providers, notably AWS, enable them for their users) :

- [KVM] (AWS and GCP public clouds)

- [Hyper-V] (Azure public cloud)

- [ESXi] (VMware vSphere private cloud)

Uninterrupted Clock Prerequisite Requirement

The key technical requirement for allowing CockroachDB VMs to migrate live without a service disruption is guest VM clock continuity:

✔️ Problem: Guest clock continuity can't be guaranteed during a live VM migration.

Migration starts with an iterative pre-copy of the VM's memory. Then the VM is suspended, the last dirty pages are copied, and the VM is resumed at its destination. The downtime can range from a few milliseconds to seconds.

While a VM is suspended, its clock does not run. The moment a CockroachDB VM is resumed at its destination, its clock is behind by the amount of suspension time, i.e. it's arbitrarily stale. Therefore a SELECT statement starting immediately after a migration can be assigned a stale timestamp. In other words, the database cannot guarantee consistency (“C” in ACID) during a live migration.

✅ Solution: If live CockroachDB VM migration is required, all CockroachDB nodes must be configured to use the clock of the hardware host via a precision clock (PTP) device. The hardware clock is continuously available through the entire live migration cycle. CockroachDB nodes can be configured to use the hardware clock with the --clock-device flag in the node start command.

Access to the hardware clock via PTP device is currently supported by VMware vSphere, AWS EC2, and Azure. However, neither AWS EC2 nor Azure hardware clock meet time smoothing requirement and consequently may not be used with CockroachDB.

Live VM migration can support two operational tasks - (1) runtime VM load optimization (e.g. VMware DRS) and (2) VMs' memory-preserving maintenance of the underlying hardware servers.

Cockroach Labs advises against enabling runtime VM load optimization whenever the CockroachDB operator has control over this configuration option. Best practices guidance is available for memory-preserving maintenance of CockroachDB VMs in cloud platforms that meet pre-requisite requirements.

Clock Configuration Guidance

NTP Client Side Configuration

Linux configuration guidance in this section applies to CockroachDB guest VMs and hardware servers running CockroachDB software.

✅ TLDR; Cockroach Labs recommends chrony as the NTP client on CockroachDB VMs/servers.

There are three commonly used NTP clients available on Linux - timesyncd, ntpd, and chronyd.

Chronyhas many advantages over other NTP clients and is the only NTP client recommended by Cockroach Labs.Chronyis the default NTP user space daemon in Red Hat 7 and onwards.Chronyis the recommended optional client by Ubuntu. CockroachDB platform health checks conducted by Cockroach Labs will flag non-crony NTP clients.Ntpdis now deprecated and should not be used in new CockroachDB installations. Starting with Red Hat 8 ntpd is no longer available. By default,ntpdis no longer installed in Ubuntu.Ntpdmay, however, persist through Linux upgrades. Cockroach Labs encourages customers to plan to replace legacyntpdclients withchronyto maintain a good platform configuration hygiene.Timesyncdis simplistic and cannot reliably ensure the required precision in multi-regional topologies.Timesyncdis the default NTP client in Ubuntu. Ubuntu's posture is "timesyncdwill generally keep your time in sync, andchronywill help with more complex cases."

✅ Cockroach Labs defers the NTP client configuration decision to the underlying platform vendors when CockroachDB is operated over a managed Kubernetes service, such as AWS EKS, GCP GKE, Azure AKS.

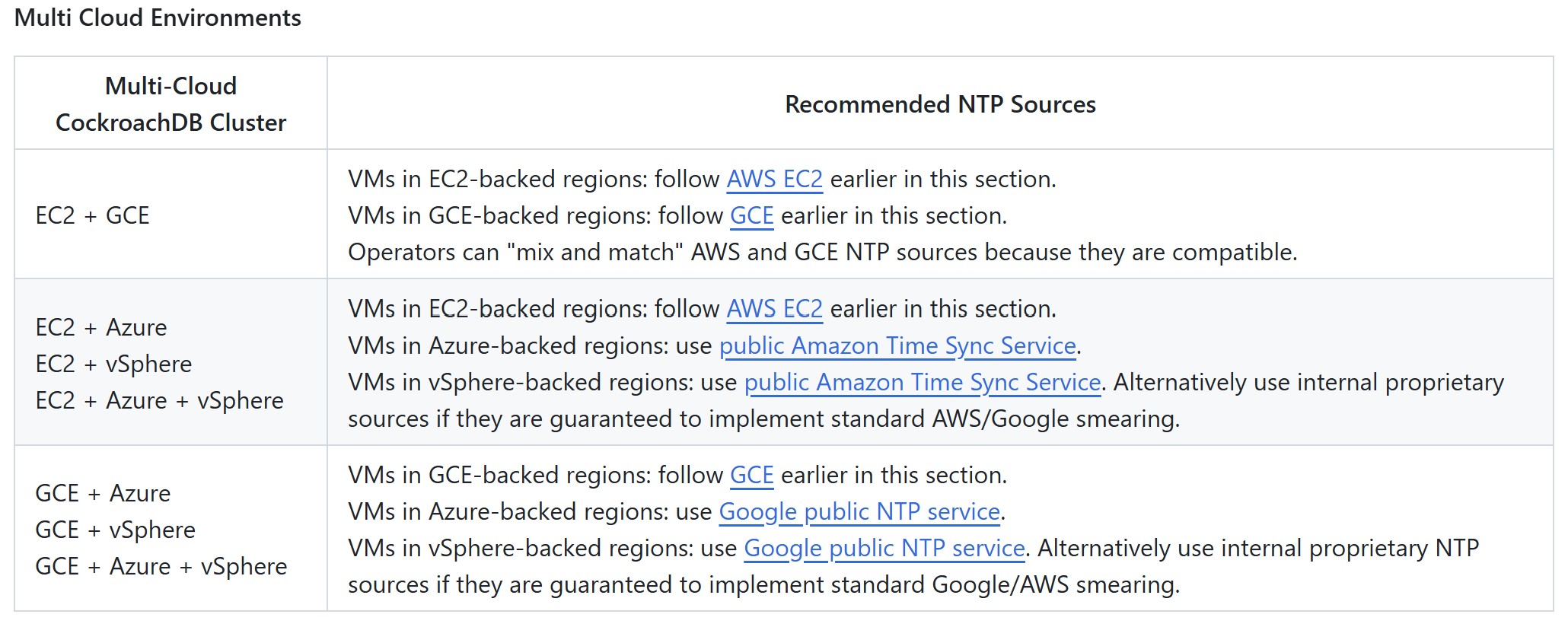

NTP Sources

Linux configuration guidance in this section applies to CockroachDB guest VMs and hardware servers running CockroachDB software.

A Network Time Protocol (NTP) server is a computer system that acts as a reliable source of time to synchronize NTP clients. In this article, the term clock "NTP server" is synonymous to "NTP source."

Configure the NTP clients on CockroachDB VMs to synchronize against NTP sources that meet the following best practices:

Choose a geographically local source with the minimum synchronization distance (network roundtrip delay). This will improve resiliency by reducing the probability of network disruption to a source. For muti-region and multi-cloud topologies it means VMs in each region should synchronize against regional NTP sources, as long as regional sources comply with Cockroach Labs best practices guidance.

Only use NTP sources that implement smearing or slewing of leap second.

If memory preserving maintenance is required, configure NTP sources with uninterrupted time.

Configure NTP sources with redundancy. At least three NTP servers is a common IT practice for configuring each NTP client.

NTP configurations of all CockroachDB VMs in the same region must be identical.

AWS EC2

✅ Configure all in-region EC2 CockroachDB VMs to access the local Amazon Time Sync Service via 169.254.169.123 IP address endpoint. This is usually the default EC2 Linux VM configuration. For a backup, or to synchronize CockroachDB VMs outside of AWS EC2, use the public Amazon Time Sync Service via time.aws.com. The local and public AWS sources automatically smear UTC leap seconds. AWS leap smear is algorithmically identical to GCP leap smear.

👉 Avoid using the EC2 PTP hardware clock because it does not smear time, i.e. does not meet the time smoothing requirement.

GCE

✅ Configure all in-region GCE CockroachDB VMs to access the internal GCE NTP Service via metadata.google.internal endpoint. This is usually the default GCE Linux VM configuration. For a backup, or to synchronize CockroachDB VMs outside of GCE, use Google public NTP service via time.google.com. The local and public GCE sources automatically smear UTC leap seconds. GCP leap smear is algorithmically identical to AWS leap smear.

Azure

✅ Azure infrastructure is backed by Windows Server that provide guest VMs with accurate time. I.e. the native Azure-provided Time sync service for Linux VMs does not smear or slew leap second. Configure all in-region Azure CockroachDB VMs to synchronize either to Google public NTP service via time.google.com or to public Amazon Time Sync Service via time.aws.com. Either service is acceptable as primary. Configure the other one as a backup. All CockroachDB VMs in the same region must have an identical NTP configuration.

VMware VSphere

✅ The CockroachDB on VMware vSphere white paper provides clock configuration best practices for guest VMs and ESXi servers.

(click to enlarge)

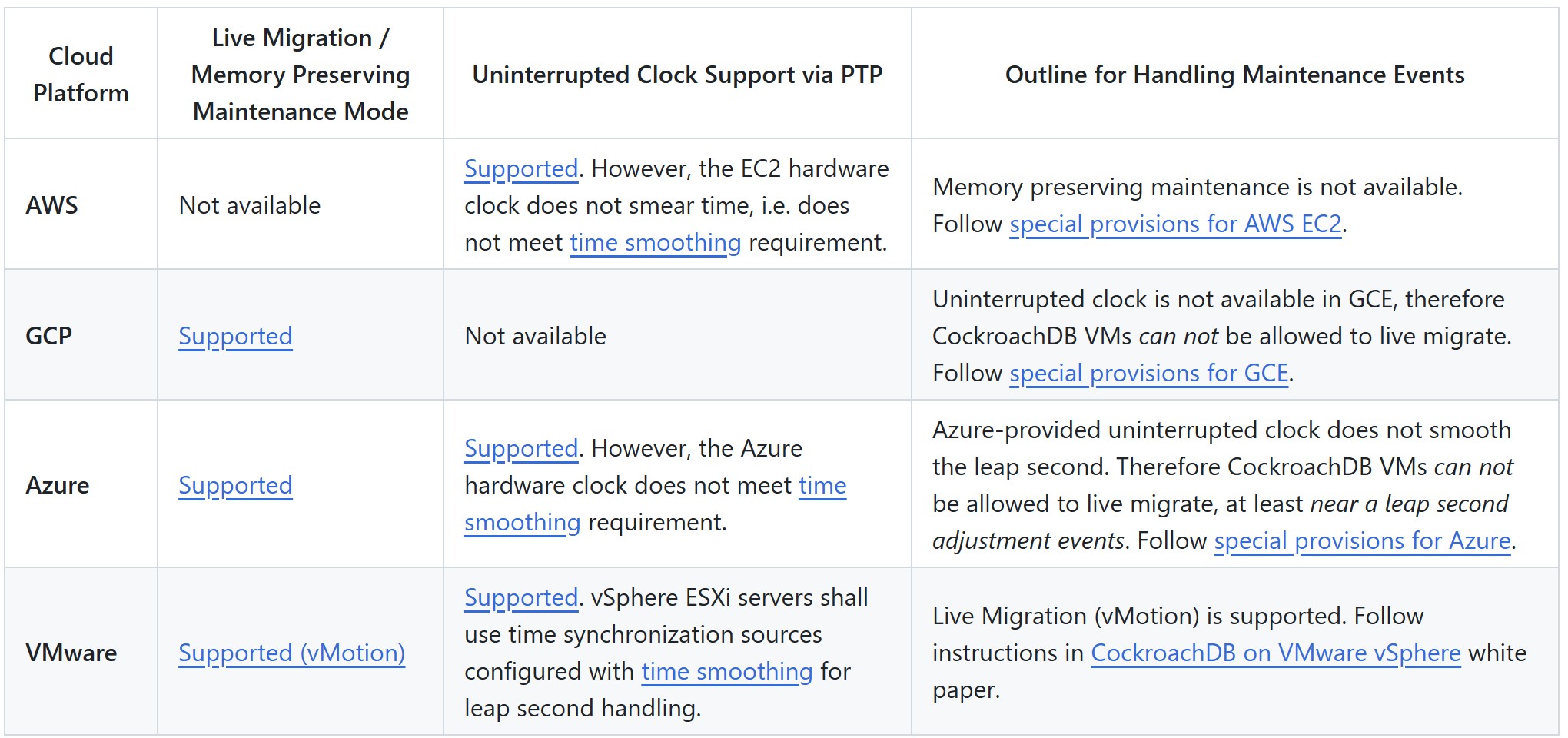

Configuring CockroachDB for Planned Cloud Maintenance

A maintenance event refers to a planned operational activity that requires guest VMs to be moved out of the host server.

All public and private cloud services have a required planned maintenance. CockroachDB operators should develop an operational procedure to handle planned maintenance events. In a multi-cloud deployment, a custom procedure is required for each cloud platform.

👉 Relying on default/out-of-the-box platform behavior during a maintenance event is generally unacceptable as it's likely to be disruptive to CockroachDB cluster VMs. A custom procedure or configuration adjustments are usually required for public and private cloud platforms.

Q: What are the potential ramifications of allowing live migrations of CockroachDB VMs without configuring an uninterrupted clock?

A: Without a clock continuity guarantee, the database will be denied the means to guarantee transactional consistency and the applications may be served a stale read without any indication of that happening. Since a probability of a compromised consistency exists, however small, it is not acceptable for applications using CockroachDB as a system of records.

Here is a summary of recommendations for handling maintenance events, by cloud platform:

(click to enlarge)

AWS EC2 Special Provisions

✅ Perform a proactive rolling (one-at-a-time) CockroachDB nodes restart, stopping-and-starting or rebooting each CockroachDB VM scheduled for maintenance, depending on the root volume being EBS or instance store. This procedure must be executed during the scheduled maintenance event window, upon receiving a scheduled maintenance event notification. Operators can create custom maintenance event windows to avoid maintenance during peak database use periods. A stop-and-start or reboot of a VM scheduled for maintenance will relocate it to a new underlying hardware host.

GCE Special Provisions

👉 By default, the GCE VM types that run CockroachDB nodes are set to live migrate during maintenance. However, uninterrupted clock is not available to CockroachDB VMs in GCE. Therefore operators must disable live migrations for all CockroachDB VMs by explicitly setting the host maintenance policy from MIGRATE to TERMINATE. CRL recommends disabling live migrations during each CockroachDB VM instance creation. If that's not possible, disable live migrations by updating the host maintenance policy of all existing CockroachDB VM instances.

✅ CockroachDB operators are advised to monitor the upcoming maintenance notifications and manually trigger CockroachDB VM maintenance after orderly stopping CockroachDB node and ensuring only one node is down for maintenance at a time.

Azure Special Provisions

👉 The Azure VM types that are typically selected to run CockroachDB nodes are eligible for live migration during maintenance. Azure chooses the update mechanism that's least impactful to customer VMs. Since uninterrupted clock is not available to CockroachDB VMs in Azure, operators must disable live migrations for all CockroachDB VMs.

✅ CockroachDB operators are advised to monitor the upcoming maintenance notifications and manually start maintenance of affected CockroachDB VM during a self-service window. Operators can guarantee non-disruptive applications connections failover and failback by orderly stopping CockroachDB node and ensuring only one node is down for maintenance at a time.

VMware VSphere Special Provisions

✅ Follow detailed instructions in the CockroachDB on VMware vSphere white paper.

It’s time for resilience and high performance

Precise clock management is a foundational requirement for maintaining the correctness, performance, and reliability of CockroachDB in distributed environments. By following the best practices outlined in this article — from selecting the right NTP client and configuring regional time sources, to ensuring clock continuity during live migrations — organizations can significantly reduce the risk of transaction retries, node instability, and stale reads.

The result: A more resilient and performant CockroachDB deployment, empowering teams to deliver consistent and reliable applications, even in complex, multi-cloud infrastructures.

Ready to experience CockroachDB's precision timing for yourself? You can get hands-on with CockroachDB’s free cloud offering today.

Alex Entin is Senior Staff Sales Engineer for Cockroach Labs.