Today’s mission-critical applications, such as online banking systems that handle millions of transactions daily or healthcare systems managing real-time patient data, must operate without interruption. In these verticals and many more, downtime or data loss can result in significant financial and reputational damage: lost revenue from disrupted transactions, penalties for regulatory non-compliance, and erosion of customer trust due to perceived unreliability. That makes resilience and availability paramount.

CockroachDB is a horizontally and vertically scalable SQL database that is highly resilient, easy to manage, and transactionally consistent, with built-in data governance. It is designed to address these challenges by ensuring continuous access to data, even in the face of hardware failures, network issues, or regional outages. Built with a PostgreSQL wire-compatible interface, CockroachDB is easy to adopt and leverages your organization’s existing SQL skills.

Additionally, CockroachDB is designed to protect against cloud vendor lock-in by employing a distributed architecture that allows seamless data replication and deployment across multiple cloud providers and/or your own infrastructure. This architecture also enables flexible topology modifications through automated rebalancing and replication processes, minimizing downtime and avoiding risky migrations.

CockroachDB has been around for more than 10 years, leading the way in the distributed SQL space. It’s a mature, time-tested solution for managing distributed data that is designed to meet an application’s geographic governance and performance requirements. Because CockroachDB is available either as a cloud solution or self-hosted, customers have the flexibility to run it in the way that makes the most sense for their specific use case or application.

This article examines CockroachDB from the perspective of enterprise architectural attributes to help an Architect, Application Developer, and Database Administrator better understand how CockroachDB can affect their organization.

There are a variety of reasons to use CockroachDB. Here are some reasons you may want to modernize your database, or consolidate your databases on CockroachDB to lower your TCO:

Your application is mission critical and neither planned nor unplanned outages are acceptable

Your application is not mission critical and you would like to reduce idle hardware and maintenance operations

You want to reduce the number of specialized databases by moving to a versatile general-purpose database

Your organization cannot accept the risk of data loss

Deployment flexibility is required to expand into different regions or support different compliance rules, without the need for expensive and time-consuming re-implementation efforts

Your application is currently global or will need to be

You don't want your application to be limited to just one cloud

You need greater scale and flexibility and don't want to learn new database skills beyond SQL

Typical Workloads

Modernizing your database is a progressive project, often happening over a period of time rather than all at once. We’ve found that companies looking to modernize their databases do so workload by workload. The workloads they start with are often mission-critical, transactional workloads (those with the highest availability needs that require transactional consistency), including:

User accounts and metadata

eCommerce

Banking and wallets

IoT and device management

Gaming

Routing and logistics

Generative AI

If any of the above use cases or workloads apply to your organization, read on! Let's dive into some of CockroachDB's critical architectural attributes: Operational Excellence, Security, Resilience, Performance, and Cost-Efficiency. In subsequent series installments, we’ll dive deep into each of these areas with illustrative examples.

1. Operational Excellence

Operational excellence is a critical consideration when evaluating a database: It ensures that the new system performs efficiently, reliably, and consistently while minimizing risks, disruptions, and operational overhead.

Database migrations are complex processes, and without a focus on operational excellence, organizations can face performance bottlenecks, downtime, increased costs, and team inefficiencies. You are more likely to implement a database successfully, and to keep operating it successfully, when it was designed with operational excellence in mind.

Achieving Operational Excellence for a mission-critical database requires support for the following six components:

Automated Operations/Simplified Operations: Stitching together a patchwork of tools and environments to improve scale and availability, or learning a new set of database skills, introduces architectural complexity and brittleness. If you have to make a change in your database and then make a matching change in your database replication tool just to modify your deployment topology, there is a risk that something will get missed, resulting in operational errors or, at the very least, deployment delays. In contrast, CockroachDB simplifies database operations by automating common manual tasks such as sharding, data rebalancing, data replication, and fault recovery, enabling database operations teams to focus on higher-value tasks.

For example, to implement a new data sovereignty rule, you can restrict where the data for a table or a database is placed by executing a SQL ALTER command that specifies the location. Once the ALTER command is executed, CockroachDB automatically begins moving and replicating the data through the cluster based on the new rule, without requiring you to define a migration process or take an application outage.
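As a rough illustration of the kind of statement involved, the Python sketch below builds an `ALTER ... CONFIGURE ZONE` statement that asks the cluster to keep replicas on nodes whose locality matches a given region. The helper `placement_statement`, the table name `customers`, and the region `eu-west-1` are hypothetical, and the sketch assumes nodes were started with a `region=...` locality. Treat the exact statement as an assumption to verify against the CockroachDB documentation for your version.

```python
def placement_statement(object_type: str, name: str, region: str) -> str:
    """Build an ALTER statement that constrains replicas of a table or database to one region.

    Assumes cluster nodes were started with a locality such as region=<region>.
    Names are interpolated as-is, so only pass trusted identifiers.
    """
    if object_type not in ("TABLE", "DATABASE"):
        raise ValueError("object_type must be 'TABLE' or 'DATABASE'")
    # A required (+) constraint asks for replicas only on nodes with a matching locality.
    return (
        f"ALTER {object_type} {name} "
        f"CONFIGURE ZONE USING constraints = '[+region={region}]';"
    )


if __name__ == "__main__":
    print(placement_statement("TABLE", "customers", "eu-west-1"))
```

The resulting string could be run through any PostgreSQL-compatible client; the point is that the placement rule is a single declarative statement rather than a migration project.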

Enterprise Scalability: Most enterprise-class databases support only vertical scalability, which limits the database’s maximum capacity. The common workarounds for the vertical scaling limit are to migrate to a larger database server, shard the database across multiple servers, or establish read replicas. None of these solutions provides the flexible scalability required for most enterprises.

Multi-Environment Deployment: Today’s IT landscape is no longer defined by a single data center or single cloud provider; enterprise applications need to run in hybrid deployments spanning multiple cloud vendors and even on-prem environments. The specific deployment environment is determined by the application’s specific security requirements. In addition, deployment decisions are influenced by customer stakeholder requirements pertaining to the cloud vendors they are willing to deploy to, as well as locality requirements.

When selecting an enterprise database, the ability to deploy a single database within multiple environment types (Hybrid or Cloud) is becoming increasingly critical. It is also crucial to be able to use the same deployment configuration, regardless of the environment.

To deploy nodes for a CockroachDB cluster, deploy the CockroachDB binary to a server, virtual machine, cloud instance, and/or Kubernetes cluster, and start the new node listening so that it can join the cluster. The cluster’s configuration automatically becomes available to the new node without manual configuration, and data rebalancing begins automatically. Consequently, you can start out with a single-region CockroachDB topology and later expand into a different data center, cloud region, or even a different cloud provider to support a multi-region topology without a complex migration.

Fault Tolerant Operations: Mission-critical outages (network, disk issues, etc.) always seem to take place at 2 AM. Resolving the outage requires calling the database and application administrators, as well as other relevant stakeholders, to jump on a “red-team” call while they are half awake. The net effect is delays in restoring operations, resulting in higher RTOs and a fatigued team struggling to restore the system. A better strategy is to ensure the database supports Fault Tolerant Operations, enabling the system to continue operating without interruption even if resources are completely lost (NOTE: Most organizations will balance cost vs. availability, which will affect the degree of Fault Tolerant Operations).

CockroachDB supports Fault Tolerant Operations by spreading the responsibilities that are commonly placed in one master node across all the nodes in the CockroachDB cluster, which reduces the blast radius of a failure and the amount of data to replicate during recovery. Further, because CockroachDB maintains three or more data replicas, it continues running without interruption so long as quorum is maintained during the outage event. The team that would normally get called in at 2 AM to restore the system gets more sleep and can instead resolve the root cause during normal work hours, when everyone is available and rested.

Version Upgrades, Server Maintenance, and Schema Changes: In most organizations, planned outages to support activities such as version upgrades, server maintenance, and schema changes account for the largest number of outages. In addition to the inconvenience of the outages themselves, managing planned outages often requires additional resources to create complex project plans covering all the steps to execute during the outage, as well as effort to coordinate with all the internal and external stakeholders. While these outages used to be acceptable though unpleasant, they are becoming less and less acceptable for both internal and external stakeholders as more and more applications can no longer accept any outage.

Just as CockroachDB supports Fault Tolerant Operations, it supports version upgrades and server maintenance through rolling upgrades. If three replicas are configured for your database/cluster, you can bring down one node at a time for a rolling upgrade, because two copies remain and quorum is maintained. If five replicas are configured, you can bring down two nodes at a time (three copies remain, so quorum is preserved). If you wish to apply a schema change that adds (rather than deletes) schema elements, Multi-Version Concurrency Control (MVCC), which preserves each data version in the table, lets you apply the change without forcing your applications to experience an outage.
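The replica counts above follow from simple majority arithmetic. The short Python sketch below works it through; the function names are ours, and the model is deliberately simplified in that it only counts copies of the data and ignores how replicas are spread across individual nodes.

```python
def quorum(replicas: int) -> int:
    """Smallest majority of `replicas` copies."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return replicas // 2 + 1


def max_replicas_down(replicas: int) -> int:
    """How many copies can be offline while a majority is still available."""
    return replicas - quorum(replicas)


if __name__ == "__main__":
    for n in (3, 5):
        print(f"{n} replicas: quorum={quorum(n)}, can lose {max_replicas_down(n)}")
```

With three replicas the majority is two, so one copy can be offline; with five the majority is three, so two can be offline. That is why a rolling upgrade can proceed one node at a time in the first case and two at a time in the second.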

Flexible Observability: Without proper visibility into database operations, teams face difficulty detecting and diagnosing issues such as slow queries, resource bottlenecks, or unexpected failures, which can result in downtime, degraded user experience, and lost revenue.

Effective monitoring and observability for a distributed SQL database require a comprehensive set of features and metrics to ensure performance, reliability, and scalability across a complex, multi-node architecture. Key metrics include latency (query execution times, read/write delays), throughput (transactions per second, queries per second), and error rates (failed queries, timeouts) to track system responsiveness and reliability.

Resource utilization metrics, such as CPU usage, memory consumption, disk I/O, and network throughput, are critical for identifying bottlenecks and ensuring efficient allocation of resources. Additionally, observability tools must capture query performance metrics, such as slow queries, contention, and retries, along with insights into data replication lag and node health to identify inconsistencies or failures. Most importantly, all of these metrics need to be presented on a single pane of glass and be compatible with the enterprise’s observability and monitoring tools.
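To make the latency, throughput, and error-rate metrics concrete, here is a minimal Python sketch that derives them from a window of query samples. The `QuerySample` type, the `summarize` function, and the nearest-rank percentile method are illustrative assumptions of ours; they are not how any particular monitoring tool computes these figures.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class QuerySample:
    latency_ms: float  # end-to-end execution time of one query
    ok: bool           # False for failed or timed-out queries


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples: List[QuerySample], window_seconds: float) -> Dict[str, float]:
    """Derive latency, throughput, and error-rate figures for one time window."""
    latencies = [s.latency_ms for s in samples]
    failed = sum(1 for s in samples if not s.ok)
    return {
        "p50_ms": percentile(latencies, 50),
        "p99_ms": percentile(latencies, 99),
        "qps": len(samples) / window_seconds,
        "error_rate": failed / len(samples),
    }


if __name__ == "__main__":
    window = [QuerySample(5, True), QuerySample(7, True),
              QuerySample(9, True), QuerySample(250, False)]
    print(summarize(window, window_seconds=2))
```

Tracking a high percentile alongside the median matters in a distributed system because a single slow node or contended range tends to show up in the tail long before it moves the average.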

CockroachDB delivers enterprise-grade observability and monitoring with seamless integration into popular tools like Prometheus, Grafana, Datadog, New Relic, and DBmarlin. The DB Console provides real-time insights into query performance, contention, replication status, and resource usage across its distributed architecture. Support for distributed tracing via OpenTelemetry allows teams to track queries across nodes, while robust logging and alerting enable quick detection and resolution of anomalies. These capabilities ensure high availability, optimized performance, and reliability for mission-critical applications. Compatibility with common observability and monitoring tools, alongside support for open protocols such as OpenTelemetry, enables customers to get their clusters under monitoring with existing skills.

2. Security

For enterprise applications, data security isn’t optional — it’s mandatory. CockroachDB embeds security principles deeply within its architecture:

Encryption by Default: Encryption by default ensures that all data — at rest and in transit — is protected automatically, reducing the risk of breaches caused by misconfiguration or oversight. Even if attackers access the database, encrypted data remains unreadable. This approach simplifies compliance with regulations like GDPR and HIPAA, while securing sensitive information such as PII and financial data. By embedding encryption from the start, enterprises gain a stronger security posture with minimal operational overhead.

Enterprises need comprehensive security that includes encryption at rest and in transit, role-based access control (RBAC), and automated key management to enforce strong data protection without manual intervention. Integration with enterprise tools like Key Management Systems (KMS), audit logging, and minimal performance impact are essential. The ideal database combines these features out of the box, ensuring robust protection while simplifying operations.

CockroachDB secures data with encryption by default, using AES-256 for encryption at rest and TLS for encryption in transit, ensuring all data remains protected. It integrates seamlessly with enterprise-grade Key Management Systems (KMS) for automated key handling, eliminating manual processes and reducing risks. By providing built-in encryption and security, CockroachDB ensures enterprises can protect mission-critical data efficiently and comply with security standards.

Fine-Grained Access Controls: Fine-grained access controls, such as RBAC, are essential for enterprise databases to enforce the principle of least privilege, ensuring users and applications have only the permissions they need. By limiting access to specific roles and actions, RBAC reduces the risk of unauthorized data exposure, accidental data loss, or malicious activities. This granular control simplifies user management, especially in large organizations with complex access requirements, while helping to meet requirements from regulations like GDPR, HIPAA, and SOC 2. Fine-grained controls also enhance operational efficiency by centralizing permission management, allowing administrators to maintain security without adding manual overhead.

Enterprises should prioritize databases with robust RBAC that enables fine-grained management of permissions, including read, write, and admin operations at the database, table, and row levels. Additionally, security solutions should integrate seamlessly with enterprise identity management systems, such as LDAP, Active Directory, or Single Sign-On (SSO), to streamline authentication and role assignment. It’s crucial to have audit logging for visibility into user activity and policy enforcement to detect unauthorized access attempts. The ideal solution should make it easy to manage complex user roles while ensuring consistent, scalable security across distributed environments.

CockroachDB provides fine-grained access controls through its robust RBAC system, enabling administrators to define roles and permissions at the database and table levels. By assigning roles to users, CockroachDB ensures that only authorized individuals can access or modify specific data, aligning with the principle of least privilege. It supports integration with enterprise identity management systems for centralized authentication and simplifies permission management across distributed clusters. Additionally, CockroachDB includes audit logging, giving enterprises visibility into user activity to monitor access and ensure compliance. With its granular, scalable access controls, CockroachDB helps enterprises secure data while maintaining operational simplicity.
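A least-privilege setup of this kind typically comes down to three statements: create a role, grant it specific table privileges, and assign the role to a user. The Python sketch below generates them. The helper name and the example role, user, and table are hypothetical, and the privilege list is limited to four common ones for illustration.

```python
from typing import List

ALLOWED_PRIVILEGES = {"SELECT", "INSERT", "UPDATE", "DELETE"}


def least_privilege_statements(role: str, user: str, table: str,
                               privileges: List[str]) -> List[str]:
    """Return SQL that creates a role, grants it table privileges, and assigns it to a user.

    Names are interpolated as-is, so only pass trusted identifiers.
    """
    unknown = [p for p in privileges if p.upper() not in ALLOWED_PRIVILEGES]
    if not privileges or unknown:
        raise ValueError(f"unsupported or empty privilege list: {privileges}")
    grants = ", ".join(p.upper() for p in privileges)
    return [
        f"CREATE ROLE {role};",
        f"GRANT {grants} ON TABLE {table} TO {role};",
        f"GRANT {role} TO {user};",
    ]


if __name__ == "__main__":
    for stmt in least_privilege_statements("reporting", "alice", "orders", ["select"]):
        print(stmt)
```

Granting privileges to the role rather than directly to the user means access can later be changed in one place for everyone who holds that role.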

Data Sovereignty Regulations: Data sovereignty is critical for enterprises operating across regions with strict regulations like GDPR, which require data to be stored and processed within specific geographic boundaries. Enterprises that store regulated data in the wrong country or state are non-compliant and expose themselves to legal risks and penalties, as well as reputational damage with their customers. By enabling fine-grained control over where data resides, enterprises can align with jurisdictional requirements without compromising performance or availability.

Enterprises need to prioritize databases that offer geo-partitioning and granular control over data placement across regions. This includes the ability to isolate data at the row and/or table level and enforce location-based policies seamlessly. A database should achieve this while maintaining low-latency access and high availability for global workloads. The geo-partitioning configuration also needs to be simple enough to be auditable and easy for a DBA to define.

CockroachDB delivers data sovereignty through its powerful ALTER statement-based geo-partitioning capabilities, allowing enterprises to pin data to specific regions at the row level. A DBA can therefore describe fine-grained geo-partitioning rules with ALTER commands, without learning another data language. This helps enterprises comply with regional regulations while optimizing performance for local access.
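As a sketch of what row-level pinning can look like, the Python snippet below produces one statement that switches a table to a row-level regional locality and one parameterized statement that assigns a row to a region. It assumes the database has already been configured with the relevant regions and that the table uses the default `crdb_region` column; the helper name, table, and key values are hypothetical, and the `%s` placeholders follow the convention of common PostgreSQL drivers for Python.

```python
from typing import List, Tuple

Statement = Tuple[str, tuple]  # (SQL text, bound parameters)


def regional_by_row_statements(table: str, key_column: str,
                               key_value: str, region: str) -> List[Statement]:
    """Return statements that enable row-level locality and pin one row to a region.

    Identifiers are interpolated as-is (trusted input only); values are passed
    as bound parameters rather than being formatted into the SQL text.
    """
    return [
        # Let each row carry its own home region.
        (f"ALTER TABLE {table} SET LOCALITY REGIONAL BY ROW;", ()),
        # Assign a specific row to a region via the region column.
        (f"UPDATE {table} SET crdb_region = %s WHERE {key_column} = %s;",
         (region, key_value)),
    ]


if __name__ == "__main__":
    for sql, params in regional_by_row_statements("users", "id", "42", "eu-west-1"):
        print(sql, params)
```

Each tuple can be passed to a driver call of the form `cursor.execute(sql, params)`, after which the database moves the affected row's replicas to match its new home region.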

Comprehensive Audit Trails: Comprehensive audit trails are critical for enterprises to comply with regulations such as HIPAA, GDPR, and SOC 2, which mandate transparency and accountability in handling sensitive data. Audit trails track who accessed, modified, or queried data, providing an immutable record for investigations, audits, and compliance reporting. Without proper audit logging, enterprises risk non-compliance, fines, and damage to their reputation. By ensuring a clear record of all database activities, audit trails help organizations maintain regulatory compliance, detect anomalies, and build trust with customers and stakeholders.

Enterprises need audit trails that capture who performed an action, what the action was, and when it occurred, including reads, writes, and permission changes. Audit logs need to be tamper-proof, highly detailed, and either exportable or integrated with the enterprise's existing security information and event management (SIEM) tools. Enterprises should look for solutions that provide real-time monitoring and retention policies for long-term storage, ensuring compliance with regional regulations. Additionally, logs must be granular enough for forensic audits while maintaining performance across large-scale, distributed workloads.

CockroachDB delivers robust audit logging to help enterprises meet compliance requirements for regulations like HIPAA, GDPR, and SOC 2. It captures SQL activity, including data reads and writes, user logins, and role or permission changes, enabling full visibility into database operations. Logs are secure, immutable, and can be seamlessly integrated with external systems for further analysis or retention. By providing detailed and actionable audit trails, CockroachDB ensures enterprises have the transparency and traceability needed to comply with government and financial regulatory requirements while maintaining operational efficiency and avoiding fines as well as legal actions.

3. Resilience

Enterprises must prioritize resilience for mission-critical databases to ensure continuous availability and protect against downtime, data loss, and operational disruptions. Even brief outages, planned or unplanned, can lead to financial loss, regulatory penalties, and damaged customer trust, especially in industries like finance, healthcare, and retail.

A resilient database maintains high availability through automated recovery (such as with the Raft protocol used in CockroachDB), fault tolerance, and disaster recovery capabilities. This ensures consistent performance during hardware failures, software issues, or regional outages.

By delivering continuous uptime while protecting transactional data integrity, resilient databases enable businesses to meet SLAs, maintain compliance, and ensure uninterrupted operations. In addition to safeguarding revenue and customer experiences, these capabilities defend enterprises against data loss.

State of resilience

To learn more about resilience, we interviewed 1,000 senior technology leaders and gained deep insight into what executives think when they consider the strategies, priorities, and vulnerabilities facing their organizations.

Read that research to learn some interesting facts about resilience:

On average, organizations are experiencing a staggering 86 outages each year

79% of companies admit they're not ready to comply with new operational resilience regulations like DORA and NIS2

95% of executives surveyed are aware of existing operational vulnerabilities, but nearly half have yet to take action

With the pressure that executives are under to harden their infrastructures, what are the key elements that enable CockroachDB’s resilience?

Key architecture elements of a resilient database

Some of the key architectural features to consider for a modern resilient enterprise database include the following:

Distributed SQL Architecture: Planned and unplanned outages, once accepted as facts of life, are no longer acceptable. Application failures become news headlines, and customers and partners do not hesitate to seek alternatives when outages occur. To avoid planned and unplanned outages across multiple geographies, an enterprise needs a solution with high availability, fault tolerance, and automatic failover capabilities across distributed regions. The database should support multi-region deployments with features like built-in data replication to ensure resilience against availability zone and regional failures.

To reduce planned downtime, the system should allow for rolling upgrades and maintenance without impacting availability. Additionally, it’s important to select a database that integrates seamlessly with your organization’s existing technical infrastructure and supports standard tools, languages, and protocols (e.g., SQL). This ensures teams can leverage their current technical skills and tools without steep learning curves, which accelerates adoption and reduces operational complexity while maintaining consistent uptime and performance.

CockroachDB delivers enterprise-grade data resiliency through its distributed SQL architecture, which ensures high availability and fault tolerance across data centers, availability zones, and cloud regions. By replicating data automatically across multiple nodes, CockroachDB can tolerate node, hardware, or regional failures without data loss or downtime. Its automated recovery and self-healing capabilities enable continuous operations, while geo-replication and multi-active availability ensure low-latency access and resilience for global workloads.

This architecture eliminates single points of failure, providing enterprises with a robust, resilient database that protects mission-critical data and ensures uninterrupted performance.

Multi-region complex architectures defined with simple SQL Statements.

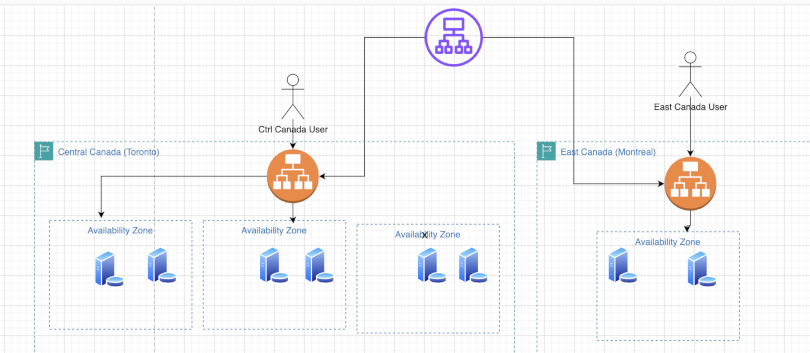
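To give a feel for how few statements a multi-region setup needs, the Python sketch below generates them for a database. The helper name, the database name `bank`, and the region names are hypothetical. The check that surviving a region failure needs at least three regions reflects our understanding of the requirement and should be confirmed against the documentation for your version.

```python
from typing import List


def multi_region_statements(database: str, primary: str, others: List[str],
                            survive_region_failure: bool = False) -> List[str]:
    """Return ALTER DATABASE statements that declare regions and a survival goal."""
    regions = [primary] + list(others)
    if len(set(regions)) != len(regions):
        raise ValueError("regions must be unique")
    if survive_region_failure and len(regions) < 3:
        raise ValueError("surviving a region failure needs at least three regions")
    stmts = [f'ALTER DATABASE {database} SET PRIMARY REGION "{primary}";']
    stmts += [f'ALTER DATABASE {database} ADD REGION "{r}";' for r in others]
    # The survival goal states which class of failure the database must ride out.
    goal = "REGION" if survive_region_failure else "ZONE"
    stmts.append(f"ALTER DATABASE {database} SURVIVE {goal} FAILURE;")
    return stmts


if __name__ == "__main__":
    for stmt in multi_region_statements(
            "bank", "us-east1", ["us-west1", "europe-west1"],
            survive_region_failure=True):
        print(stmt)
```

The survival goal is the interesting design choice: it expresses the resilience target declaratively and leaves replica counts and placement to the database.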

Multi-Availability Zone and Region Replication: Deploying a database across multiple availability zones (AZs) and multi-region infrastructure enhances mission-critical resilience by ensuring high availability and fault tolerance.

In a multi-AZ setup, automated failover protects against data center or infrastructure failures by redirecting traffic to healthy nodes, minimizing downtime. Multi-region deployments provide additional protection against large-scale outages, natural disasters, or network disruptions by replicating data across geographically distributed regions. This approach ensures redundancy, fast recovery, and continuous uptime, while geo-partitioning keeps data close to users for optimal performance. Together, these strategies safeguard mission-critical systems against failures and ensure uninterrupted access.

To improve mission-critical database resiliency, enterprises should prioritize multi-availability zone (AZ) and multi-region replication features. Key capabilities include:

synchronous replication across AZs (or even two cloud providers) for zero data loss,

asynchronous or semi-synchronous replication across regions for geographic redundancy, and

automated failover to ensure continuous availability during failures.

Features like geo-partitioning allow data to be stored close to users, reducing latency, while maintaining redundancy.

The database should also provide strong consistency guarantees to prevent stale reads and include self-healing mechanisms to repair and rebalance data automatically. These features collectively ensure high availability, fault tolerance, and data integrity for mission-critical workloads.

CockroachDB enhances mission-critical resiliency with multi-availability zone (AZ) and multi-region capabilities through its distributed SQL architecture. It automatically replicates data across multiple AZs within a region using synchronous replication, ensuring zero data loss and transactional consistency, while delivering seamless failover during infrastructure failures.

For multi-region deployments, CockroachDB supports geo-replication and geo-partitioning, enabling data to be distributed across regions for fault tolerance while keeping critical data close to users to minimize latency. Built-in automated failover and self-healing mechanisms ensure continuous availability and recovery without manual intervention, delivering strong consistency and high uptime for mission-critical workloads across AZs or even cloud providers.

Active Cluster Replication: Enterprise databases can only provide resiliency when durable replication exists for all of the possible failure scenarios. For example, maintaining a database replica within the same data center or availability zone as the primary database helps only if the failure does not also damage the replica or make it unavailable (for example, through a network failure).

Furthermore, many enterprises have used read replicas to spread the read load and increase read capacity, only to find that when the master database server fails, substantial time is required to promote a read replica. Additionally, in the read replica scenario, data written between the last completed replication operation and the failure event can be lost. To address the risk of master node outage or data loss, an enterprise considering a new database for a mission-critical application needs Active Cluster data replication. Active Cluster replication can be found in either Active-Active data replication or multi-active (three or more active replicas) data replication topologies.

The multi-active replication strategy requires a minimum of three copies and provides an RPO of 0 and an RTO of under 5 seconds on failure, ensuring that the mission-critical application continues running across different failure scenarios.

Further, consideration should be given to how to spread the replicas across data centers, availability zones, and/or regions. The wider the geographic spread between replicas, the greater the resiliency you can provide to your mission-critical application, though possibly at the cost of added network latency. Multi-active replication is generally the best way to provide resilience measured in terms of RPO and RTO.

The Active-Active topology makes sense for organizations that can tolerate falling back to a warm standby on failure. The warm standby has just enough capacity to support a base workload until additional resources become available. The advantage of the warm standby is cost: you do not need to pay for idle resources to support quorum replicas or full production workloads.

CockroachDB provides both multi-active data replication and Active-Active configurations. This allows the enterprise to choose the business-appropriate balance between the highest resiliency level and network latency, optimizing cost vs. value for specific workloads.

4. Performance

Enterprise applications demand both scale and speed. A mission-critical, enterprise-scale database must deliver consistent, high performance to meet the demands of large-scale applications and workloads. Enterprises require low latency for real-time query processing, high throughput to handle massive transaction volumes, and the ability to scale horizontally as data and user demands grow. Predictable performance is essential under varying workloads, ensuring that spikes in traffic or complex queries do not degrade system responsiveness. Specific areas to consider include:

Elastic Scalability: Elastic vertical and horizontal scalability is critical for achieving high performance in enterprise databases, as it allows the system to dynamically scale resources up or down in response to changing workloads without an outage. By distributing data and queries across multiple nodes, elastic scalability ensures consistent low latency and high throughput even as transaction volumes grow. This capability prevents bottlenecks, minimizes contention, and enables the database to maintain predictable performance under varying demands without costly overprovisioning.

Enterprises should evaluate elastic scalability based on the database’s ability to scale horizontally or vertically across nodes without downtime, reconfiguration, or performance degradation. Key considerations include automated data balancing, linear performance improvements as nodes are added, and efficient resource utilization across regions. Additionally, enterprises should test the database’s ability to handle spikes in traffic, ensure minimal latency under heavy loads, and seamlessly scale back during quieter periods to optimize infrastructure costs.

CockroachDB achieves elastic scalability through its distributed SQL architecture, which enables vertical scaling as well as horizontal scaling across nodes with minimal operational effort and without an outage. It automatically distributes data and workloads, balancing load to eliminate hotspots and maintain high throughput. As demand increases, new nodes can be added seamlessly, without additional configuration or downtime, while CockroachDB keeps performance predictable. This elasticity allows enterprises to handle growing workloads, accommodate traffic spikes, and maintain low-latency access across regions, delivering reliable performance at scale.

Optimized Worldwide Access: Optimized access across multiple geographies ensures that enterprise databases deliver low latency and high throughput for globally distributed applications. By strategically placing data close to users in different regions, performance bottlenecks caused by long network round trips are minimized. This geographic optimization is critical for maintaining a responsive user experience and supporting mission-critical workloads, as it balances global consistency with fast, localized access to data.

Enterprises should evaluate multi-region database performance by assessing features like geo-partitioning, which allows data to be colocated with users, and latency optimization for queries across nodes distributed between data centers, cloud availability zones, and cloud regions. The database should minimize data movement while ensuring global consistency and high availability. Organizations must also test how the system handles regional failures, replication delays, and scalability across multiple geographies to ensure uninterrupted throughput and predictable performance.

CockroachDB optimizes performance across multiple geographies through its geo-partitioning and multi-active availability features, which allow data to be placed close to users while maintaining global consistency. It automatically replicates and balances data across regions to minimize latency and sustain consistently high throughput, even as workloads scale. By routing queries to the nearest node and failing over automatically when a region is disrupted, CockroachDB delivers strong performance and resilience for globally distributed applications.
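The nearest-node routing and regional failover described above can be pictured with a small Python sketch. The region names, latency figures, and the `pick_region` helper are hypothetical and exist only for illustration; they do not represent CockroachDB's internal routing logic.

```python
# Hypothetical round-trip latencies (ms) from a client in Europe to each region.
LATENCY_MS = {"eu-west": 5, "us-east": 80, "ap-south": 140}


def pick_region(latency_ms: dict, healthy: set) -> str:
    """Return the healthy region with the lowest latency for this client."""
    candidates = {r: ms for r, ms in latency_ms.items() if r in healthy}
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


all_regions = set(LATENCY_MS)

# Normal operation: the client is served from the closest region.
normal = pick_region(LATENCY_MS, all_regions)

# Regional disruption: the closest region is down, so the next closest serves.
failover = pick_region(LATENCY_MS, all_regions - {"eu-west"})

print(normal, failover)  # eu-west us-east
```

The point of the sketch is the trade-off: requests stay local while the local region is healthy, and latency rises but service continues when it is not.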

Intelligent Query Optimization: Without optimization, queries are run in their raw form, which can result in suboptimal execution plans, such as scanning entire tables, inefficient joins, or poor use of indexes. This leads to higher latency, increased resource contention, and slower response times, especially as data size and query complexity grow. A query optimizer analyzes the structure of a query, data distribution, and available indexes to determine the most efficient execution path, minimizing computation and I/O. For mission-critical applications, an optimizer is essential to maintain consistent performance, scalability, and reliability, ensuring the database can handle large-scale, complex workloads without manual intervention or costly bottlenecks.

When selecting a mission-critical enterprise database, enterprises should consider a query optimizer with features that ensure efficient, reliable performance at scale. Key capabilities include cost-based optimization (CBO), which evaluates multiple execution plans and selects the most efficient one using accurate statistics on data size, distribution, and indexes. The optimizer must dynamically adapt to workload changes and data growth without requiring manual intervention. It should handle complex queries involving joins, filters, and aggregations while minimizing resource consumption and query latency. Finally, the optimizer should operate automatically out of the box, reducing operational overhead and ensuring consistent, high performance for mission-critical workloads.

CockroachDB enhances query performance for mission-critical applications using its cost-based optimizer, which analyzes SQL queries and selects the most efficient execution plan. It leverages automatically collected statistics — such as row counts, data distribution, and index usage — to minimize resource usage and query latency. The optimizer considers factors like data locality and distribution to reduce network hops, ensuring efficient query execution across CockroachDB's distributed SQL architecture. By automating optimization and adapting to changing workloads, CockroachDB delivers consistent, high-performance query execution for complex transactions and real-time analytics.
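As a rough illustration of what cost-based optimization means, the Python sketch below compares two candidate plans for a single equality filter using table statistics. The cost formulas, the `TableStats` class, and the numbers are simplifying assumptions made for this example and are not CockroachDB's real cost model.

```python
from dataclasses import dataclass


@dataclass
class TableStats:
    row_count: int        # total rows in the table
    distinct_values: int  # distinct values in the filtered column


def plan_costs(stats: TableStats) -> dict:
    """Estimate rows touched by each candidate plan for `WHERE col = ?`."""
    # Assumes values are uniformly distributed across rows.
    matching = stats.row_count / stats.distinct_values
    return {
        "full_scan": float(stats.row_count),  # read every row
        "index_scan": matching * 2,           # index entry plus table lookup per match
    }


def choose_plan(stats: TableStats) -> str:
    """Pick the plan with the lowest estimated cost."""
    costs = plan_costs(stats)
    return min(costs, key=costs.get)


selective = TableStats(row_count=1_000_000, distinct_values=50_000)
unselective = TableStats(row_count=1_000_000, distinct_values=1)

print(choose_plan(selective))    # index_scan
print(choose_plan(unselective))  # full_scan
```

The same query gets a different plan depending on the statistics, which is why keeping statistics current matters as data grows and its distribution shifts.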

Advanced Indexing: Without optimal indexing, enterprise database performance degrades as queries rely on full table scans, increasing SQL latency and resource consumption. Complex operations like filters, joins, and sorting become slow under heavy workloads, leading to query bottlenecks. In distributed systems, inefficient access patterns cause unnecessary data movement, further impacting scalability and response times. The end result is missed SLA targets, a degraded user experience, and increased infrastructure costs.

To address these problems, modern enterprise databases supporting both structured and unstructured data require advanced indexing features to ensure efficient performance and scalability. Key indexing capabilities offered by enterprise databases include primary and secondary indexes for structured data, multi-column indexes for complex queries, and inverted indexes to enable fast searches within JSON, arrays, and nested data. Features like partial indexes and the STORING option optimize storage and performance by indexing subsets of data and eliminating table lookups. For distributed systems, support for global and local indexes ensures efficient data access across nodes. Automatic index maintenance and adaptive indexing further reduce manual effort and optimize performance over time. These capabilities collectively enable fast, scalable querying of diverse workloads across structured and unstructured datasets.

CockroachDB ensures better performance for both structured and unstructured data through advanced indexing strategies. It uses primary and secondary indexes for efficient lookups, multi-column indexes to optimize queries with filters and joins, and partial indexes to target frequently accessed data subsets. For unstructured JSONB data, CockroachDB employs inverted indexes for fast searches and filtering. Additionally, the STORING clause allows extra columns to be stored in indexes, reducing table lookups. By automatically maintaining and leveraging these indexes, CockroachDB minimizes latency and resource usage, delivering optimized query performance at scale.
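The effect of multi-column and partial indexes on a query plan can be observed with a short Python experiment. Note the substitution: this sketch uses SQLite, which ships with Python, as a stand-in and is not CockroachDB. SQLite has no STORING clause, so a composite index approximates the idea of answering a query from the index alone. The table and index names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.execute(
    "CREATE TABLE tickets (id INTEGER PRIMARY KEY, assignee INTEGER, status TEXT)"
)


def explain(sql: str) -> str:
    """Return SQLite's description of how it would execute `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the plan text


lookup = "SELECT total FROM orders WHERE customer_id = 42"
before = explain(lookup)  # no secondary index yet, so the table is scanned

# Multi-column index: both the filter column and the selected column live in
# the index, so the base table does not need to be read.
conn.execute("CREATE INDEX idx_customer_total ON orders (customer_id, total)")
after = explain(lookup)

# Partial index: only rows with status = 'open' are indexed.
conn.execute(
    "CREATE INDEX idx_open_tickets ON tickets (assignee) WHERE status = 'open'"
)
partial = explain("SELECT id FROM tickets WHERE assignee = 7 AND status = 'open'")

print(before, after, partial, sep="\n")
```

The first plan reports a scan, the second reports a search using a covering index, and the third uses the partial index because the query's filter matches the index's condition. The exact wording of the plan text varies between SQLite versions.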

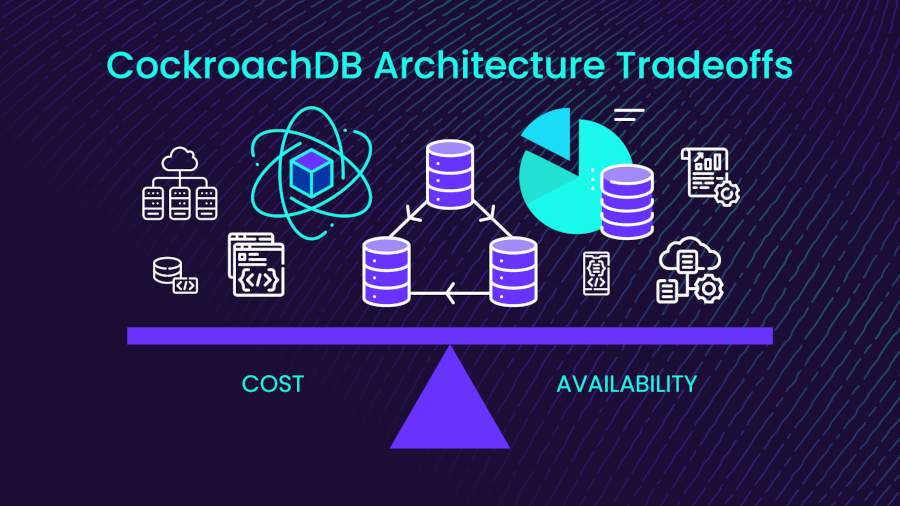

5. Cost optimization

Cost optimization is crucial for a mission-critical enterprise database because it ensures the database delivers high performance, availability, and scalability while maintaining economic efficiency. Mission-critical systems require robust infrastructure to handle large-scale workloads, ensure high availability, and meet stringent SLAs. However, without cost optimization, over-provisioning of resources can lead to unnecessary expenses, while under-provisioning risks performance bottlenecks, downtime, and data loss — potentially disrupting operations and damaging customer trust.

As enterprises scale, managing costs without compromising on performance becomes critical. CockroachDB enables cost optimization through:

Commodity Infrastructure: Historically, enterprise mission-critical systems relied on proprietary and expensive database infrastructure, requiring specialized hardware, costly licenses, and vendor support to meet performance and reliability demands. These vertically scaled systems were expensive to procure, difficult to scale, and prone to single points of failure, resulting in high capital and operational costs. Scaling required purchasing larger servers, which lacked flexibility and became cost-prohibitive as data volumes grew. Vendor lock-in further limited choice and agility. These challenges highlighted the need for modern, distributed databases that use commodity infrastructure to deliver scalability, resilience, and cost efficiency for today’s dynamic, global workloads.

Using commodity infrastructure improves cost optimization by enabling enterprises to run databases on affordable, widely available hardware instead of expensive, proprietary systems. It allows for horizontal scaling by adding low-cost nodes, achieving high availability and fault tolerance without specialized hardware. Additionally, commodity infrastructure offers flexibility for multi-cloud or hybrid deployments, ensuring cost-effective scaling and reducing operational expenses while maintaining performance and resilience for mission-critical workloads.

CockroachDB optimizes costs for mission-critical enterprise systems by leveraging commodity hardware and its distributed SQL architecture to enable horizontal scaling across low-cost servers. This eliminates the need for expensive, proprietary hardware and allows incremental scaling as workloads grow. Built-in fault tolerance and automatic data replication ensure high availability, even if individual servers fail, reducing failover costs. CockroachDB also supports multi-cloud and hybrid deployments, enabling the use of cost-effective infrastructure from providers like AWS (Intel- and Graviton-based instance types), GCP, or Azure. With automated operations like backups, load balancing, and rolling upgrades, CockroachDB minimizes administrative overhead while delivering performance, resilience, and cost efficiency.

Improved Process Efficiency: Enterprise database management involves labor-intensive activities that drive up costs and consume valuable resources. Tasks like manual database sharding, server repaving, and schema changes require significant time and expertise to implement and maintain. Capacity planning, performance tuning, and managing backups or disaster recovery demand ongoing effort, often leading to resource inefficiencies. Additionally, patch management, upgrades, and failover processes require coordination, testing, and downtime to ensure stability. Continuous monitoring and troubleshooting further increase operational overhead. By streamlining and automating these processes, organizations can reduce labor costs, minimize errors, and allow database administrators to focus on higher-value tasks like innovation, optimization, and strategic initiatives, improving overall efficiency and reliability for mission-critical systems.

When evaluating a mission-critical database, enterprises should prioritize labor-saving features that reduce manual effort and operational overhead. Key capabilities include automatic sharding and rebalancing to manage data growth, automated failover and recovery for high availability, and zero-downtime upgrades to simplify patching. Features like elastic scaling eliminate complex capacity planning, while self-healing resolves common issues without manual intervention. Additionally, automated backups, integrated performance monitoring, and online schema changes streamline maintenance and tuning. These capabilities free up database administrators to focus on higher-value initiatives, improving efficiency, reliability, and scalability for mission-critical workloads.

CockroachDB provides labor-saving features that optimize costs by automating traditionally manual and resource-intensive database management tasks. It offers automatic sharding and rebalancing, distributing data seamlessly across nodes as workloads grow, eliminating the need for manual intervention. Built-in fault tolerance and automated failover ensure high availability, recovering from node or regional failures without operator involvement. Rolling upgrades and automated patching allow for zero-downtime updates, reducing planned maintenance efforts. CockroachDB’s self-healing capabilities detect and resolve issues like node failures automatically, minimizing troubleshooting. Additionally, features like automated backups, online schema changes, and integrated performance monitoring simplify operations, reducing the need for manual tuning and management. By automating these tasks, CockroachDB lowers administrative overhead, improves efficiency, and allows enterprises to control costs while maintaining mission-critical reliability and performance.

Usage-Based Scaling: Historically, enterprise databases were provisioned with fixed, over-provisioned resources to handle peak workloads, resulting in costly idle capacity during low-usage periods. This inefficiency led to high infrastructure costs and underutilized hardware, limiting an enterprise's ability to affordably grow capacity. Usage-based scaling strategies solved these problems by enabling databases to scale resources dynamically based on actual daily, weekly, and seasonal demand patterns. Instead of paying for unused capacity, enterprises can scale horizontally during traffic spikes and scale back during idle periods, optimizing resource utilization and reducing costs. This approach ensures performance and availability while aligning infrastructure expenses with real workload needs.

When evaluating a mission-critical database, enterprises should prioritize usage-based scaling for performance and cost efficiency. Key features include horizontal scalability to add or remove nodes dynamically, automatic workload balancing to prevent hotspots, and elastic capacity management for seamless compute and storage scaling.

The system should align costs with actual demand through pay-as-you-go efficiency and support multi-region scalability for low-latency access. Importantly, scaling must be responsive, ensuring resources scale up quickly during demand spikes (e.g., work hours) and decommission smoothly during low usage, with no data loss or outages. These capabilities optimize performance, control costs, and ensure resilience for mission-critical workloads.
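The arithmetic behind usage-based scaling can be sketched in a few lines of Python. Every figure here is a hypothetical assumption made for illustration: the per-node capacity of 5,000 queries per second, the three-node minimum, and the demand values. The `nodes_needed` helper is not part of any CockroachDB tooling.

```python
import math


def nodes_needed(demand_qps: float, qps_per_node: float, min_nodes: int = 3) -> int:
    """Nodes required to serve `demand_qps`, never dropping below `min_nodes`."""
    return max(min_nodes, math.ceil(demand_qps / qps_per_node))


# Hypothetical demand (queries per second) at four points in a day.
hourly_demand = {"03:00": 2_000, "09:00": 18_000, "13:00": 25_000, "22:00": 6_000}

schedule = {
    hour: nodes_needed(qps, qps_per_node=5_000) for hour, qps in hourly_demand.items()
}
print(schedule)  # {'03:00': 3, '09:00': 4, '13:00': 5, '22:00': 3}

# Compare with provisioning for the peak at all times.
peak_nodes = max(schedule.values())
fixed_node_hours = peak_nodes * len(schedule)
elastic_node_hours = sum(schedule.values())
print(fixed_node_hours, elastic_node_hours)  # 20 15
```

Under these assumptions, scaling with demand uses 15 node-hours across the four sampled hours instead of the 20 needed when the cluster is sized for the peak throughout.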

CockroachDB: No compromises

CockroachDB delivers operational excellence by automating critical database tasks such as sharding, rebalancing, backups, and rolling upgrades, reducing manual effort and minimizing downtime. Its self-healing capabilities ensure high availability by automatically detecting and recovering from node or regional failures. With built-in observability tools and performance monitoring, CockroachDB simplifies operations, enabling database teams to focus on higher-value initiatives while maintaining reliability and efficiency.

In terms of security, CockroachDB provides encryption by default for data at rest and in transit, role-based access control (RBAC), and audit logging to meet stringent compliance requirements from regulations such as GDPR and HIPAA.

For resiliency, its distributed SQL architecture ensures fault tolerance and continuous availability through automated replication and failover, even across multi-region deployments. CockroachDB delivers performance with intelligent query optimization, efficient indexing strategies, and geo-partitioning to minimize latency and ensure fast, consistent access to data globally.

Lastly, it achieves cost optimization by leveraging commodity hardware, elastic scalability, and automated operations to align infrastructure costs with demand. The result: CockroachDB ensures that enterprises achieve efficiency – without compromising performance or reliability.

Ready to learn how CockroachDB can accelerate your data modernization initiative? Visit here to talk to an expert.

Mark Johnson is Senior Manager, Sales Engineering, for Cockroach Labs.