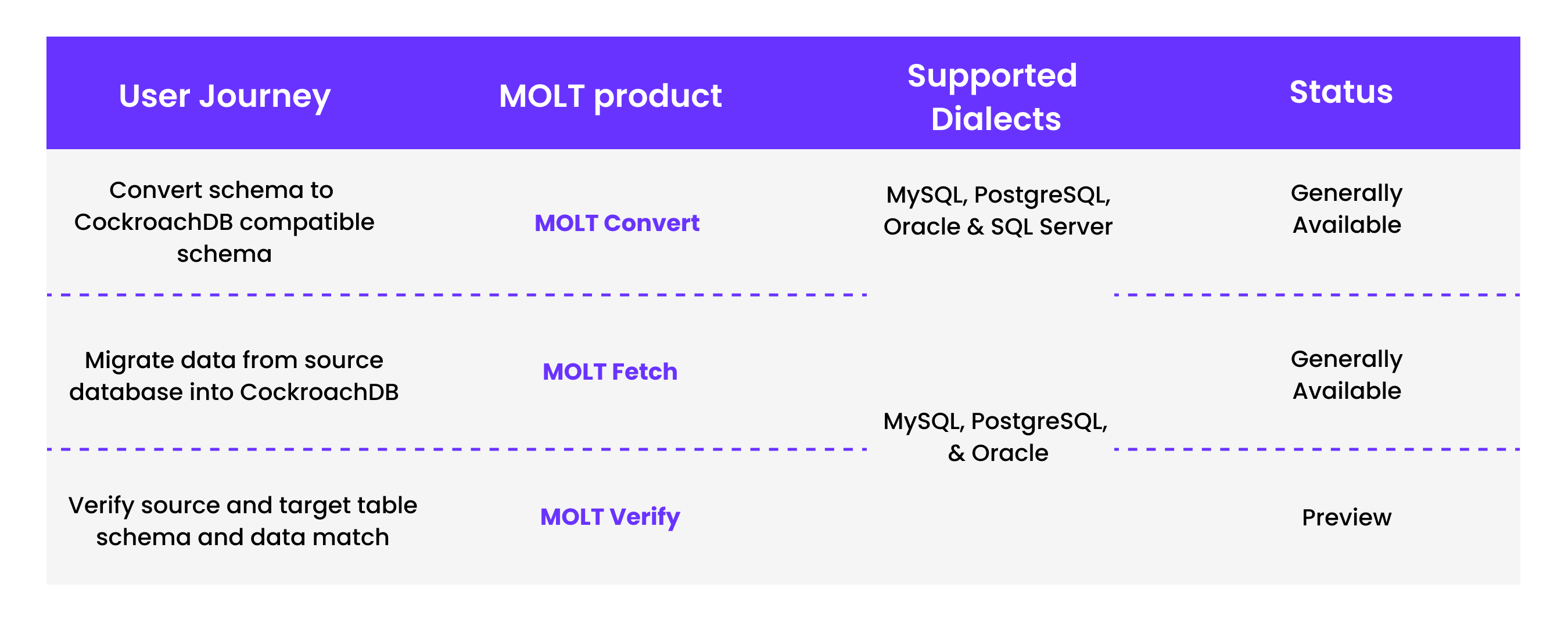

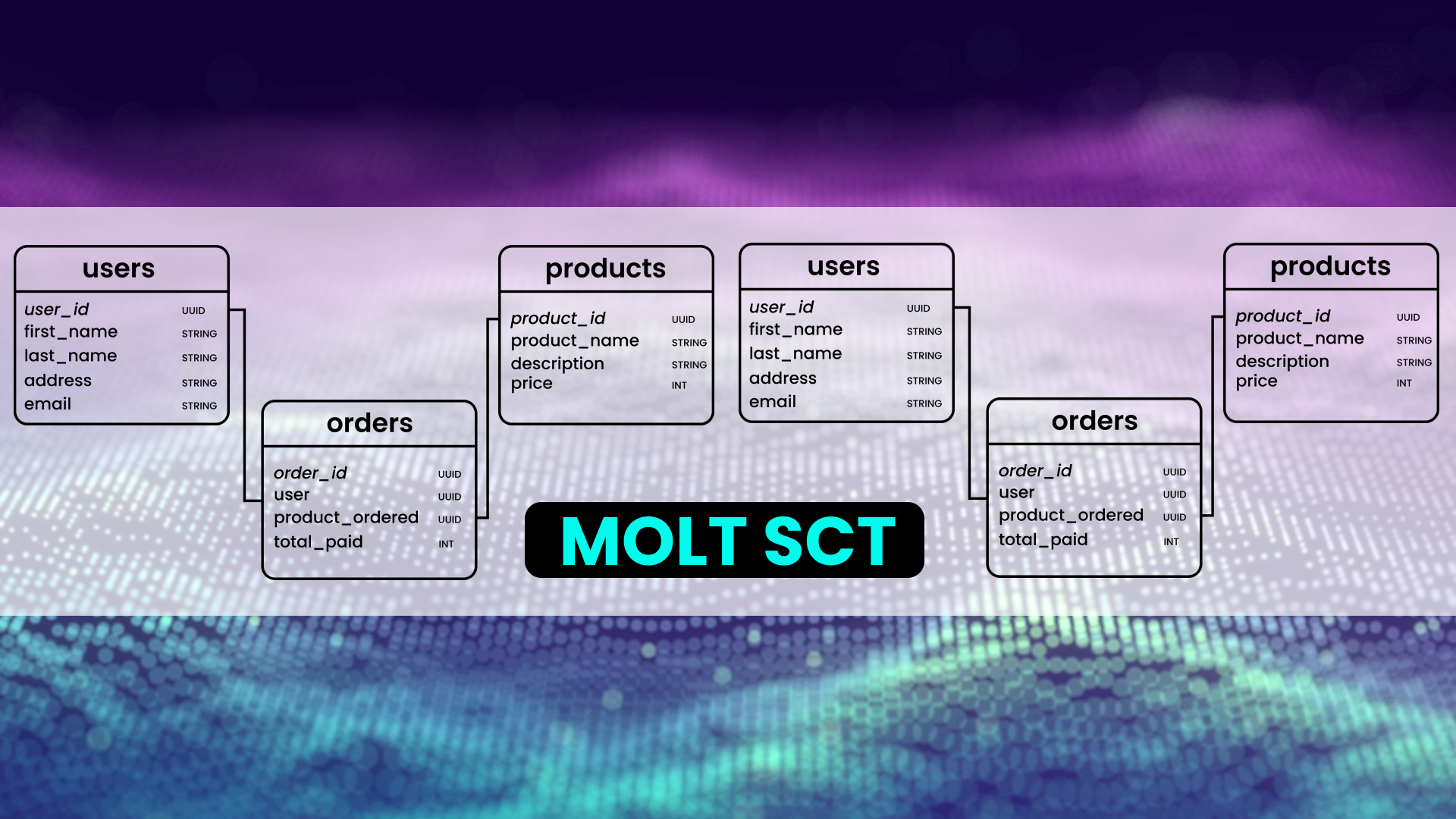

Database migrations are complex, and ensuring the migrated data is 100% correct is critical. After all, a migration isn’t over until you feel confident and comfortable cutting over to the new infrastructure. To streamline migrations, Cockroach Labs created the MOLT (Migrate Off Legacy Technology) toolkit, which includes three tools that improve different parts of the user journey.

Ultimately, we built MOLT Verify to give engineers confidence when moving data from legacy databases to CockroachDB. In this deep dive, we’ll explore what MOLT Verify is, how it works under the hood, and how to use it effectively for seamless migrations. We’ll cover its role in the migration process, the architecture and integration with databases, how it verifies data consistency, and best practices for engineers.

To learn more about the rest of the MOLT tools, check out our blog on MOLT Convert (previously MOLT SCT), and our colleagues’ post on MOLT Fetch.

What is MOLT Verify?

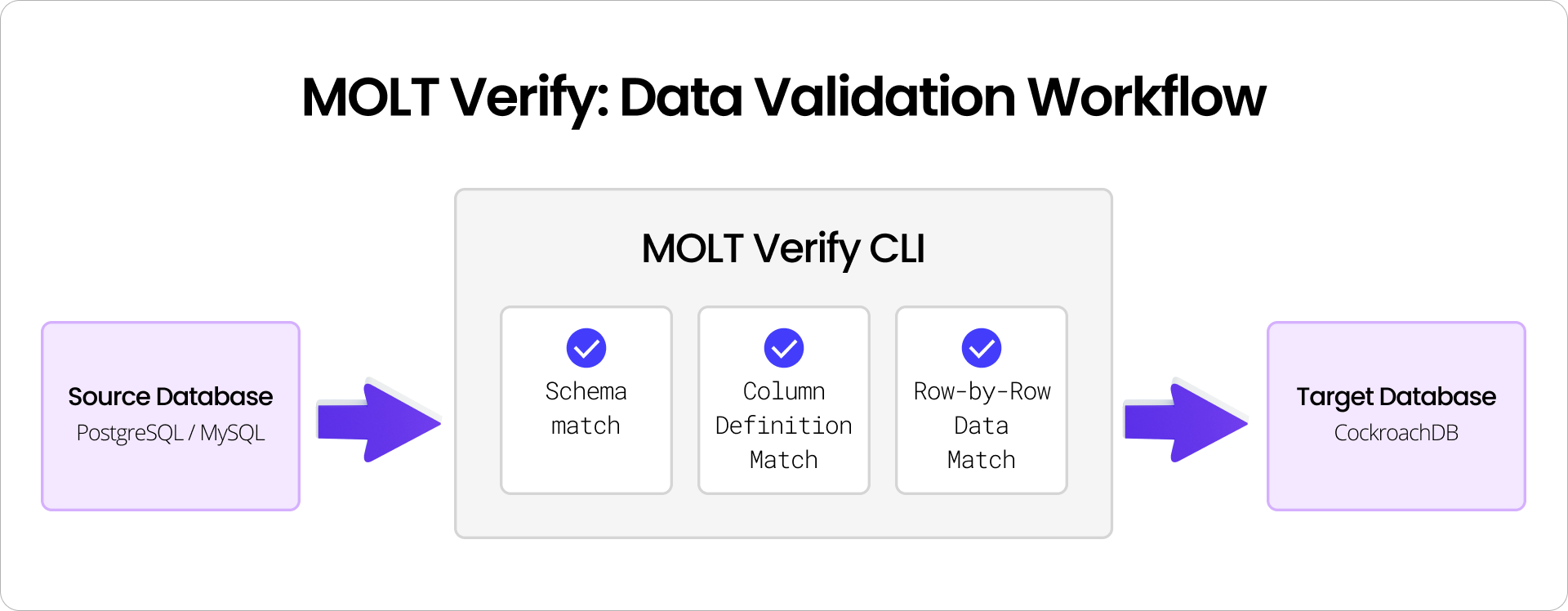

MOLT Verify is a data validation tool in Cockroach Labs’ migration toolkit. Its primary role is to compare a source database (e.g. PostgreSQL or MySQL) against a CockroachDB target to ensure that everything matches after migration. MOLT Verify automatically compares the source and target databases at multiple levels—schema and row-level data validation. This prevents the risk of silent corruption, missing records, or mismatches, and offers an automated, scalable, and repeatable way to validate database migrations in minutes instead of days.

The tool performs a series of checks so you feel confident that your new CockroachDB cluster faithfully mirrors the original database:

Table verification: It confirms that each table in the source has a corresponding table in the target with the same schema definition (table name, number of columns, etc.)

Column definition verification: For each table, it validates that column names, data types, constraints, nullability, and other attributes match between source and target. This catches any schema drift or type conversion issues from the migration.

Row data verification: It compares the actual data in each table, row-by-row, to ensure every value in the source appears identically in CockroachDB.

Supported databases

MOLT Verify currently supports verifying data from PostgreSQL, Oracle, and MySQL sources (as well as CockroachDB itself) against a CockroachDB cluster – covering the most common migration paths. It’s provided as a standalone CLI tool (with downloadable binaries or a Docker image) that you run during migration.

Key Benefits of MOLT Verify

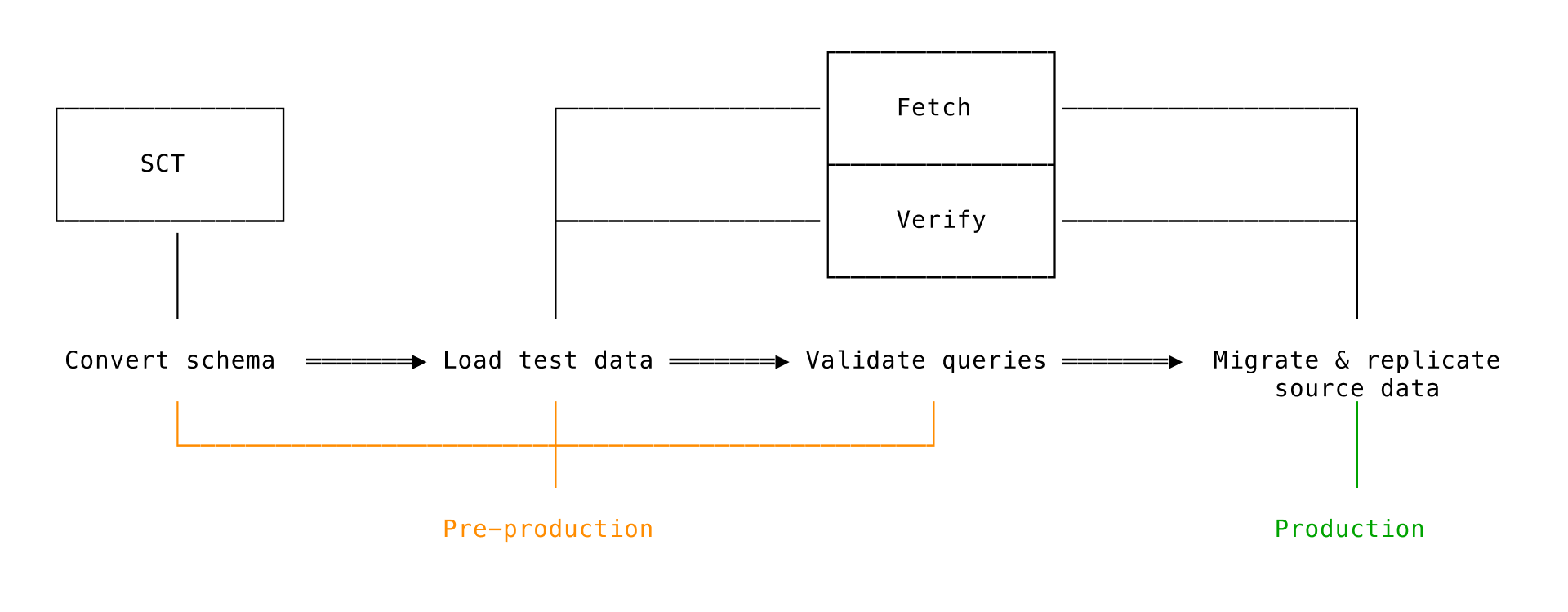

MOLT Verify acts as a safety net in the migration workflow, dramatically reduce the risk of data corruption or loss when migrating off legacy systems. After you’ve loaded data into CockroachDB (whether via bulk import or a live replication tool), running MOLT Verify lets you catch any missing records, mismatched values, or schema inconsistencies before you cut over your application.

In a typical migration plan, you would use MOLT Verify as a final validation step: for example, after all data is replicated to CockroachDB, run MOLT Verify to validate consistency, then proceed with the cutover once everything matches.

In particular, we recognized that large-scale migrations are uniquely challenging with regard to validation due to the sheer volume of data, and manual validation is virtually impossible in such scenarios. MOLT Verify, with its automated verification layer, checks every record in every row in every table, ensuring that the migration is accurate before production cutover.

The benefits of MOLT Verify include:

Cutover without fear of losing or corrupting data

Faster automated data validation checks

Validation beyond row counts: schema consistency, data integrity, row-level discrepancies

Designed for large-scale distributed migrations, validate billions of records systematically and efficiently

Architecture: How MOLT Verify Integrates with CockroachDB and Source Databases

Under the hood, MOLT Verify is built as a standalone tool that connects to both the source and target databases and orchestrates a comparison between them. This design means it can work with heterogeneous systems and will impose minimal load on the source and target clusters, beyond standard SQL reads. Ultimately, how you choose to handle concurrency will affect the load placed on your clusters.

Key points about MOLT Verify’s architecture and integration:

Standard Database Connections: MOLT Verify uses native connection protocols for each database. For example, it connects to CockroachDB using the PostgreSQL wire protocol (since Cockroach speaks Postgres-compatible SQL), and connects to MySQL through the MySQL protocol. This means it doesn’t require any special plugins on the databases; if you can connect via a SQL client, MOLT Verify can connect too.

Multi-Database Support via Abstraction: The tool is designed with an abstraction layer to handle different database flavors. It knows how to retrieve metadata and data from each supported source. It treats the source as the “truth” and CockroachDB as the destination to verify.

Schema Mapping and Assumptions: Because different databases have different ways to organize schemas, MOLT Verify makes some assumptions to simplify the comparison. For example, when comparing MySQL to CockroachDB, it assumes you’re comparing one MySQL database schema to a CockroachDB database’s public schema. MySQL’s notion of a database is analogous to a schema or namespace in Postgres/Cockroach. So if you have multiple schemas or databases to migrate, you would run MOLT Verify separately for each logical schema. This one-to-one mapping ensures the tool knows which tables to line up between source and target.

External Binary with CockroachDB Integration: Cockroach Labs distributes MOLT Verify as part of the MOLT CLI. The CLI package includes a

moltcommand (and a helper binary calledreplicatorused for live replication). You can download it for Linux, Mac, or Windows, or run it via a Docker container.Parallel and Distributed Processing: Architecturally, MOLT Verify employs a multi-threaded design to handle large schemas efficiently. This is critical for integration with CockroachDB, which is a distributed database – MOLT Verify can generate multiple concurrent read requests that CockroachDB will handle on its distributed nodes, thereby speeding up the scan of large tables. Similarly, for a source like Postgres or MySQL, multiple threads can pull data from different tables (or different parts of the same table) concurrently, thus reducing overall runtime.

In summary, the design allows MOLT Verify to slot into migration workflows without requiring special infrastructure – if you can run the binary and connect to your databases, you can use MOLT Verify.

Verification Mechanism: How MOLT Verify Ensures Data Consistency

MOLT Verify’s core responsibility is to ensure data consistency and correctness during migration. It does so by performing a thorough comparison at multiple levels of the database, flagging any discrepancies. Let’s break down the verification mechanism in detail.

Schema and Table Verification

The first thing MOLT Verify checks is that the schema has been migrated correctly. It retrieves the list of tables from the source and the target, and verifies that every expected table exists on CockroachDB with the same schema structure. This includes comparing table names and primary keys, and then drilling down into columns. For each table, it checks all columns to ensure the column names, data types, and attributes (NULL/defaults, constraints, etc.) match between source and target. If, for example, a column in MySQL was an INT but the target schema accidentally used a STRING, or if a NOT NULL constraint was dropped, MOLT Verify will catch those discrepancies.

Row Data Verification

After confirming the schemas align, MOLT Verify moves on to the data itself. This is the most intensive part: it compares the contents of each table row-by-row between the source and CockroachDB. Under the hood, MOLT Verify will typically perform an ordered scan of the table on each database (often using the primary key order to have a consistent traversal) and then compare the rows as it goes. Essentially, it performs a synchronized read from source and target and checks that for every primary key (or unique identifier) present in the source, the target has a row with identical values.

MOLT Verify will report summary counts of matched vs mismatched data for each table. For example, after running, you might see an output (in JSON or log format) per table indicating how many rows were successfully verified and how many differences were found. An example summary line looks like this:

{"table_schema":"public",

"table_name":"common_table",

"num_truth_rows":6,

"num_success":3,

"num_missing":2,

"num_mismatch":1,

"num_extraneous":2,

...

"message":"finished row verification"}In this example, MOLT Verify is reporting that out of 6 rows in the source (“truth”) table, 3 rows matched exactly, 2 rows were missing on the target, 1 row had mismatched data, and 2 extraneous rows were found on the target. Similar statistics are output for each table.

This row-by-row comparison mechanism is essentially performing a massive diff between the two databases. Importantly, MOLT Verify does this in a streaming and memory-efficient way. It processes a chunk of rows at a time (by default 20,000 rows per batch) rather than loading an entire table into memory. It reads a batch from the source and the corresponding batch from the target, compares them, then moves on.

Handling Live Data Changes

In a migration scenario, you might be running MOLT Verify while changes are still trickling into the source (and being replicated to the target). If the source database is still “live,” it’s possible a row could change between the time you read it from the source and the time you read it from the target (or between batches). MOLT Verify accounts for this with the --live flag. When you run with the --live flag, the tool will automatically retry verification on any rows that didn’t match before declaring them as errors. This is useful during continuous replication or minimal-downtime migrations – it prevents false alarms due to timing lags. If after a retry the row still doesn’t match, then it’s a true mismatch. The output even tracks a num_live_retry count to show how many mismatches were fixed by a later retry (so you know how much data was in flux).

There is also a --continuous mode, which tells MOLT Verify to run in a loop continuously – essentially, once it finishes verifying all tables, it will start over until you stop it. This could be used to constantly monitor data consistency during a long-running migration, giving you up-to-date information on whether the target is in sync.

Get all the details in our documentation.

Check out MOLT Verify in action as technical evangelist, Rob Reid validates the migration from his PostgreSQL database to CockroachDB:

Best Practices: Tips for Using MOLT Verify Effectively

To get the most out of MOLT Verify and ensure a smooth migration, consider the following best practices and recommendations:

Run Verification at Key Points in the Migration

Don’t wait until the final switch to run MOLT Verify for the first time. It’s wise to use it in earlier stages as well. For example, after an initial test data load, run MOLT Verify on a subset of data to catch any glaring schema or data issues. During iterative testing (the “validation” phase of migration), use it on smaller samples. By the time you do the full migration, you’ll be familiar with the tool and have confidence that it will likely report zero issues.

Always run a final verification on the full dataset right before cutover (or as part of the cutover) – this is your final gate to ensure consistency. If using continuous replication, keep MOLT Verify running with --continuous so you have up-to-date info, and then do one last run in non-continuous mode when replication is stopped.

Minimize Changes for the Final Verification

While MOLT Verify can work with live data, you will get the cleanest results if the source is static or nearly static during the verification. Try to schedule the verification during a read-only window on the source if possible. Many teams will stop application writes, let the replication catch up, then run MOLT Verify on a static snapshot of data. This avoids the complexity of transient mismatches. If you must verify while changes are happening, definitely use --live to filter out false mismatches, and possibly run multiple passes (--continuous) until the mismatches go to zero.

Tune Concurrency and Batching for Your Environment:

You can adjust --concurrency, --concurrency-per-table, and --row-batch-size to optimize runtime. The --concurrency flag toggles how many tables to verify at a time, and then --concurrency-per-table allows you to adjust how many threads should run per table. Start with defaults, and if you find verification is taking too long, consider increasing concurrency. If you do raise concurrency, monitor the source database – you don’t want to accidentally overload production with read queries. It might be useful to test on a staging environment to gauge how much concurrency the source can handle.

If the network is a bottleneck, see if larger batches improve throughput. Conversely, if you run into memory constraints on the MOLT Verify side (or if either database starts to see issues like long transaction times), you might lower the batch size to reduce the footprint. The goal is to make verification as fast as possible without jeopardizing the stability of your systems.

MOLT Verify also offers --schema-filter and --table-filter options (accepting regex patterns) to limit what it checks. This is useful for phased migrations. For instance, if you are migrating in waves, you could verify one schema at a time by filtering. Or if one giant table dominates the runtime, you might isolate it in a separate run.

RELATED

Check out our tutorials on how to migrate from PostgreSQL or MySQL to CockroachDB.

MOLT to CockroachDB with Confidence

Ensuring data integrity during migrations is crucial for any organization. MOLT Verify provides an automated, comprehensive validation process that gives you the confidence to complete your migration swiftly and without the fear of data corruption. Remove guesswork from your migrations with MOLT Verify, validating every record systematically before production.

To recap best practices: use the tool early and often, verify in as controlled an environment as possible, pay attention to its output, and address any problems it uncovers. Migrations are like a high-stakes endeavor, but with tools like MOLT Verify, you have a stethoscope to check the health of your data before declaring the operation a success.

Get started with CockroachDB Cloud today. We’re offering $400 of free credits to help kickstart your CockroachDB journey, or get in touch today to learn more.