This article is the latest in “Mainframe to Distributed SQL”, an original series from Cockroach Labs. Please visit here for the previous installments:

Transitioning from legacy mainframe systems to distributed databases marks a monumental shift for any organization.

While the promise of scalability, cost-efficiency, and enhanced performance is compelling, the migration process itself presents significant challenges. Managing change and mitigating risks are critical components of ensuring a seamless transition. Without proper planning, the migration could lead to data integrity issues, prolonged downtime, and resistance from stakeholders.

This article, the seventh and final in Cockroach Labs’ “Mainframe to Distributed SQL Series”, explores key strategies for managing mainframe migration’s complexities, from addressing organizational resistance to ensuring technical compatibility. It also delves into risk mitigation techniques such as phased migration approaches, robust testing frameworks, and data validation methods, all of which minimize disruption and maximize the benefits of distributed database adoption.

Whether you're in the early stages of migration planning or in the thick of navigating your transition, this article offers actionable insights to help you manage change and mitigate risks effectively.

Change management strategies: Navigating organizational and cultural shifts

Transitioning from mainframe systems to distributed database architectures requires more than technical adjustments — it involves significant organizational and cultural changes. These shifts can impact workflows, roles, and the broader organizational mindset.

A robust change management strategy is critical to ensuring that employees adapt to the new systems, processes remain efficient, and the migration delivers its intended value. Following are key strategies for developing an effective change management plan:

1. Build a Clear Vision and Communicate Early: It is crucial for employees to understand the migration’s purpose and how it aligns with the organization’s goals.

Developing a compelling narrative that explains the “why” behind the transition — such as improved scalability, cost-efficiency, or future-proofing — helps create clarity and engagement. Clearly articulating the benefits for employees, including enhanced tools, reduced workloads, or opportunities for skill development, fosters a sense of inclusion and purpose. Early and consistent communication through meetings, emails, and internal platforms is essential to reduce uncertainty, build trust, and ensure alignment throughout the migration process.

2. Engage Stakeholders at Every Level: Gaining buy-in from leadership and employees is necessary for ensuring smoother adoption of a migration effort and mitigating pushback.

Identifying key stakeholders, such as executives, team leads, and technical specialists, allows organizations to build a strong group of migration champions. Involving these stakeholders in decision-making processes, including tool selection, workflow redesigns, and timeline planning, fosters a sense of ownership and alignment. Establishing cross-functional committees further ensures that the migration strategy aligns with business needs and operational realities – this promotes collaboration and reduces potential roadblocks.

3. Provide Comprehensive Training and Upskilling: Transitioning to distributed architectures often requires employees to adopt new tools, processes, and skillsets, making training and development critical to success.

Offering tailored training programs for specific roles, such as database administrators, developers, and operations teams, ensures that employees are equipped with the knowledge they need. Hands-on workshops, e-learning platforms, and certification programs can help to effectively build technical proficiency. By highlighting how these new skills align with career growth opportunities, organizations can boost their employees’ motivation and engagement, which fosters a more committed and skilled workforce.

4. Redesign Workflows and Processes: Distributed database architectures introduce new processes for data management, system monitoring, and troubleshooting, making it essential to adapt workflows accordingly.

Conducting process mapping sessions helps identify how workflows will change and ensures the new processes are clearly documented. Aligning these workflows with the capabilities of distributed database systems, such as horizontal scaling, replication, and fault tolerance, maximizes their efficiency and effectiveness. Piloting new workflows with smaller teams before scaling them organization-wide allows for iterative improvements and smoother adoption.

5. Foster a Culture of Adaptability: A shift to distributed systems often disrupts established routines and cultural norms, requiring a mindset shift toward flexibility and innovation.

Encouraging open dialogue about the migration fosters transparency and creates safe spaces for employees to voice concerns and share feedback. Recognizing and rewarding teams and individuals who embrace the changes and contribute to the migration's success reinforces positive behavior. Enterprises can promote a growth mindset by positioning the migration as an opportunity for organizational evolution – this helps employees see the value in adapting, which fosters resilience and collaboration in a competitive environment.

6. Monitor Progress and Adapt: What is change management? Change management is an ongoing process that demands regular assessment and adjustment to ensure its effectiveness.

Using surveys, focus groups, and feedback sessions helps gauge employee sentiment and identify areas of resistance or confusion. Monitoring adoption metrics, such as tool usage rates, training completion, and error reports, provides valuable insights into the transition’s progress. Proactively addressing challenges and updating the organizational change management plan based on real-time feedback ensures that the process remains aligned with enterprise goals and employee needs.

Risk mitigation and contingency planning: Proactively addressing migration challenges

Migrating from mainframe systems to distributed database architectures is a complex process that involves numerous technical, operational, and organizational risks. Without careful planning and proactive measures, these risks can lead to data loss, downtime, or project delays.

Developing a comprehensive risk mitigation and contingency planning strategy is essential to ensure a smooth and successful migration. This section outlines key risks, strategies to mitigate them, and contingency planning best practices.

Identifying key risks in database migration

What is risk mitigation? Understanding potential risks is essential for planning a successful database migration and addressing challenges effectively with a sound risk mitigation plan. Migration projects, particularly those involving legacy systems transitioning to modern distributed architectures, are susceptible to several common risks:

1. Data Integrity Issues: Errors during data migration can result in inconsistencies between the source and target systems, impacting functionality and decision-making. Ensuring data accuracy requires rigorous testing and validation processes at every migration stage.

2. Downtime and Service Interruptions: Unplanned outages during migration can disrupt business operations and negatively impact user experience. Minimizing downtime involves careful scheduling, robust backup plans, and adopting strategies such as online or phased migrations to maintain service continuity.

3. Technical Compatibility Challenges: Legacy systems often have unique configurations or dependencies that may be difficult to replicate in modern distributed environments. Compatibility issues can be addressed by conducting comprehensive system assessments and leveraging tools that support seamless integration.

4. Resistance to Change: Organizational resistance, whether from leadership or employees, can slow adoption and create roadblocks. As described earlier, effective communication, training, and stakeholder involvement are crucial to fostering buy-in and easing the transition.

5. Timeline and Budget Overruns: Unforeseen technical challenges or scope changes can lead to extended timelines and increased costs. Detailed planning, clear milestones, and contingency budgeting help mitigate these risks and keep projects on track.

6. Security Vulnerabilities: Data in transit or within the target environment is susceptible to breaches and compliance risks during migration. Employing encryption, secure transfer protocols, and access controls is vital to protecting sensitive information and ensuring regulatory compliance.

Strategies for risk mitigation in database migration

To address the risks associated with database migration, organizations can implement several proactive measures to ensure data integrity, minimize disruptions, and build trust among stakeholders.

First, comprehensive assessment and planning are critical first steps in mitigating migration risks. Organizations should conduct a thorough evaluation of their existing systems, analyzing data structures, integrations, and dependencies. This process helps identify potential conflicts, such as unsupported features or complex workflows, and allows teams to create detailed resolution plans, ensuring a smooth transition.

It’s worth repeating that training and communication are essential for fostering collaboration and trust during the migration process. Comprehensive training programs for technical teams on distributed database technologies ensure they are equipped to manage new systems effectively. Simultaneously, open communication with stakeholders helps address concerns, provide updates, and build confidence in the project’s success.

In this technical part, data validation and testing play a vital role in maintaining consistency and reliability throughout the migration process. Automated tools, such as Cockroach Labs’ MOLT Verify, can be used to validate data before, during, and after migration, quickly detecting and resolving discrepancies. A parallel testing phase, where both legacy and distributed systems operate simultaneously, allows teams to compare outcomes and verify that the new system performs as expected.

Adopting a phased migration approach can help reduce the risks of widespread disruptions. Incremental methods, such as the Strangler Fig Pattern, allow components to be migrated and tested in manageable stages. By limiting the scope of each phase, teams can identify and address issues early, which helps to avoid large-scale problems later on.

Finally, technical safeguards are crucial for protecting data and ensuring system reliability. Encrypting data both during transit and at rest protects sensitive information from breaches. Robust backup systems ensure quick recovery in the event of failures or data loss. Additionally, tools like change data capture (CDC) enable real-time synchronization and replication, maintaining consistency between source and target systems.

Contingency planning best practices for database migration

Even with robust risk mitigation strategies, unexpected challenges can arise during database migration. A well-prepared contingency plan ensures minimal disruption in such cases and allows organizations to respond effectively to unforeseen issues.

For instance, developing rollback procedures is a critical component of contingency planning: Organizations should establish a clear plan to revert to the legacy system if critical issues occur during migration. Regularly testing these rollback procedures ensures they can be executed effectively under pressure, which minimizes downtime and data loss.

Also, preparing disaster recovery plans is essential for handling scenarios such as system outages, data loss, or security breaches. Detailed recovery protocols should be in place, and regular disaster recovery drills should be conducted to test the organization’s readiness to respond to such events.

Additionally, building redundancies into the migration process enhances continuity and reliability. Failover systems and redundant databases can keep operations running smoothly, even during unexpected disruptions. Dual-write techniques, where data is synchronized between legacy and distributed databases, provide additional assurance until the migration is fully complete.

Overall, real-time monitoring and rapid response are vital for addressing issues as they arise. Monitoring tools like Prometheus or Datadog can track system performance and detect anomalies early. Establishing a rapid response team ensures that any problems are dealt with immediately, reducing the risk of extended downtime or data integrity issues.

Performance monitoring and optimization: Ensuring stability post-migration

Successfully migrating from a mainframe to a distributed database architecture is only the beginning. The true test of a migration’s success lies in how well the new system performs and remains stable over time. Distributed databases offer many benefits, including scalability and fault tolerance, but they also require proactive monitoring and optimization to maintain their performance.

Distributed databases are complex, often spanning multiple nodes, regions, and workloads. Establishing a robust monitoring framework and continuous optimization practices is critical for ensuring that the system operates efficiently and meets evolving business demands. Steps that organizations can take include:

Regularly track query execution times to identify and optimize slow-running queries, ensuring faster data retrieval

Keep an eye on resource utilization by monitoring CPU, memory, disk I/O, and network bandwidth to prevent potential bottlenecks that could degrade performance

Verify replication health across nodes to ensure data consistency and prevent synchronization issues

Measure read and write latency to detect and address delays in data processing promptly

Monitor error logs and transaction failure rates to troubleshoot and resolve issues effectively, to minimize disruptions and maintain smooth operations

You can use different tools like Prometheus, or Datadog to keep an eye on your database performance. If you’re working with the distributed SQl database CockroachDB, you can simply use its built-in monitoring tools:

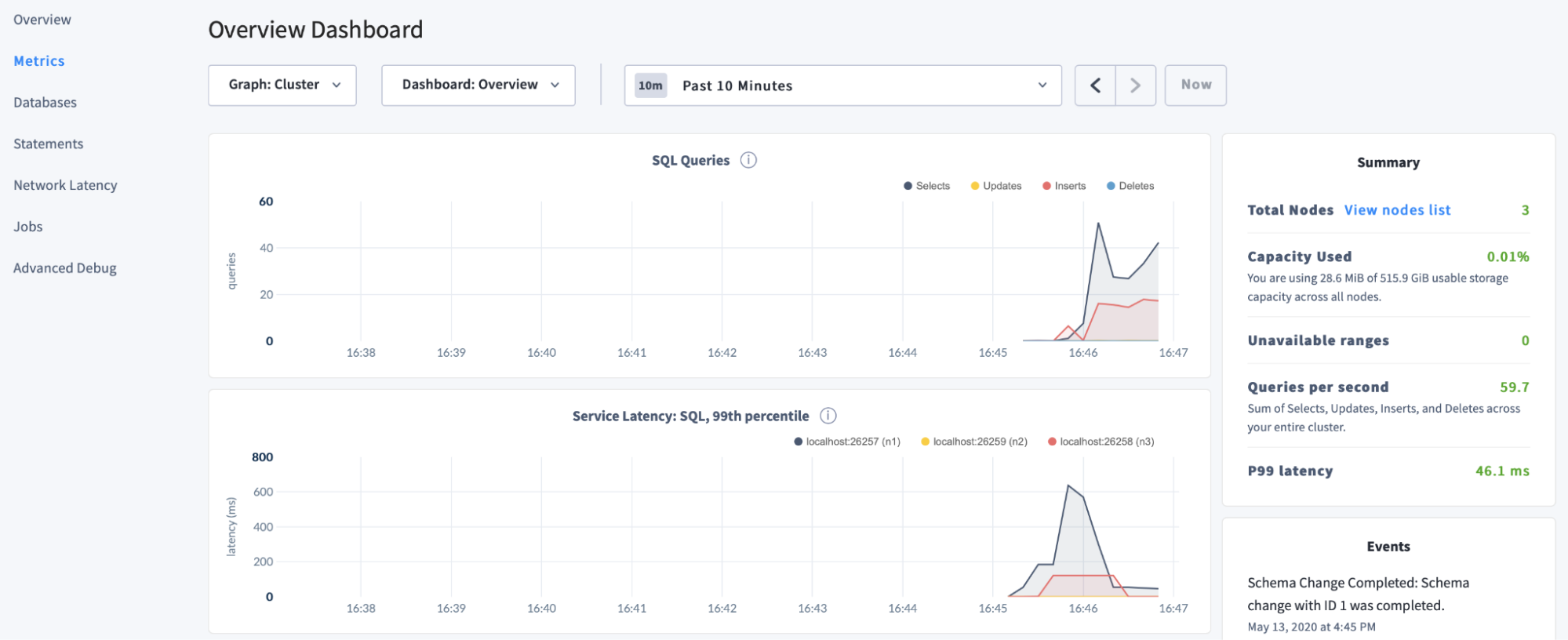

DB Console: The DB Console collects time-series cluster metrics and displays basic information about a cluster's health, such as node status, number of unavailable ranges, and queries per second and service latency across the cluster. This tool is designed to help you optimize cluster performance and troubleshoot issues. The DB Console is accessible from every node at http://<host>:<http-port>, or http://<host>:8080 by default.

Metrics dashboards: The Metrics dashboards, which are located within Metrics in DB Console, provide information about a cluster's performance, load, and resource utilization. The Metrics dashboards are built using time-series metrics collected from the cluster. By default, metrics are collected every 10 minutes and stored within the cluster, and data is retained at 10-second granularity for 10 days, and at 30-minute granularity for 90 days.

SQL Activity pages: The SQL Activity pages, which are located within SQL Activity in DB Console, provide information about SQL statements, transactions, and sessions.

Cluster API: The Cluster API is a REST API that runs in the cluster and provides much of the same information about your cluster and nodes as is available from the DB Console or the Prometheus endpoint, and is accessible from each node at the same address and port as the DB Console.

Proactive alerts play a critical role in ensuring a rapid response to potential problems, helping to prevent cascading failures and extended downtime. Setting up thresholds for critical metrics, such as latency, disk space usage, and error rates, allows for real-time detection of anomalies. Automated alerts delivered through email, SMS, or monitoring dashboards notify relevant teams immediately, enabling swift corrective action. Meanwhile, advanced systems can leverage machine learning-powered anomaly detection to identify unusual patterns, providing early warnings for issues that might otherwise go unnoticed.

Regular health checks are vital for identifying risks and maintaining the overall health of the database environment. Key health check activities include:

Load Testing: Simulating peak loads helps evaluate how the system performs under stress, ensuring it can handle real-world traffic spikes without failure.

Data Integrity Verification: Validation scripts can be used to ensure data consistency across nodes, identifying and resolving discrepancies before they affect operations.

Security Audits: Periodically reviewing access controls, encryption policies, and security patches prevents vulnerabilities and ensures the system meets compliance standards.

Naturally, a culture of continuous improvement is essential for maintaining the efficiency and adaptability of distributed database environments. These systems are dynamic, and ongoing refinement is necessary to accommodate changing workloads, business needs, and technological advancements.

Regular performance reviews should be scheduled to evaluate the system’s health and identify areas for optimization. These reviews can uncover inefficiencies, such as slow queries or underperforming nodes, allowing teams to implement timely improvements. Additionally, gathering feedback from developers and users provides valuable insights into how queries and workflows can be optimized to enhance system usability and performance.

Staying updated on database updates and best practices is critical for leveraging new features and enhancements. Modern databases frequently introduce improvements in performance, security, and functionality, which can significantly benefit overall system capabilities when applied effectively.

A transformative journey

Migrating from legacy systems to distributed database architectures is a transformative journey that promises scalability, cost-efficiency, and enhanced performance. However, this transition is not without challenges. Effective change management, comprehensive risk mitigation, and robust contingency planning are a must to navigate the complexities of migration and ensure a smooth process.

Key strategies, such as phased migration approaches, rigorous testing frameworks, and proactive monitoring, enable organizations to address technical, operational, and organizational risks effectively. Training and communication foster collaboration and buy-in from stakeholders, while technical safeguards, such as data encryption and real-time synchronization, guard against vulnerabilities.

Once migration is complete, the focus shifts to maintaining the stability and efficiency of the new distributed systems. Proactive monitoring, regular health checks, and a culture of continuous improvement help organizations adapt to evolving business demands and best leverage advancements in database technologies.

Ultimately, a well-executed database migration is not just a technical achievement but a strategic investment in an organization’s future. By following best practices and lessons learned from successful case studies, organizations can position themselves for long-term success in a dynamic and competitive landscape.

Confidently plan your path to database modernization with The Architect's Guide to SQL Database Modernization: Your Step-by-Step Roadmap.

Amine El Kouhen, Ph.D., is Sr. Partner Solutions Architect at Cockroach Labs