We are excited to introduce Logical Data Replication (LDR) in Preview in the 24.3 release of CockroachDB. LDR replicates data between CockroachDB clusters while allowing both clusters to actively receive traffic during replication.

Our customers choose CockroachDB as their database for our differentiators around scale, resiliency, and consistency. CockroachDB's Raft replication is designed to provide strong consistency and high availability by replicating data across multiple nodes within a cluster. When these nodes are deployed in three or more regions, they form a multi-region cluster that can achieve regional survivability, meaning it can survive node, availability zone (AZ), and even region outages with minimal data loss or downtime.

Though Raft is powerful, some customers still face constraints given their current application architectures:

Two-data-center (2DC) deployment limitations: Many customers want as much fault tolerance as possible so they can survive the most critical disasters, including black swan events like region failures. While multi-region CockroachDB clusters can survive and recover from these today, Raft requires three or more regions in order to survive a region failure, because a majority of replicas must remain available after the failure. This can be a constraint for customers with only two data centers. With traditional Raft, having only two data centers or regions can therefore prevent you from achieving region resiliency.

Application latency requirements: Raft replication can introduce latency when nodes are distributed across regions, because the algorithm requires a quorum of replicas to acknowledge each write, and those acknowledgments may have to cross regions.
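
The two constraints above come down to majority arithmetic. The following is a minimal sketch, not CockroachDB code: the function names and the round-trip times are hypothetical, and it assumes a simplified model in which the leader waits only for the fastest followers needed to form a majority.

```python
def quorum(replicas: int) -> int:
    """Smallest majority of a Raft group with `replicas` voters."""
    return replicas // 2 + 1


def survives_region_loss(replicas_per_region: list[int]) -> bool:
    """True if a majority remains after losing the most heavily populated region."""
    total = sum(replicas_per_region)
    remaining = total - max(replicas_per_region)
    return remaining >= quorum(total)


def commit_latency_ms(follower_rtts_ms: list[float]) -> float:
    """Approximate write latency seen by the leader (simplified model).

    The leader counts as one vote, so it waits for the fastest
    (quorum - 1) followers to acknowledge the write.
    """
    total = len(follower_rtts_ms) + 1  # followers plus the leader
    needed = quorum(total) - 1
    if needed == 0:
        return 0.0
    return sorted(follower_rtts_ms)[needed - 1]


# Three regions with one replica each keep a majority after a region loss.
print(survives_region_loss([1, 1, 1]))
# Two regions cannot, however the replicas are split between them.
print(survives_region_loss([2, 1]), survives_region_loss([2, 2]))
# Hypothetical round-trip times: followers in the same region vs. in other regions.
print(commit_latency_ms([1.5, 2.0]), commit_latency_ms([62.0, 70.0]))
```

With two regions, whichever region holds the larger share of replicas takes the majority with it when it fails, which is why a single Raft-replicated cluster cannot offer region survivability in a 2DC deployment.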

To address these constraints, we built physical cluster replication (PCR), which became GA in 24.1. PCR enables unidirectional, transactionally consistent replication between CockroachDB clusters. Because PCR supports a primary-standby system between CockroachDB clusters, it is a good choice for disaster recovery, particularly for 2DC customers that want to survive a region or data center outage with low RPO and RTO.

However, while PCR met the needs of some of our 2DC customers, there remained a gap: 2DC customers that wanted both clusters to be active and receive traffic. Many of these customers had geo-partitioned their application logic across two regions, and weren’t ready to rewrite their application for three regions. Other customers wanted to eventually add a third region, or even another cloud for maximum survivability, but had so far invested in two on-premises data centers, and weren’t ready to add a third just yet.

On top of that, we saw a desire to move data between CockroachDB clusters. Moving data between clusters could support a number of use cases, including allowing users to isolate analytical workloads from critical application traffic or seeding test clusters quickly in development environments.

Given all these considerations, LDR was built as a flexible, robust tool to help meet customers where they are, and meet some of the most custom requirements while not compromising on CockroachDB’s foundational differentiators of scalability, resiliency, and consistency.

How does LDR help users accomplish these goals?

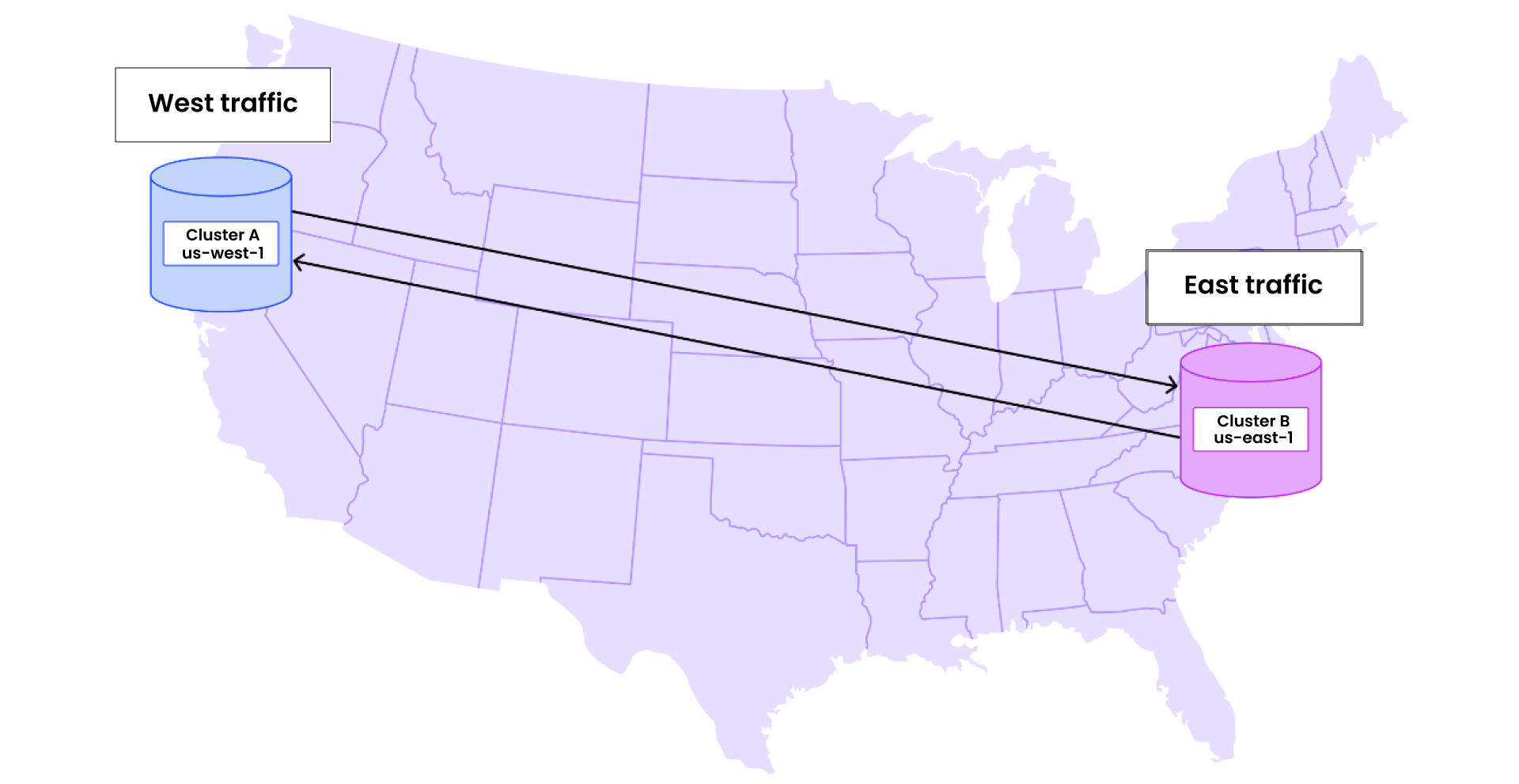

Because LDR replicates between CockroachDB clusters, users looking for high availability and low write latency could set up a system with two, single-region, CockroachDB clusters.

Consider an application whose traffic is distributed across the United States. You could set up two single-region clusters, one in the East (e.g. us-east-1) and one in the West (e.g. us-west-1), to optimize for application traffic locality. Single-region clusters allow users to achieve lower write latency because nodes distributed across availability zones only need to reach consensus within the same region, whereas cross-region consensus incurs higher latency due to the inherent wide area network (WAN) latency.

However, single-region clusters alone do not provide region-level survivability with near-zero RPO and RTO. This is where LDR comes into play. LDR asynchronously replicates data in real time between CockroachDB clusters. In the event of a regional outage in us-west-1, users could redirect their West Coast traffic to the East Coast cluster, providing a high-availability solution.
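
The failover described here can be sketched at the application routing layer. This is an illustrative assumption about how a client might pick a cluster, not part of LDR itself: the `HOME_CLUSTER` mapping and `pick_cluster` function are hypothetical, and health detection is left out.

```python
# Hypothetical mapping from a client's region to its nearest single-region cluster.
HOME_CLUSTER = {"west": "us-west-1", "east": "us-east-1"}


def pick_cluster(client_region: str, healthy: set[str]) -> str:
    """Prefer the client's home cluster; fall back to any other healthy cluster."""
    preferred = HOME_CLUSTER[client_region]
    if preferred in healthy:
        return preferred
    for cluster in HOME_CLUSTER.values():
        if cluster in healthy:
            return cluster
    raise RuntimeError("no healthy cluster available")


# Normal operation: West Coast traffic stays in the West.
print(pick_cluster("west", {"us-west-1", "us-east-1"}))
# Regional outage in us-west-1: West Coast traffic is redirected to the East.
print(pick_cluster("west", {"us-east-1"}))
```

Because replication is asynchronous, the surviving cluster may be missing the most recent writes that had not yet been replicated when the outage began.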

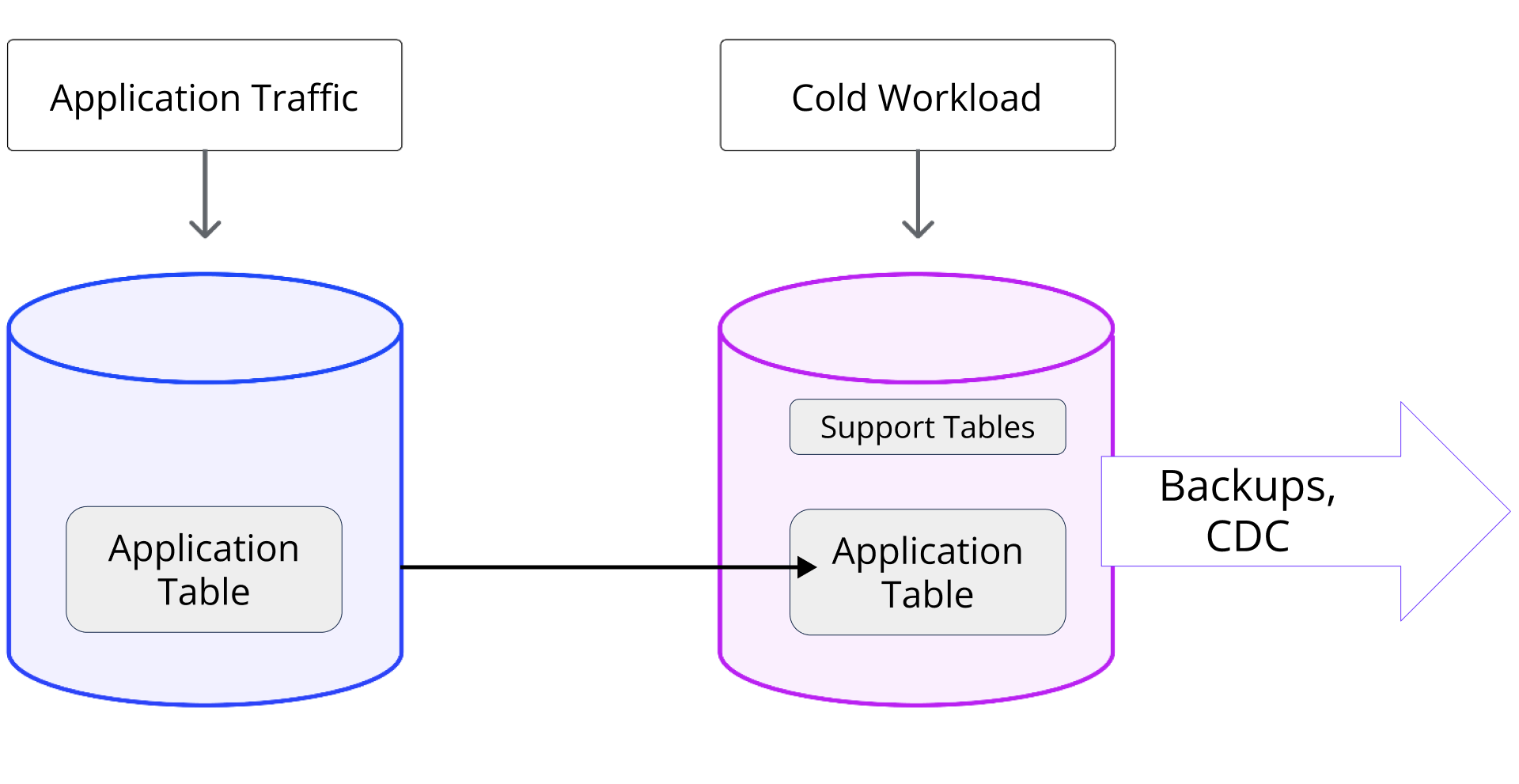

Unlike PCR, which replicates at the cluster level, LDR replicates at the table level, giving users the flexibility to choose specific table subsets within a cluster to replicate. While CockroachDB’s admission control helps maintain cluster performance and availability when some nodes experience high load, users who are particularly sensitive to application foreground latency may prefer to isolate their workloads across clusters. For instance, a "Hot" cluster could handle only the most critical application traffic, replicating into a "Cold" cluster that serves secondary operational workloads. This "Cold" cluster might include additional support tables and run backup and CDC jobs as well. Since LDR enables both clusters to be active and handle traffic simultaneously, users can use this flexibility to optimize their systems effectively across CockroachDB clusters.

How does Cockroach make LDR easy to operate?

LDR is built into the CockroachDB binary and only takes a few SQL statements to start. Because LDR is run as part of CockroachDB’s job infrastructure, users can manage their LDR streams just like they would any other long-running job. On top of that, LDR is integrated with CockroachDB’s elastic admission control, so that primary application traffic is not impacted while LDR replicates your data in the background.

Monitoring LDR is seamless – you can monitor and alert on your LDR streams using your observability tool of choice, whether it’s the DB Console, Prometheus, or other third-party monitoring tools.

Finally, we understand how complex bidirectional replication can become. In LDR, all data looping is managed natively, so if you set up two replication streams for bidirectional replication, LDR will prevent infinite looping between clusters. You might also be wondering about conflict resolution. If an application writes to the same row on both clusters, for example through conflicting UPDATE statements, LDR uses “last write wins” conflict resolution, relying on the MVCC timestamp to determine the winning write.
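
To make loop prevention and “last write wins” concrete, here is a toy model of two clusters exchanging writes. It is a sketch under stated assumptions, not LDR's implementation: the `Write` and `Cluster` classes, the origin tag, the `(wall_time, logical)` timestamp tuple, and the rule that ties keep the local value are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Write:
    key: str
    value: str
    ts: tuple[int, int]  # hypothetical (wall_time, logical) timestamp
    origin: str          # cluster that first accepted the write


@dataclass
class Cluster:
    name: str
    rows: dict[str, Write] = field(default_factory=dict)
    outbox: list[Write] = field(default_factory=list)

    def local_write(self, key: str, value: str, ts: tuple[int, int]) -> None:
        """An application write: stored locally and queued for replication."""
        w = Write(key, value, ts, self.name)
        self.rows[key] = w
        self.outbox.append(w)

    def apply_replicated(self, w: Write) -> bool:
        """Apply a write from the other cluster using last write wins."""
        if w.origin == self.name:
            return False  # our own write coming back: drop it
        current = self.rows.get(w.key)
        if current is not None and current.ts >= w.ts:
            return False  # local version is newer (assumption: ties keep local)
        self.rows[w.key] = w
        return True  # never re-queued in the outbox, so it cannot loop


def replicate(src: Cluster, dst: Cluster) -> None:
    """Drain src's outbox into dst, emulating one replication stream."""
    pending, src.outbox = src.outbox, []
    for w in pending:
        dst.apply_replicated(w)


east, west = Cluster("east"), Cluster("west")
east.local_write("user:1", "from-east", (10, 0))
west.local_write("user:1", "from-west", (12, 0))
replicate(east, west)  # rejected: west already holds a newer timestamp
replicate(west, east)  # applied: the incoming write is newer
print(east.rows["user:1"].value, west.rows["user:1"].value)
```

In this model both clusters converge on the write with the higher timestamp, and replicated writes are never queued for re-replication, so the two streams cannot bounce a row back and forth.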

Getting started with LDR in CockroachDB

Between LDR, PCR, and Raft, Cockroach has options to help you meet your most stringent and custom requirements around application deployments, hardware limitations, and resiliency. Check out the video below for a demo by our technical evangelist Rob Reid:

You can read more in our docs, and we recently published a blog on cross-cluster replication. Get started with CockroachDB today: https://cockroachlabs.cloud/signup.