Change data capture (CDC) is the backbone of modern data platforms: event-driven microservices, real-time analytics, incremental ELT, and operational alerts all depend on a reliable stream of change events.

CockroachDB has long supported CDC with native changefeeds and familiar output formats like JSON and Avro. Now we’re adding Protocol Buffers (Protobuf) as a first‑class serialization option. If your organization standardizes on Protobuf for Kafka and downstream services, you’ll be able to plug CockroachDB in without glue code or format converters.

This post explains why we added Protobuf support, what’s included, how to use it, and a few things to watch out for.

Why Protobuf for changefeeds?

Many engineering orgs have converged on Protobuf as their typed, compact, language‑neutral contract, especially in Kafka‑centric architectures. Today, teams using CockroachDB often translate CDC events (JSON/Avro) into Protobuf to fit the rest of their stack. That adds latency, cost, and operational complexity.

Native Protobuf changefeeds reduce that friction. You get smaller messages than JSON, schema‑backed types, and an easy path to integrate with existing Protobuf consumers.

What’s included

Format options

Protobuf works with the changefeed options you already know: `updated`, `mvcc_timestamp`, `diff` (which adds the `before` image), `key_in_value`, `topic_in_value`, `split_column_families`, `virtual_columns`, and `resolved`. These behave as they do for other formats.

The one exception is `headers_json_column_name`, which is not supported with Protobuf.

Schema delivery

We'll publish an Apache-licensed `.proto` definition of the changefeed message structures in a public repository, so you can import it into your applications. This gives producers and consumers a single, versioned contract.


Quick start: Emit Protobuf from a changefeed

Create a Protobuf changefeed to Kafka with a bare envelope:

```sql
CREATE CHANGEFEED FOR TABLE app.public.users
  INTO 'kafka://broker-1:9092'
  WITH format = 'protobuf', envelope = 'bare';
```

Include resolved timestamps and a wrapped envelope for metadata:

```sql
CREATE CHANGEFEED FOR TABLE app.public.users
  INTO 'kafka://broker-1:9092'
  WITH format = 'protobuf', envelope = 'wrapped', resolved = '10s', mvcc_timestamp;
```

Message shape (at a glance)

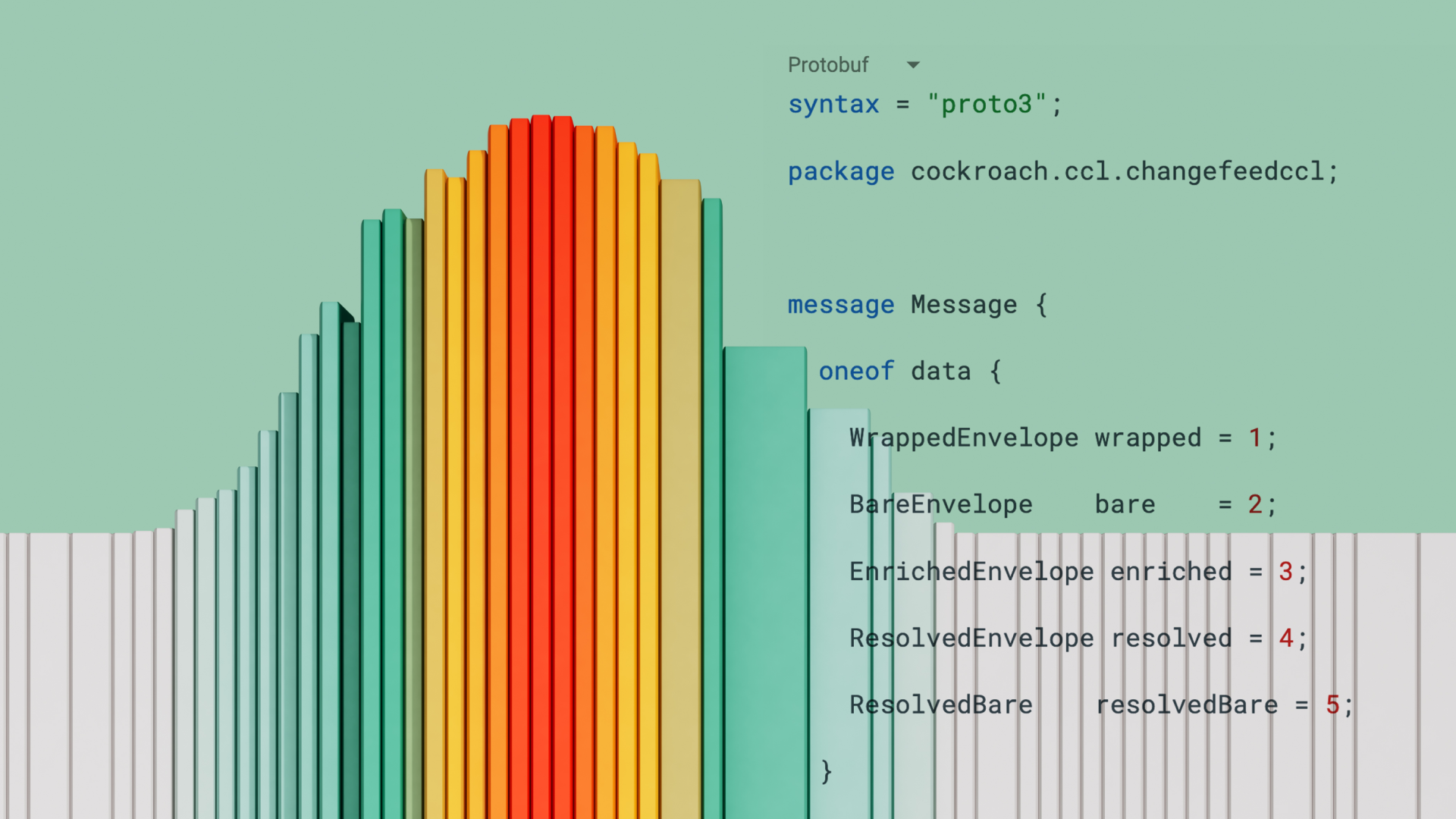

The .proto model defines a common Message wrapper with variants for each envelope type. Here’s a trimmed view (names may evolve slightly):

```proto
syntax = "proto3";

package cockroach.ccl.changefeedccl;

import "google/protobuf/timestamp.proto";

message Message {
  oneof data {
    WrappedEnvelope wrapped = 1;
    BareEnvelope bare = 2;
    EnrichedEnvelope enriched = 3;
    ResolvedEnvelope resolved = 4;
    ResolvedBare resolvedBare = 5;
  }
}

message BareEnvelope {
  map<string, Value> values = 1;  // column name -> typed value
  Metadata __crdb__ = 2;          // optional metadata (when enabled)
}

message WrappedEnvelope {
  Record after = 1;
  Record before = 2;  // optional; populated when the 'diff' option is enabled
  string updated = 4;
  string mvcc_timestamp = 5;
  Key key = 6;
  string topic = 7;
}

message Record { map<string, Value> values = 1; }

message Key { repeated Value key = 1; }  // compound keys supported

message Value {
  oneof value {
    string string_value = 1;
    bytes bytes_value = 2;
    int32 int32_value = 3;
    int64 int64_value = 4;
    float float_value = 5;
    double double_value = 6;
    bool bool_value = 7;
    google.protobuf.Timestamp timestamp_value = 8;
    Array array_value = 9;
    Record tuple_value = 10;
    Decimal decimal_value = 11;
    string date_value = 12;
    string interval_value = 13;
    string time_value = 14;
    string uuid_value = 15;
  }
}

// Trimmed view: EnrichedEnvelope, ResolvedEnvelope, ResolvedBare, Metadata,
// Array, and Decimal are defined in the full .proto file.
```

An enriched envelope adds the operation type (`OP_CREATE`, `OP_UPDATE`, `OP_DELETE`), event timestamps, and a `source` block (cluster and table context) to match Debezium-style consumers.
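To make the `oneof` layout concrete, here is a minimal Python sketch that walks the protobuf wire format by hand. It reports which envelope a `Message` carries and reads a bare envelope's column values. It assumes the field numbers in the trimmed `.proto` above, which may evolve. It handles only the `string_value`, `int32_value`/`int64_value`, and `bool_value` variants of `Value`. The helper names (`decode_message`, `decode_value`, and so on) are hypothetical. A production consumer would use classes generated from the published `.proto` with `protoc` rather than hand-rolled parsing.

```python
# Hypothetical helpers for illustration; real consumers should use protoc-generated classes.
# Field numbers follow the trimmed .proto above and may change in the published file.
ENVELOPES = {1: "wrapped", 2: "bare", 3: "enriched", 4: "resolved", 5: "resolvedBare"}


def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a base-128 varint starting at pos; return (value, next_pos)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def fields(buf: bytes):
    """Yield (field_number, value) for varint and length-delimited fields only."""
    pos = 0
    while pos < len(buf):
        tag, pos = read_varint(buf, pos)
        number, wire_type = tag >> 3, tag & 0x7
        if wire_type == 0:  # varint: int32, int64, bool
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:  # length-delimited: string, bytes, nested message
            length, pos = read_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        else:
            raise ValueError(f"wire type {wire_type} is not handled in this sketch")
        yield number, value


def decode_value(buf: bytes):
    """Decode a Value oneof; only string, int32/int64, and bool are handled here."""
    for number, raw in fields(buf):
        if number == 1:  # string_value
            return raw.decode("utf-8")
        if number in (3, 4):  # int32/int64: negatives arrive as 64-bit two's complement
            return raw - (1 << 64) if raw >= 1 << 63 else raw
        if number == 7:  # bool_value
            return bool(raw)
    return None  # unset or unhandled variant


def decode_message(buf: bytes) -> tuple[str, dict]:
    """Return (envelope_kind, column_values); values are decoded for bare envelopes only."""
    for number, envelope in fields(buf):  # the 'data' oneof holds a single variant
        kind = ENVELOPES.get(number, "unknown")
        values = {}
        if kind == "bare":
            for env_field, entry in fields(envelope):
                if env_field != 1:  # skip __crdb__ metadata (field 2)
                    continue
                parts = dict(fields(entry))  # map entry: key = 1, value = 2
                values[parts[1].decode("utf-8")] = decode_value(parts.get(2, b""))
        return kind, values
    return "empty", {}


# Hand-built Message{bare: {values: {"name": "ada", "id": 42}}}
name_entry = b"\x0a\x04name" + b"\x12\x05\x0a\x03ada"
id_entry = b"\x0a\x02id" + b"\x12\x02\x20\x2a"
bare = b"\x0a" + bytes([len(name_entry)]) + name_entry + b"\x0a" + bytes([len(id_entry)]) + id_entry
sample = b"\x12" + bytes([len(bare)]) + bare
kind, row = decode_message(sample)
```

A `oneof` is encoded as an ordinary field with its own field number. The envelope type can therefore be read from the outer field number alone. For the same reason, renumbering fields in the `.proto` would break existing consumers.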

Envelope guidance

Bare is the simplest and most compact envelope. It suits internal services that don't need before-images.

Wrapped adds the operational metadata most teams want in production: change time, MVCC timestamp, topic, and key.

Enriched (on the roadmap, not yet supported) mirrors Debezium semantics for teams whose existing consumers expect `op`, `source`, and `ts`.

Key-only is designed for workflows that only need the key stream (e.g., cache invalidation).

Important caveat: Protobuf bytes aren’t canonical

If you rely on the serialized bytes being stable (e.g., using the message as a key or hashing the exact payload), Protobuf can surprise you. The same logical message can have more than one valid encoding; for example, map entries may be written in any order. Deterministic encoding options exist in some runtimes, but they are not guaranteed across languages or versions. The safest approach is to avoid treating Protobuf byte streams as canonical identifiers and to prefer explicit key fields. If you must key off bytes, use a format designed for canonical serialization such as JSON.
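The Python sketch below illustrates the problem with map fields, which protobuf does not require serializers to write in any particular order. It hand-encodes the same `Record` twice, with its map entries in two different orders. It uses the field numbers from the trimmed `.proto` above and only `string_value`. The two byte strings differ, yet both decode to the same row. All helpers are hypothetical. `stable_key` follows the advice above: it hashes explicit primary-key values rather than the payload.

```python
import hashlib
import json

# Hypothetical helpers; field numbers follow the trimmed .proto above and may change.


def varint(n: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    out = bytearray()
    while True:
        low, n = n & 0x7F, n >> 7
        if n:
            out.append(low | 0x80)
        else:
            out.append(low)
            return bytes(out)


def field(number: int, payload: bytes) -> bytes:
    """Encode one length-delimited field (wire type 2)."""
    return varint((number << 3) | 2) + varint(len(payload)) + payload


def encode_record(columns: list[tuple[str, str]]) -> bytes:
    """Encode Record{map<string, Value> values = 1}, writing entries in the given order."""
    out = b""
    for name, text in columns:
        value = field(1, text.encode("utf-8"))                    # Value.string_value = 1
        entry = field(1, name.encode("utf-8")) + field(2, value)  # map entry: key = 1, value = 2
        out += field(1, entry)                                    # Record.values = 1
    return out


def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a varint at pos; return (value, next_pos)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def length_delimited_fields(buf: bytes):
    """Yield (field_number, payload); every field in this sketch is wire type 2."""
    pos = 0
    while pos < len(buf):
        tag, pos = read_varint(buf, pos)
        length, pos = read_varint(buf, pos)
        yield tag >> 3, buf[pos:pos + length]
        pos += length


def decode_record(buf: bytes) -> dict[str, str]:
    """Decode a Record produced by encode_record back into {column: text}."""
    row = {}
    for _, entry in length_delimited_fields(buf):
        parts = dict(length_delimited_fields(entry))
        value = dict(length_delimited_fields(parts[2]))
        row[parts[1].decode("utf-8")] = value[1].decode("utf-8")
    return row


def stable_key(primary_key: list[str]) -> str:
    """Hash explicit primary-key values instead of the serialized payload."""
    canonical = json.dumps(primary_key, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Same logical row, map entries written in two different (equally valid) orders.
a = encode_record([("id", "42"), ("email", "ada@example.com")])
b = encode_record([("email", "ada@example.com"), ("id", "42")])
row_a, row_b = decode_record(a), decode_record(b)
```

Here `a != b` while `row_a == row_b`. A hash of the raw payload would therefore treat one row as two different keys. `stable_key([row_a["id"]])` yields the same value for both encodings.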

We plan to document best practices and, in the future, to explore separate key and value encoders so you can choose a different encoding for keys when stability is critical.

Who this is for

Teams standardizing on Protobuf in Kafka who want to drop CockroachDB changefeeds straight into existing pipelines.

Platform groups looking to eliminate JSON-to-Protobuf transformation services and reduce operational surface area.

Organizations that are migrating to CockroachDB from systems where Protobuf is pervasive and that want minimal downstream change.

Try it out

Spin up a test cluster, point a changefeed at Kafka or cloud storage, and set `WITH format = 'protobuf'`. From there, import the `changefeedpb.proto` file into your consumer and start decoding messages natively.

If your team is moving to CockroachDB and already runs on Protobuf, we’d love to help you test this and shape the remaining envelopes. Reach out and we’ll make sure you get what you need.