In Back to the Future, messing with the wrong wires could change the timeline. In cloud infrastructure, messing with the wrong permissions can do just as much damage. That’s why Role-Based Access Control (RBAC) is a cornerstone of secure, scalable platform design.

What is RBAC? It’s a structured way to manage access by assigning roles instead of granting individual permissions. When applied across multiple clouds and Kubernetes clusters, this approach ensures engineers get just the access they need — no more, no less.

For managed Kubernetes, RBAC is enforced both at the cloud provider level and within the cluster itself, via Identity and Access Management (IAM) policies, ClusterRoles, and ClusterRoleBindings. This layered model lets platform teams maintain tight control over access while still enabling developers and SREs to get work done efficiently. The trick is aligning these layers in a way that’s secure, scalable, and doesn’t require jumping through flaming hoops every time someone needs temporary admin creds.

CockroachDB Cloud runs customer workloads on AKS, EKS, and GKE. To make our production cloud projects more secure and to prevent accidents on these clusters, no individual engineer, including members of the Cockroach Labs SRE and Platform teams, has Admin access by default.

Instead, engineers are granted read-only credentials, both in the cloud console and when accessing the K8s cluster via kubeconfig. When Admin credentials are required during an incident, they are requested just-in-time (JIT) through the Lumos autonomous identity platform and granted for a fixed period of time, which gives the engineer full access to manage the cluster infrastructure for that window.
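To make the just-in-time idea concrete, here is a minimal sketch of a time-boxed elevation. It is not the Lumos API: `Grant`, `request_admin`, and `effective_role` are hypothetical names, and the one-hour window is an assumed value chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class Grant:
    """A temporary role elevation for one engineer (hypothetical model)."""
    user: str
    role: str
    expires_at: datetime


def request_admin(user: str, now: datetime,
                  duration: timedelta = timedelta(hours=1)) -> Grant:
    """Issue an Admin grant that expires after a fixed window (assumed: 1 hour)."""
    return Grant(user=user, role="admin", expires_at=now + duration)


def effective_role(grant: Optional[Grant], now: datetime) -> str:
    """Return the elevated role while the grant is live, else the read-only default."""
    if grant is not None and now < grant.expires_at:
        return grant.role
    return "reader"


start = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
grant = request_admin("alice", now=start)
print(effective_role(grant, start + timedelta(minutes=30)))  # inside the window
print(effective_role(grant, start + timedelta(hours=2)))     # after expiry
```

The point of the model is that nothing has to revoke the elevation: once the window closes, the engineer falls back to the read-only default.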

In this article, we’ll explore how we implemented the above RBAC across AWS, GCP, and Azure, while also ensuring that engineers can access Kubernetes clusters programmatically via kubeconfig.

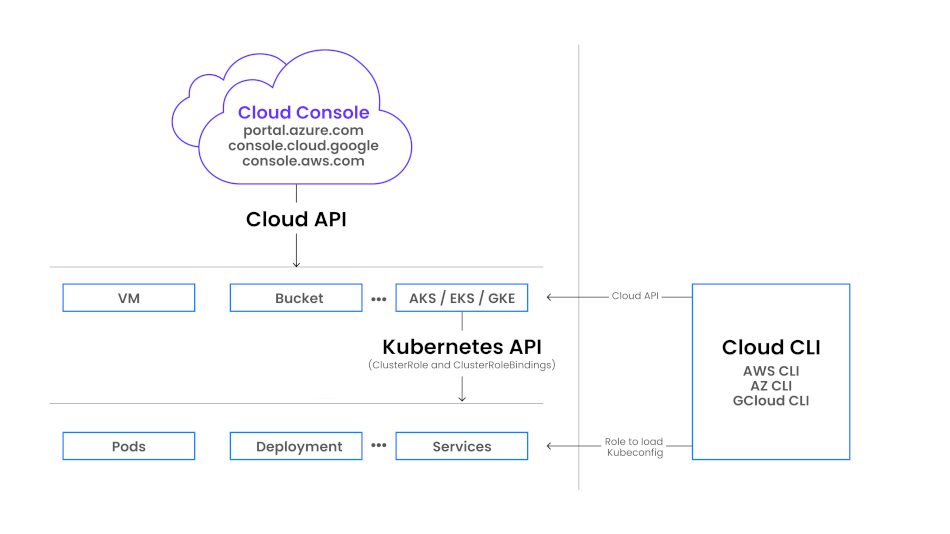

Understanding the Layers of Access

There are two key authorization layers in the context of Kubernetes on cloud:

Cloud Resource Level (Cloud RBAC): Controls access to cloud resources (e.g., the ability to list clusters or look at instances).

Kubernetes API Level (K8s RBAC): Controls access to Kubernetes resources (pods, services, etc.) via ClusterRoles and ClusterRoleBindings.

To connect these layers, users need to be able to load and authenticate kubeconfigs via CLI tools. This link requires specific permissions at both the Cloud Provider and Kubernetes RBAC levels.

The RBAC authorization layers for CockroachDB Cloud Console and Cloud CLI.

Common Steps Across Cloud Providers

For all three cloud providers, the high-level process is as follows:

Grant Reader access at the cloud project/account level.

Set Reader permissions at the Kubernetes API level.

Grant permission to load and authenticate the kubeconfig.
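The third step is where the providers differ most visibly, because each has its own CLI call for loading a kubeconfig. The sketch below only builds the command lines and does not run them. The AWS and GCP forms are the ones used later in this article; the `az aks get-credentials` form does not appear in the article and is included as the Azure CLI counterpart. The function name `kubeconfig_command` and its keyword arguments are hypothetical.

```python
from typing import List


def kubeconfig_command(provider: str, cluster: str, **scope: str) -> List[str]:
    """Build (but do not run) the CLI call that loads a kubeconfig."""
    if provider == "aws":
        return ["aws", "eks", "update-kubeconfig",
                "--region", scope["region"], "--name", cluster]
    if provider == "gcp":
        return ["gcloud", "container", "clusters", "get-credentials", cluster,
                "--zone", scope["zone"], "--project", scope["project"]]
    if provider == "azure":
        return ["az", "aks", "get-credentials",
                "--resource-group", scope["resource_group"], "--name", cluster]
    raise ValueError(f"unknown provider: {provider}")


# Example values below are placeholders, not real clusters.
print(" ".join(kubeconfig_command("aws", "demo", region="us-east-1")))
print(" ".join(kubeconfig_command("azure", "demo", resource_group="rg")))
```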

AWS (Amazon Web Services)

In AWS, we use the assume-role flow. A role named ClusterReader is created to encapsulate both cloud-level and Kubernetes-level read permissions.

Step 1: Grant Console/CLI Reader Access

To view resources in the AWS console and CLI, use the AWS managed policy ViewOnlyAccess.

Step 2: Kubernetes ClusterRole and ClusterRoleBinding

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: cluster-reader
    rules:
      - apiGroups: ["*"]
        resources: ["*"]
        verbs: ["get", "list", "watch"]
      - nonResourceURLs: ["*"]
        verbs: ["get", "list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: cluster-reader-role-binding
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-reader
    subjects:
      - apiGroup: rbac.authorization.k8s.io
        kind: User
        name: ClusterReader

Note: the User name (ClusterReader) above must match the username that the reader IAM role is mapped to in the aws-auth ConfigMap. In this setup, that username is the same as the IAM role name.
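To see what the cluster-reader rules permit, here is a simplified sketch of how a resource rule is matched against a request. It is an approximation for illustration, not the Kubernetes authorizer: it ignores resourceNames, subresources, and nonResourceURLs, and the helper names `_matches` and `is_allowed` are hypothetical.

```python
from typing import Dict, List

# Resource rule taken from the cluster-reader ClusterRole above.
CLUSTER_READER_RULES: List[Dict[str, List[str]]] = [
    {"apiGroups": ["*"], "resources": ["*"], "verbs": ["get", "list", "watch"]},
]


def _matches(allowed: List[str], value: str) -> bool:
    """A rule field matches on an exact entry or on the "*" wildcard."""
    return "*" in allowed or value in allowed


def is_allowed(rules: List[Dict[str, List[str]]],
               verb: str, api_group: str, resource: str) -> bool:
    """Simplified RBAC check: a request is allowed if any one rule matches all fields."""
    return any(
        _matches(rule["verbs"], verb)
        and _matches(rule["apiGroups"], api_group)
        and _matches(rule["resources"], resource)
        for rule in rules
    )


print(is_allowed(CLUSTER_READER_RULES, "list", "", "pods"))               # read verb
print(is_allowed(CLUSTER_READER_RULES, "delete", "apps", "deployments"))  # write verb
```

Because the rule uses wildcards for API groups and resources, read access extends to every resource type in the cluster, Secrets included.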

Step 3: Load Kubeconfig via CLI

To load a kubeconfig, the following IAM permissions must be added to the role:

    eks:DescribeCluster
    eks:ListClusters

Loading the kubeconfig:

    aws eks update-kubeconfig --region <region-code> --name <cluster-name>

Additionally, to authenticate with the Kubernetes API, the IAM role must be mapped in the aws-auth ConfigMap:

    apiVersion: v1
    data:
      mapRoles: |
        - rolearn: arn:aws:iam::<account-id>:role/ClusterReader
          username: ClusterReader
    kind: ConfigMap
    metadata:
      name: aws-auth
      namespace: kube-system

The IAM role name maps to a Kubernetes username, which is then referenced in the ClusterRoleBinding.
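The chain of lookups can be sketched as three small tables. This is a toy model of the mapping, not how EKS implements it. The account ID `111122223333` is a placeholder and `resolve_verbs` is a hypothetical helper.

```python
from typing import Dict, List, Optional

# aws-auth mapRoles: IAM role ARN -> Kubernetes username (placeholder account ID).
MAP_ROLES: Dict[str, str] = {
    "arn:aws:iam::111122223333:role/ClusterReader": "ClusterReader",
}
# ClusterRoleBinding subjects: Kubernetes username -> ClusterRole name.
BINDINGS: Dict[str, str] = {"ClusterReader": "cluster-reader"}
# ClusterRoles: role name -> allowed verbs.
CLUSTER_ROLES: Dict[str, List[str]] = {"cluster-reader": ["get", "list", "watch"]}


def resolve_verbs(role_arn: str) -> Optional[List[str]]:
    """Follow IAM role -> aws-auth user -> binding -> ClusterRole.

    Returns None as soon as any link in the chain is missing.
    """
    username = MAP_ROLES.get(role_arn)
    cluster_role = BINDINGS.get(username) if username else None
    return CLUSTER_ROLES.get(cluster_role) if cluster_role else None


print(resolve_verbs("arn:aws:iam::111122223333:role/ClusterReader"))
print(resolve_verbs("arn:aws:iam::111122223333:role/Unmapped"))
```

A role that is missing from any one table resolves to no access at all, which is why the username in the ConfigMap and the subject name in the ClusterRoleBinding have to agree.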

Auth Propagation

IAM Role name → aws-auth user → ClusterRoleBinding → ClusterRole

GCP (Google Cloud Platform)

GCP has the most seamless integration of the three providers.

Step 1: Assign Cloud Role

Assign the built-in GCP role roles/viewer, which already includes permissions to read Kubernetes API resources.

    gcloud iam roles describe roles/viewer

Step 2: Verify Kubernetes Permissions

This role includes container-level permissions such as:

    gcloud iam roles describe roles/viewer | grep container

You’ll see entries like container.pods.get, container.pods.list, etc.

Step 3: Load Kubeconfig

To load the kubeconfig, users need one additional permission:

container.clusters.getCredentials

Once assigned, users can run:

    gcloud container clusters get-credentials <cluster-name> --zone <zone> --project <project-id>

No additional Kubernetes RBAC configuration is required; access is fully governed by GCP IAM roles.
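As a quick illustration of the gap described in Step 3, the sketch below compares a set of granted permissions with what a reader needs. Only the permission names mentioned in this section are used, and roles/viewer carries many more. `missing_permissions` and the sample grant are hypothetical.

```python
from typing import Iterable, Set

# Permissions named in this section; the real role contains many more.
READ_PERMISSIONS: Set[str] = {"container.pods.get", "container.pods.list"}
KUBECONFIG_PERMISSION = "container.clusters.getCredentials"


def missing_permissions(granted: Iterable[str]) -> Set[str]:
    """Return which of the required permissions are absent from `granted`."""
    required = READ_PERMISSIONS | {KUBECONFIG_PERMISSION}
    return required - set(granted)


# Sample grant holding only the pod read permissions.
pod_read_only = ["container.pods.get", "container.pods.list"]
print(missing_permissions(pod_read_only))
print(missing_permissions(pod_read_only + [KUBECONFIG_PERMISSION]))
```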

Azure (AKS - Azure Kubernetes Service)

Azure requires setting up permissions at both the Azure RBAC and Kubernetes RBAC levels, similar to AWS.

Step 1: Assign Azure Roles

For reading cloud resources, there is a built-in role: Reader.

Step 2: Define Kubernetes ClusterRole and Binding

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: cluster-reader
    rules:
      - apiGroups: ["*"]
        resources: ["*"]
        verbs: ["get", "list", "watch"]
      - nonResourceURLs: ["*"]
        verbs: ["get", "list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: cluster-reader-role-binding
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-reader
    subjects:
      - kind: Group
        apiGroup: rbac.authorization.k8s.io
        name: <Entra-Reader-Group-Object-ID>

Note: users who are members of the reader group above are allowed read-only access to the cluster.

Step 3: Load Kubeconfig

To allow the user to load the kubeconfig, one additional built-in role must be assigned: Azure Kubernetes Service Cluster User Role.

As we saw, AWS uses the aws-auth ConfigMap for user authentication. In Azure, this can be offloaded to Entra by enabling the AKS-Entra integration.

To enable it on an existing AKS cluster:

    az aks update \
      --resource-group <resource-group> \
      --name <cluster-name> \
      --enable-aad \
      --aad-admin-group-object-ids <id-1>,<id-2> \
      [--aad-tenant-id <tenant-id>]

Members of the groups passed to --aad-admin-group-object-ids get full control of the cluster.

This allows Entra group members to authenticate and map into Kubernetes users/groups as per the ClusterRoleBindings.

Consistent RBAC Strategy = Security and Stability

Navigating access controls across AWS, GCP, and Azure can feel like jumping timelines: Each cloud provider has its own take on permissions, roles, and Kubernetes integrations.

By applying a consistent RBAC strategy, however, we can ensure a secure and scalable foundation that keeps engineers productive without sacrificing operational safety. The key is mapping cloud IAM roles cleanly to Kubernetes access, and establishing JIT workflows for temporary privilege elevation when needed.

We’ve summarized the main RBAC components across providers in the chart below. Whether you’re implementing access controls for the first time or just need to double-check which role unlocks what in your cloud setup, use this chart as a quick reference for implementing and comparing RBAC across cloud providers.
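The same components can also be written down as a small lookup structure. The sketch below is assembled only from the steps in this article and is not an exhaustive permissions reference. The key names (`cloud_read`, `k8s_read`, `kubeconfig`) and the `render` helper are hypothetical.

```python
from typing import Dict

# Per-provider components, taken from the steps described in this article.
SUMMARY: Dict[str, Dict[str, str]] = {
    "AWS": {
        "cloud_read": "ViewOnlyAccess (AWS managed policy)",
        "k8s_read": "ClusterRole cluster-reader bound to User ClusterReader",
        "kubeconfig": "eks:DescribeCluster, eks:ListClusters, aws-auth mapping",
    },
    "GCP": {
        "cloud_read": "roles/viewer",
        "k8s_read": "covered by roles/viewer",
        "kubeconfig": "container.clusters.getCredentials",
    },
    "Azure": {
        "cloud_read": "Reader (built-in role)",
        "k8s_read": "ClusterRole cluster-reader bound to an Entra group",
        "kubeconfig": "Azure Kubernetes Service Cluster User Role",
    },
}


def render(summary: Dict[str, Dict[str, str]]) -> str:
    """Format the summary as indented plain text, one provider per block."""
    lines = []
    for provider, layers in summary.items():
        lines.append(provider)
        for layer, value in layers.items():
            lines.append(f"  {layer}: {value}")
    return "\n".join(lines)


print(render(SUMMARY))
```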

Ready to get hands-on with secure access controls in the cloud? Spin up a free CockroachDB cluster today.

Vishal Jaishankar is an SRE at Cockroach Labs.