Transaction isolation levels once ruined my kids’ Christmas.

Every year my kids make a “Santa Shopping” list of their most desired holiday gifts. In December 2022, only a single item was on that list: Sony’s long-awaited PlayStation5 gaming console.

PS5s were impossible to get. They sold out online instantly. Desperate shoppers camped outside retail stores hoping to score one of the coveted consoles, which Sony released at unannounced intervals.

No way I was going to let Santa down. I spent nights and weekends (and, I’ll admit, more than a few weekday work hours) obsessively trying to track down a PS5, with absolutely zero luck. December 25th was but a week away when I finally got a hot tip: Costco.com just dropped PlayStation bundles! Within 60 seconds I had logged onto the site and put one in my shopping basket, thrilled at my incredible luck. Then I tried to check out. Purchase cannot be completed. Item out of stock. 💔

Costco’s inventory management database was not updating in real time to accurately reflect product availability. And I was left yelling at my screen in helpless frustration.

This, friends, is why transaction isolation levels matter.

What are transaction isolation levels?

Transaction isolation levels are how SQL databases solve data reading problems in concurrent transactions. That is, when one transaction reads the same data that another transaction is simultaneously changing. Like when thousands of shoppers try to access Costco’s suddenly-added PlayStation inventory all at the same time — and the database allows Costco.com shoppers to add a PS5 to their cart as if it is an in-stock item, when they are actually completely sold out.

Isolation levels, then, determine how much of your transaction is affected by other concurrent transactions. The higher the isolation level, the less affected it is. But there is a tradeoff between data accuracy and performance: higher levels of isolation give higher accuracy, but possibly at a slower speed.

There are four possible isolation levels defined in the ANSI SQL standard. These levels are designed to balance the need for concurrency and performance with the need for consistency and accuracy. Thus, any SQL-based RDBMS — whether it’s on-prem Oracle, a cloud-based Postgres service, or a fully distributed cloud native SQL database — will provide the same isolation options, even if the RDBMSs themselves work very differently.

SQL isolation levels, explained

The four isolation levels defined in the SQL standard, in increasing order of isolation attained for a given transaction, are READ UNCOMMITTED, READ COMMITTED, REPEATABLE READ, and SERIALIZABLE.

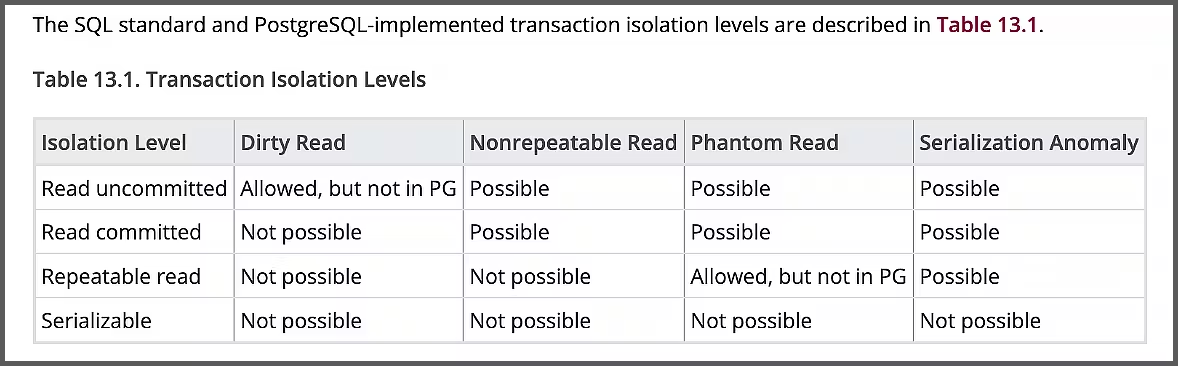

The table below, from PostgreSQL 16, shows the SQL standard isolation levels — and any data anomalies they may allow — as implemented in Postgres. Just about every other relational database has something similar in their documentation.

In general, the rule of thumb is to use the lowest level that gives your application the consistency it needs. Here’s a rundown of each of the four isolation levels, and which use cases are a good match.

READ UNCOMMITTED

`READ UNCOMMITTED` is the weakest level of isolation in a database. Few modern databases even implement it. It’s still worth a quick look, though, because the problems that occur with weakly isolated transactions make it clear why higher levels of isolation are needed.

READ UNCOMMITTED is level 0 locking, which means that no shared locks are issued and no exclusive locks are honored. This isolation level allows dirty reads, i.e., where a transaction may see uncommitted changes made by some other transaction. This means values in the data can be changed and rows can appear or disappear in the data set before the transaction completes.

The tl;dr: In general, don’t use READ UNCOMMITTED. The one and only time it ever makes sense is when you are reading data that will never be modified in any way. For example, if you need to scan a large volume of data to generate high-level analytics or summaries, and absolute moment-of-query accuracy is not critical.

READ COMMITTED

READ COMMITTED is the weakest isolation level implemented by most databases. It is the default isolation level in most Postgres databases, and older SQL databases in general.

This level of isolation allows the transaction to see different versions of the data in different statements. READ COMMITTED restricts the reader from seeing any intermediate, uncommitted, ‘dirty’ read during a transaction and guarantees that any data read was committed at the moment it was read.

This is what makes READ COMMITTED a popular default option, because this effectively eliminates most transaction retry errors (when a transaction cannot be completed and so is aborted and tried again). In most cases where a transaction with a higher level of isolation would return a retry error, a READ COMMITTED transaction can retry only the current statement — and this retry can be handled invisibly on the server.

READ COMMITTED is a good choice if:

You are migrating an application that was originally developed for a different database that used this isolation level.

It is difficult to modify the application to handle transaction retry errors

Consistently low latency is important for the application and full transaction retries are too expensive.

One use case example where READ COMMITTEDis the best option would be a retail application that displays the available inventory of products. It’s a good balance between consistency and concurrency in situations where it’s important to see only committed data (so, no dirty reads) but minor inconsistencies between reads (like non-repeatable reads) are acceptable. When displaying available inventory to customers, most ecommerce applications can afford momentary slight inaccuracies caused by concurrent updates, as long as the data is not completely out of date or showing uncommitted data.

It is important to note that READ COMMITTED makes no promise whatsoever that, if the transaction re-issues the read, it will find the same data. In this level of isolation, data is free to change in the interval after it was read but before the transaction completes — leading to the database anomaly known as nonrepeatable reads.

REPEATABLE READ

REPEATABLE READ is an intermediate level of isolation that inherits some of the drawbacks of both its weaker and stronger neighbors. As on the weaker side of the isolation spectrum, explicit locks (including SELECT FOR UPDATE) are still sometimes required to prevent data anomalies. And, as in the stronger isolation levels, application-visible transaction retry errors can occur. So why would you ever use it?

Because REPEATABLE READ is the ideal isolation level for read-only transactions.

Using the lower isolation level of READ COMMITTED for a series of read-only statements is inefficient, because it will essentially run each of those statements in their own individual, single-statement transaction. SERIALIZABLE isolation is also inefficient because it will do extra work to detect read/write conflicts that cannot possibly exist in a read-only transaction.

So, for example, REPEATABLE READ would be a good choice for a financial app that calculates the total balance of a user’s accounts. This isolation level ensures that if a row is read twice in the same transaction, it will return the same value each time, preventing the nonrepeatable read anomaly mentioned above. In this case, when calculating a user’s total balance, REPEATABLE READ guarantees that the balance does not change during the calculation process due to concurrent updates.

Again: REPEATABLE READ is your friend in read-only transactions. For read-write transactions, though, choose either serializable or read committed isolation levels.

SERIALIZABLE

SERIALIZABLEis the highest level of isolation (and the default isolation level in CockroachDB). Transactions are completely isolated from each other, effectively serializing access to the database to prevent dirty reads, non-repeatable reads, and phantom reads.

The good news is that it prevents any and all isolation anomalies: SERIALIZABLEisolation provides a rigorous guarantee that each transaction sees a wholly consistent view of the database, even when there are concurrent transactions. The not-so-good news is that, since it is the most conservative in detecting potential interference between transactions, this isolation produces more transaction retry errors…And the cost of redoing complex transactions can be significant.

Still, you go SERIALIZABLE when your application’s transaction logic is sufficiently complex that using READ COMMITTED could result in anomalies. SERIALIZABLE isolation is absolutely necessary when a transaction executes several successive commands that all must see identical views of the database.

SERIALIZABLE is a good choice if:

Data accuracy is paramount and you don’t want to risk anomalies due to missing

FOR UPDATElocks.You are able to use abstractions in your application that let you avoid repeating the retry loop code throughout the app.

You have a retry loop at another level that obviates the need for retries in the app server. For example, a mobile app will often retry failed API calls to cover for flaky networks, and this retry can also cover issues related to serializability.

A typical use case for when SERIALIZABLE is the best option would be a banking or financial payments system that processes transactions that transfer money between accounts.

As the highest level of isolation, it’s best suited for scenarios requiring strict consistency and where the integrity of each transaction is critical. After all, when transferring money between accounts, it’s essential that the amount that leaves Account A arrives intact at Account B with no other transactions interfering. Data anomalies are never good, but they are 10x worse when causing problems like incorrect or missing funds in your bank account.

Why transaction isolation levels matter

From the chaos of online shopping frenzies on platforms like Costco.com to the meticulous calculations in financial applications, the choice of isolation level directly impacts your users’ experience. Every transactional database needs to establish a balance between accuracy and performance, and isolation levels have a direct — though often unrecognized — impact on both.

Unfortunately, builders rarely think about their application in terms of isolation levels. In practice, most people just run with the defaults until they hit a problem, then they consider moving up or down one step. However, starting out with the best-fit isolation level for your app is no more difficult than simply going with whatever the built-in default is.

The practical developer understands the tradeoffs for each of the isolation levels. They choose in part for the anomalies the various levels prevent or allow, but more importantly for the impact the isolation level will have on their application: Higher isolation levels ensure data consistency, with a potential performance cost due to increased conflict and retry rates. Conversely, lower isolation levels like READ UNCOMMITTED prioritize performance and concurrency at the expense of data accuracy, allowing phenomena such as dirty reads.

Using lower isolation levels safely requires careful use of explicit locks, while high isolation levels may require transaction retries to prevent anomalies. This simple decision framework will help you choose the right isolation level for your application. And maybe even someday help save a few Christmases when the PlayStation 6 gets released.