This article is the latest in “Mainframe to Distributed SQL”, an original series from Cockroach Labs exploring the fundamentals of mainframes and the evolution of today’s distributed database architecture. Please visit here for the other installments:

As businesses increasingly demand scalability, resilience, and flexibility, transitioning from traditional centralized systems to distributed architectures has become a critical strategic move.

Distributed architectures offer unparalleled advantages, including the ability to handle massive workloads, ensure high availability, and support seamless global operations. However, making this transition requires thoughtful planning, robust strategies, and a deep understanding of how to adapt existing systems for a distributed model.

This article explores:

The key aspects of transitioning to a distributed architecture

Focusing on strategies for migrating mainframe databases

Overcoming common challenges, and leveraging the full potential of distributed systems

Whether you're looking to modernize legacy infrastructure, enable cloud adoption, or scale operations to meet growing demands, you’ll learn insights to help you navigate the journey toward a distributed, future-ready architecture.

Mainframe database migration approaches: comparing strategies for distributed database adoption

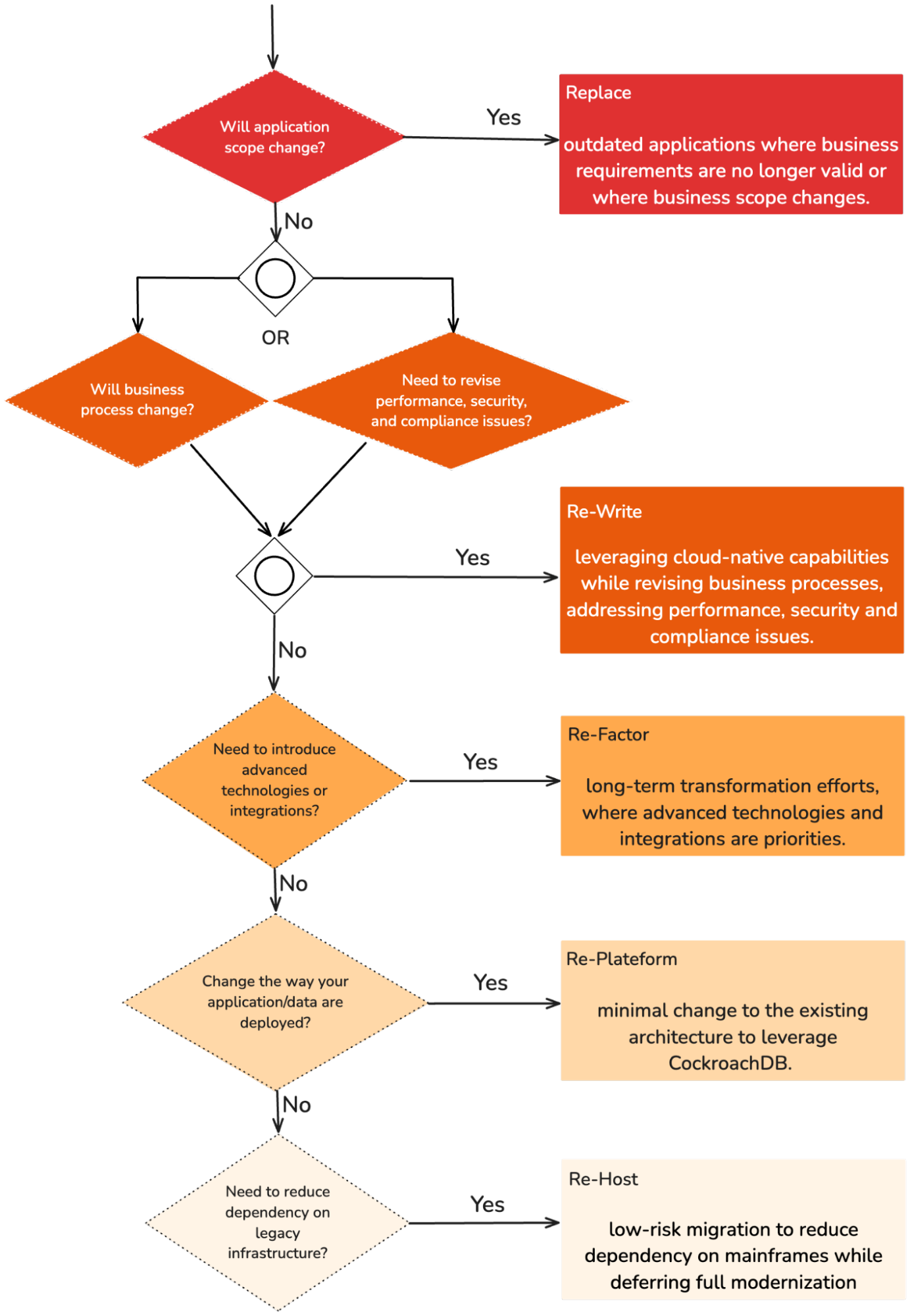

Migrating mainframe databases to modern distributed systems is a critical step in achieving scalability, cost-efficiency, and agility. However, the approach to migration significantly impacts the complexity, timeline, and ultimate success of the transition. This section compares common migration strategies such as rehosting, replatforming, refactoring, and rewriting (aka, rebuilding), then examines their implications for adopting distributed databases.

Rehosting migration

What is rehosting? The rehosting or "lift-and-shift" migration approach involves moving an existing legacy application and its associated data from mainframe to a modern infrastructure (e.g. cloud) with minimal changes to its architecture or codebase. This approach solves the issue of expensive maintenance by moving the system from its legacy hardware to modern hosting solutions.

If for some reason your data needs to be hosted on-premises, you can move from the old mainframes to more modern hardware. This can resolve the issue of low performance as well as expensive maintenance without changing employees’ working environment.

For instance, this approach often focuses on replicating the on-premises environment within the cloud infrastructure, enabling organizations to migrate quickly without extensive redevelopment efforts.

The approach emphasizes infrastructure replication, where virtual machines, servers, and configurations are mirrored in the cloud, allowing for a seamless transition while preserving the application's existing functionality.

Despite its benefits, rehosting also has several disadvantages that organizations should consider. One major drawback is that applications designed for on-premises environments may not fully utilize cloud-native features, such as auto-scaling, serverless capabilities, or managed services, leading to suboptimal cloud utilization. This can result in higher operating costs, as resource usage may not be optimized for the cloud environment.

Additionally, performance improvements are often limited since the application is not re-architected to leverage cloud-specific capabilities. Dependency challenges may also arise, particularly if the application relies on legacy systems that are difficult to replicate or integrate in the cloud. These limitations can impact the long-term efficiency and cost-effectiveness of the rehosting approach.

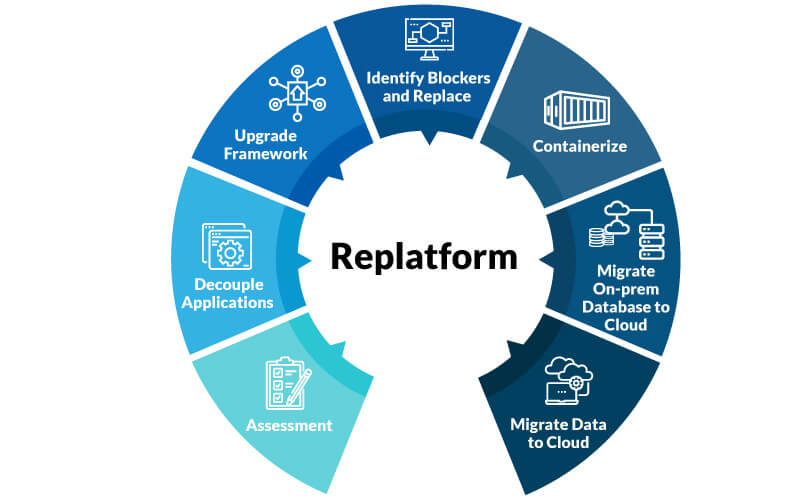

Replatforming migration

What is replatforming? The replatforming approach involves moving the mainframe database to a modern environment with minimal changes to the database structure or application logic while making targeted optimizations. The goal is to replicate the mainframe’s functionality in a new infrastructure, often cloud-based or distributed. This may include shifting from hierarchical or network database models to relational or NoSQL systems, adjusting the schema, or modifying application queries for improved compatibility.

Adopting distributed databases has significant implications for organizations transitioning from legacy systems. While minimal optimization during replatforming can leave some limitations of the legacy architecture intact, strategic modifications to schemas and applications can vastly improve integration with distributed systems. These optimizations enable organizations to leverage key distributed database features, such as data partitioning, replication, and failover, enhancing scalability, reliability, and overall system resilience in modern environments.

Migrating to distributed databases through the replatforming approach offers several advantages, making it an appealing choice for organizations.

It delivers significant performance gains by enhancing efficiency with minimal structural changes, ensuring a faster migration process by avoiding extensive modifications. This approach is also cost-effective, requiring a lower upfront investment compared to more complex methods.

By reducing vendor lock-in, it frees organizations from dependence on proprietary mainframe technologies.

Additionally, retaining the existing business logic minimizes operational disruption, lowering the risks associated with migration.

With a moderate cost and complexity, replatforming strikes a balance between modernization efforts and a manageable migration scope, making it a practical solution for many enterprises.

Common changes made during application replatforming include exposing service methods as microservices or macrosystems, identifying and addressing code blockers that are incompatible with cloud environments by replacing them with optimized, cloud-compatible alternatives, and migrating on-premise databases to distributed databases when necessary.

These adjustments enable the use of essential cloud features, such as auto-dynamic scaling and auto-scaling, which enhance performance and resource efficiency. This approach strikes a balance by overcoming the time-intensive nature of rewriting and the feature limitations associated with rehosting, allowing organizations to leverage the full potential of cloud environments efficiently.

While replatforming to a distributed architecture offers numerous benefits, it has its own challenges. Limited modernization can leave existing inefficiencies and technical debt intact, hindering the full realization of potential improvements. There is also a risk of downtime during migration, which can disrupt operations if not carefully managed.

Compatibility issues may arise, as some features or configurations of legacy databases may not align seamlessly with distributed environments. Additionally, the process requires technical expertise, as modifying queries and adapting architecture demand skilled personnel to ensure a smooth transition. Addressing these challenges is crucial to maximizing the success of this kind of migration.

Refactoring migration

What is refactoring? Refactoring refers to the process of improving the internal structure or design of existing code without altering its external behavior. The focus is on code-level changes, such as improving readability, removing redundancies, or optimizing performance.

For the scope of this article, when we talk about refactoring mainframe applications, we mean redesigning the applications to fully leverage the capabilities of a distributed system. This approach often requires a fundamental shift from legacy database models to modern distributed database architectures like CockroachDB and involves breaking down a legacy, monolithic application into a series of smaller and independent components, and transitioning to a microservice architecture. It can also involve migrating the application to a serverless architecture.

Adopting distributed databases using this approach unlocks their full potential, offering powerful capabilities such as horizontal scaling, fault tolerance, and geo-distribution. These features enable organizations to build highly resilient and scalable systems that can seamlessly handle growing workloads and distributed operations. Furthermore, distributed databases facilitate integration with modern applications and emerging technologies, including artificial intelligence and machine learning, positioning organizations to innovate and thrive in a rapidly evolving technological landscape.

Refactoring is one of the most demanding migration strategies in terms of time and cost, but it offers unparalleled benefits when fully leveraging the advantages of distributed cloud computing. This approach significantly enhances performance, responsiveness, and availability, while reducing technical debt and increasing system flexibility.

By embracing refactoring, organizations can future-proof their applications, positioning themselves to adopt innovative technologies and expand seamlessly. The enhanced scalability of distributed databases allows for handling massive workloads and geo-distributed operations effectively.

Additionally, refactoring facilitates the integration of new features, paving the way for ongoing improvements and ensuring long-term adaptability in an evolving technological landscape.

Refactoring comes with notable challenges that organizations must carefully consider: Its high complexity demands significant time, resources, and expertise, making it one of the most resource-intensive migration strategies.

Additionally, there is a greater risk of operational disruptions during the transition, which requires meticulous planning and execution to mitigate. The initial investment can also be substantial, both financially and in terms of human capital, making it critical for organizations to assess their readiness and long-term goals before undertaking this approach.

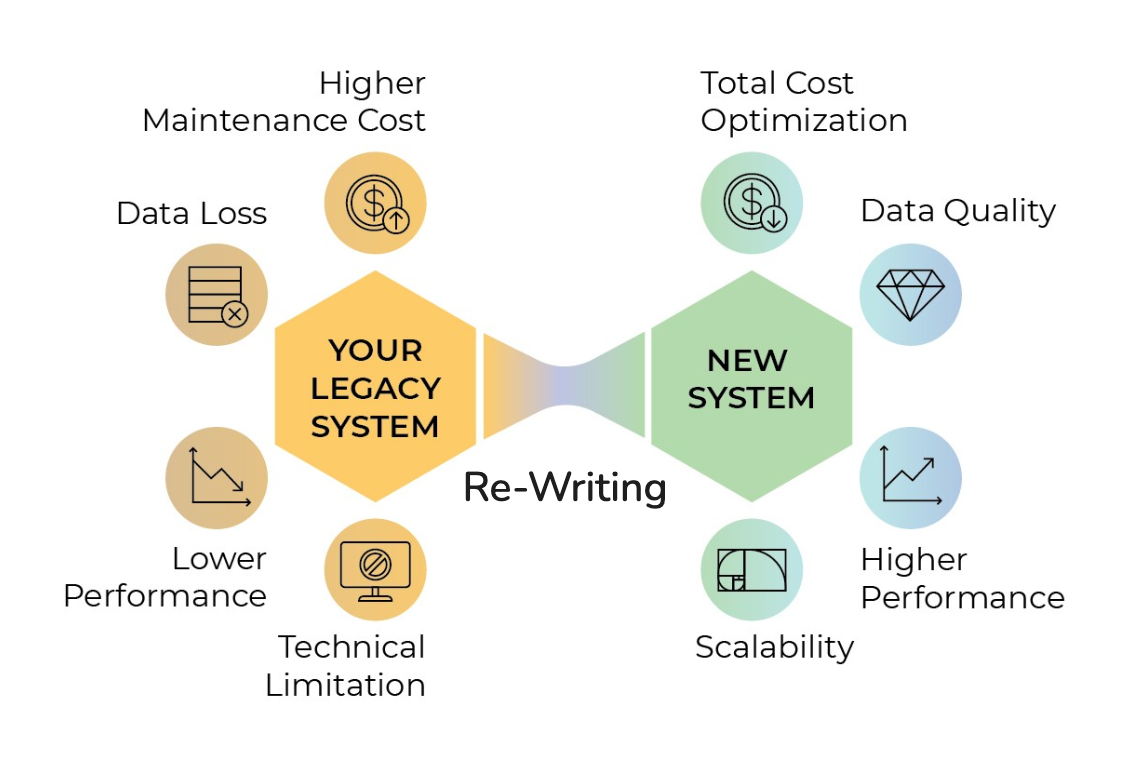

Rewriting migration

What is rewriting? Using this approach, a legacy application is transformed into a modernized version by rewriting, and/or re-architecting its components from the ground up to fully leverage the capabilities of a modern platform.

This process involves redeveloping the application entirely, enabling it to be deployed in a distributed environment and take advantage of all cloud-native features. Rewriting entails reconstructing the application using the latest technology stack while refactoring its framework for improved performance and scalability.

Certain applications and their dependencies are tied to frameworks that are incompatible with distributed environments. Additionally, some legacy applications rely on resource-intensive processes, leading to increased costs and higher hardware billing due to the large volume of data processing. In such cases, replatforming or refactoring may not be viable options for mainframe migration. Instead, rebuilding the application from scratch is often the best approach to optimize resource utilization and achieve cost efficiency.

Rewriting a mainframe application offers several key benefits, especially in the context of mainframe migration.

It allows the integration of new features and significantly improves the application's performance by optimizing it for modern distributed environments. This approach ensures the application fully leverages the advantages of the ecosystem of a distributed database such as CockroachDB, including scalability, reliability, resilience and geo-distribution.

By building a service-oriented architecture from scratch, rewriting enables modular and efficient design, enhancing maintainability and adaptability. Additionally, it incorporates the latest cloud technologies, ensuring the application remains cutting-edge and aligned with industry best practices.

Ultimately, rewriting your legacy system is a perfect opportunity to revise the business process and its efficiency. As we discussed in an earlier article, “The Imperative for Change”, rewriting the legacy system first entails a detailed business process analysis and definition of tasks outside the software system. In this way, you can detect some inconsistencies and make needed improvements.

However, rewriting comes with certain disadvantages that organizations must consider. It is a time-consuming and costly process, requiring significant investment in both resources and expertise. Rebuilding a legacy system also demands a deep understanding of the existing workflows, which can be particularly challenging if the application was developed many years ago using outdated frameworks.

The complexity increases when the rewritten application depends on other legacy systems, as these interdependencies must be thoroughly analyzed and addressed. Additionally, teams involved in the rewrite often must upgrade their skills to work with the latest technologies, adding to the overall effort and learning curve.

Since the process is highly complex, rewriting comes with increased risks of data loss, coding errors, cost overruns, and extensive project delays. For example, a single bug in the rewritten system can have severe consequences, potentially disrupting critical business operations. The most well-known rewriting failure is the old payroll system for Queensland Health in Australia by IBM: The project, originally estimated at $6 million AUD, failed when it was rolled out, leading to absolute chaos in payments. In the end, the Queensland government spent $1.2 billion AUD to fix the situation!

Additionally, rewriting requires deep domain knowledge of the legacy system, which can be challenging if the original developers are no longer available or if documentation is incomplete. There is also the risk that the new system may not fully replicate the functionality of the old one due to gaps in the planning or design stages, potentially forcing companies to operate both systems in parallel, which is inefficient and costly.

Furthermore, the high investment of time, money, and resources makes rewriting a risky endeavor, particularly for businesses without a clear roadmap for implementation and testing. According to Standish Group, more than 70% of legacy application rewrites are not successful. The 2020 Mainframe Modernization Business Barometer report found that 74% of organizations have started a legacy system modernization project, but failed to complete it.

“Rewriting a business application is as arduous as the old process of republishing a manuscript, if not more so.” TMAXSOFT

Choosing the right approach

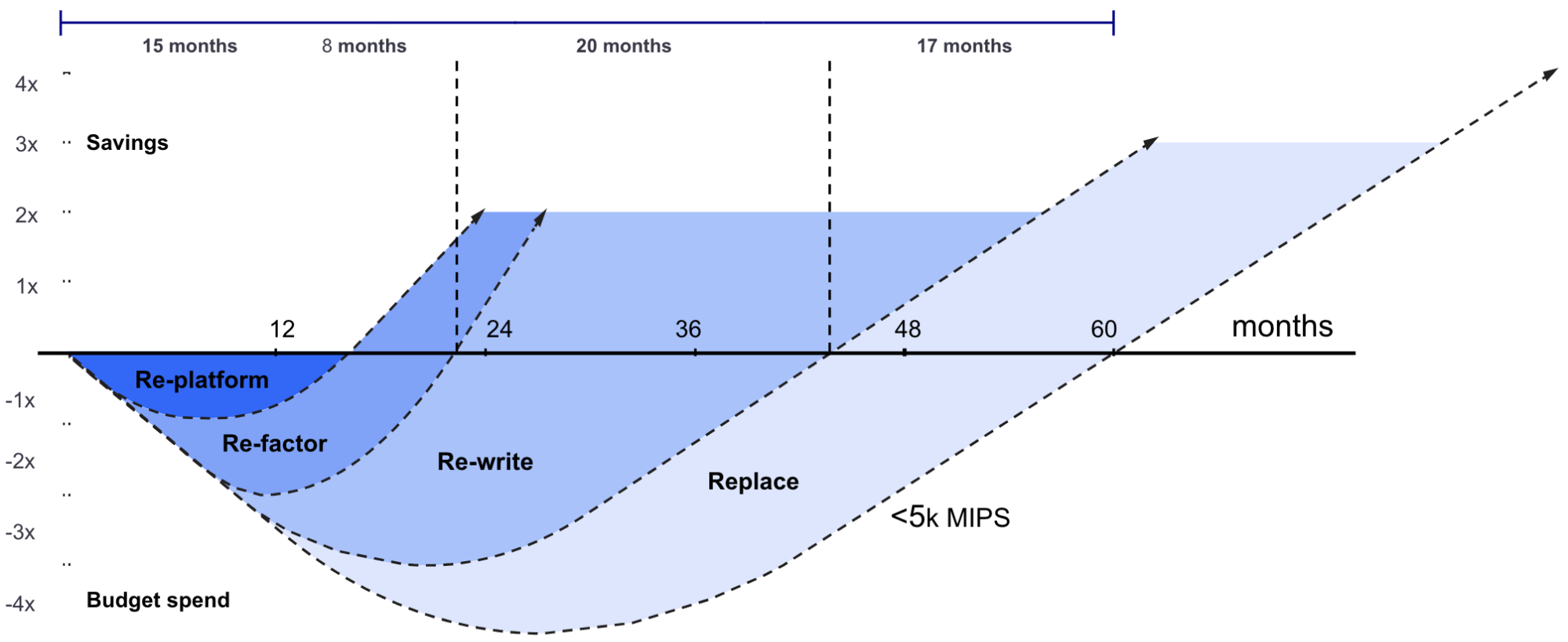

Let’s look at it another way – the visual representation below represents the cost and savings dynamics associated with different mainframe database migration approaches over time.

The vertical axis represents the financial impact: Negative values indicate budget spending, while positive values represent realized savings. The horizontal axis measures time in months, showing the long-term nature of migration projects, which typically span multiple years.

The graph illustrates how different migration approaches impact budget spending and savings over specific durations:

Replatforming (15 months): The least expensive migration option in the short term after re-hosting, it involves minimal changes to the existing architecture, making it a cost-effective choice for organizations. Replatforming allows for a quick transition from upfront budget spending to significant cost savings, often showing a sharp upward trajectory in financial benefits within 15 months. This approach is particularly well-suited for organizations with limited budgets, or those prioritizing a fast migration process with minimal disruption to ongoing operations.

Refactoring (8 months post-replatforming): The refactoring migration approach involves targeted optimizations that go beyond replatforming, requiring additional investment. While savings take longer to materialize, typically starting after the 24-month mark, this gradual return on investment makes it a suitable option for organizations aiming to improve system performance without undertaking a full architectural redesign.

Re-writing (20 months post-replatforming): Rebuilding represents a comprehensive architectural overhaul that involves significant upfront costs but delivers substantial long-term benefits. Savings typically emerge after 36 months, reflecting the advantages of a fully modernized system. This approach is ideal for organizations seeking to leverage distributed database capabilities and achieve a highly scalable, future-proof architecture.

Replacing (48 months and up): Replacing is the most costly and time-intensive migration approach, involving the complete replacement of legacy systems. While significant savings typically appear after 60 months, this highlights its long-term value for businesses prepared to adopt entirely new systems. It is best suited for organizations with outdated mainframe systems that are unable to meet modern requirements.

Each migration approach offers progressively higher savings as the extent of modernization increases. By the 60-month mark, strategies such as rewriting and replacing legacy systems yield the highest returns, though they require significant upfront investment to achieve these long-term benefits.

Adopting distributed databases while modernizing mainframe systems, varies in complexity and benefits across migration strategies:

Replatforming serves as an entry point, enabling quick wins with minimal changes but limiting the full advantages of distributed architectures, such as elasticity and resilience.

Refactoring optimizes systems to better utilize distributed features like partitioning and horizontal scalability.

Rewriting ensures full compatibility with distributed databases, designing systems for high performance, fault tolerance, and global distribution.

Understanding the tradeoffs between lift-and-shift, re-platforming, and re-architecting is critical for successful mainframe database migration. While lift-and-shift provides a quick fix, replatforming and rearchitecting offer deeper modernization benefits that align better with the capabilities of distributed databases. Organizations must carefully assess their unique requirements and readiness to adopt distributed systems, ensuring a migration strategy that delivers both immediate results and long-term value.

Selecting the right migration strategy depends on various factors, including the organization’s goals, technical readiness, and budget:

Rehosting: Ideal for organizations seeking a fast, low-risk migration to reduce dependency on mainframes while deferring full modernization

Replatforming: Suitable for businesses aiming to strike a balance between modernization and complexity, particularly when leveraging distributed databases for specific workloads

Refactoring: Best for long-term transformation efforts, where scalability, resilience, and integration with advanced technologies are priorities

Rewriting: Particularly well-suited for organizations requiring significant performance improvements, scalability, and leveraging cloud-native capabilities while revising business processes, addressing security and compliance issues

When deciding how to modernize your legacy system, it's crucial to understand the crossroads with all possible paths, their specific use cases, and their respective advantages. Should you rewrite your system? Should you consider refactoring your system? Or should you simply change the way your application is hosted or deployed?

However, simply knowing the principles of software modernization is not sufficient; without prior experience in the field, making the right decision can be exceptionally challenging. Don’t hesitate to reach out to our solution architects at Cockroach Labs to help move you in the right direction. We can conduct in-depth analyses of the project’s technical base, performance, and business processes to help you analyze the software’s current state, file a detailed report, make a list of needed improvements and prioritize the modernization approach. Every case is unique and requires an individual strategy.

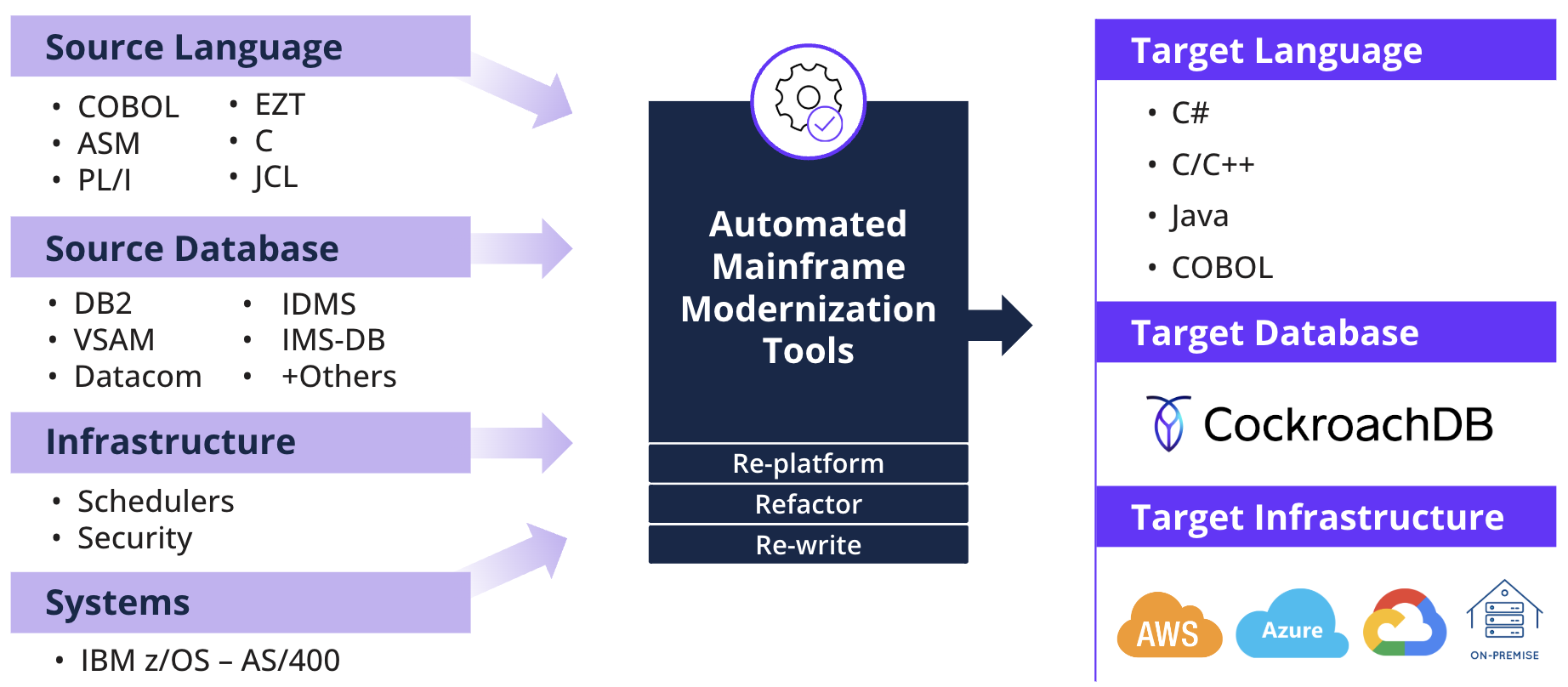

Tooling and technologies: Facilitating seamless migration to distributed architectures

Transitioning to a distributed architecture involves complex processes that require the right tools, frameworks, and methodologies to ensure success. Leveraging the appropriate technology stack not only simplifies migration but also minimizes risks, optimizes performance, and ensures that the new system meets modern scalability and reliability requirements. Below is an overview of key tools and technologies that play a pivotal role in facilitating the migration process.

Mainframe modernization suites

Automation is at the heart of modern migration strategies. Tools designed for migration not only analyze the current state of legacy systems but also facilitate the conversion of outdated codebases, data structures, and processes into scalable, distributed formats. These tools eliminate the need for extensive manual intervention, ensuring accuracy and speed.

For instance, tools like mLogica’s LIBER*M Mainframe Modernization Suite is an end-to-end platform that automates critical phases of the migration process. The suite includes:

LIBER*DAHLIA: This tool performs detailed assessments of legacy systems to identify dependencies, complexities, and components that require migration.

LIBER*TULIP: This component automates the generation of target data definition language (DDL), enabling smooth database migrations.

Bridge Program Generators: These tools create temporary interfaces between legacy systems and new platforms, ensuring uninterrupted operations during migration.

mLogica’s solutions emphasize minimal downtime, which is crucial for mission-critical systems. By leveraging these tools, organizations can transform complex on-premises mainframes into agile, distributed cloud architectures.

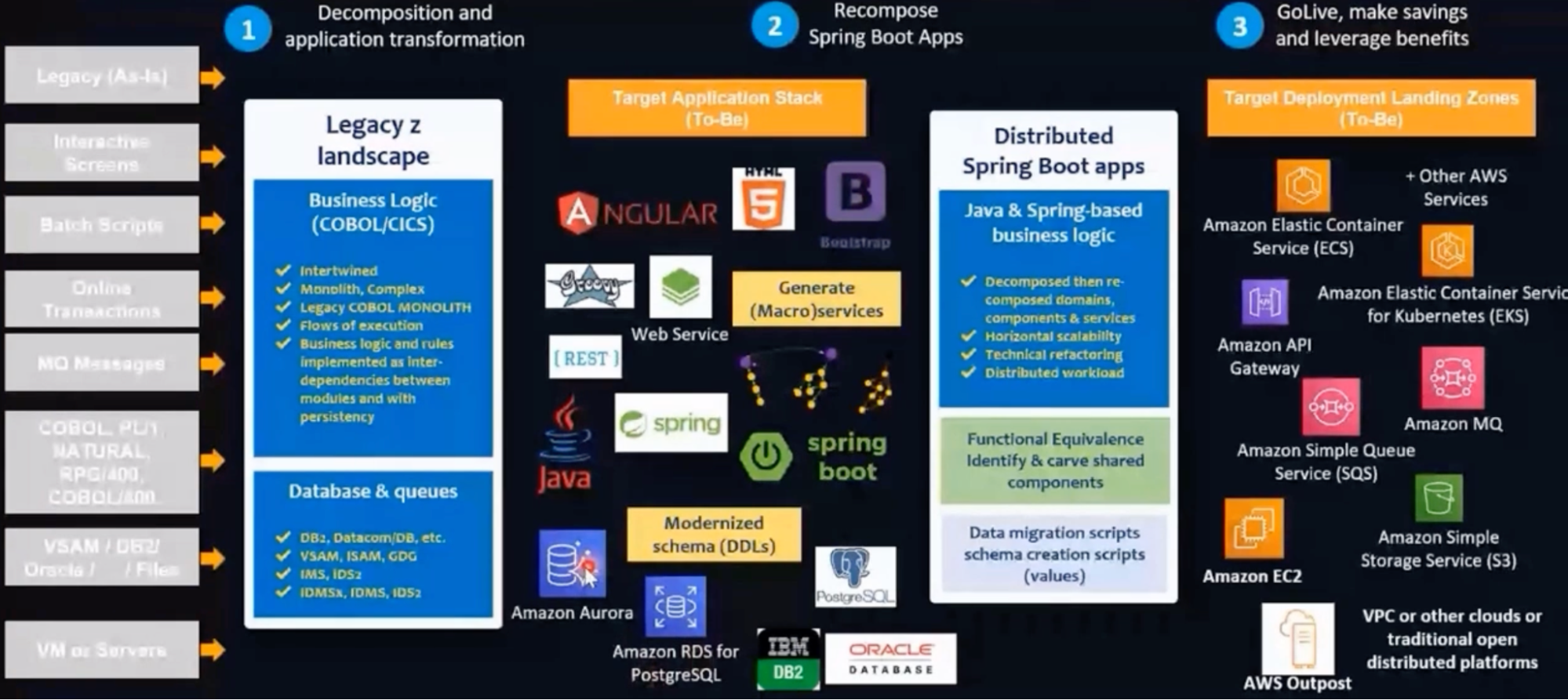

Other tools like BluAge - now integrated as part of the AWS Mainframe Migration Service - focus on automating the reengineering of legacy systems. Their technology suite is designed to convert legacy code and data into modern languages and frameworks. Features include:

Source Code Analysis: BluAge identifies dependencies, redundancies, and opportunities for optimization within the existing codebase.

Automated Conversion: Legacy code is converted to modern languages, such as Java or C#, ensuring compatibility with distributed architectures.

Data Modernization: Tools transform legacy data into formats optimized for distributed databases, enabling features like horizontal scaling and partitioning.

BluAge’s approach has been implemented in over 100 large-scale projects, demonstrating its reliability and efficiency in reducing risks and project timelines.

Mainframe modernization suites are equipped with features designed to streamline and enhance the transition process. They provide comprehensive assessments to analyze the architecture, dependencies, and data flow of legacy systems, enabling the creation of detailed migration plans. Automated code and data conversion tools rewrite applications and data models into formats compatible with distributed systems.

Many tools include interim bridging solutions that allow legacy and modern architectures to coexist during the migration phase. Additional capabilities like scalability testing and seamless integration with cloud platforms further enhance the value of these tools in enabling smooth migrations.

Leveraging advanced migration tools provides numerous benefits to organizations. Automation accelerates the migration process, reducing time to market and allowing businesses to quickly realize the advantages of modernized systems. By minimizing manual intervention, these tools significantly lower the risk of errors and disruptions. Cost efficiency is another key benefit, as automation reduces the labor and time required for migration. Furthermore, the re-engineered systems are optimized for distributed environments, offering enhanced performance, scalability, and resilience to meet future demands.

While migration tools have many advantages, they also come with challenges that organizations must address:

Older legacy systems with limited documentation can complicate the migration process.

Ensuring organizational readiness is also critical, as successful adoption of distributed architectures requires skilled teams and a willingness to embrace change.

Additionally, while these tools ultimately lead to significant savings, the upfront costs for tooling, training, and infrastructure can be substantial, necessitating careful planning and resource allocation.

Data migration tools

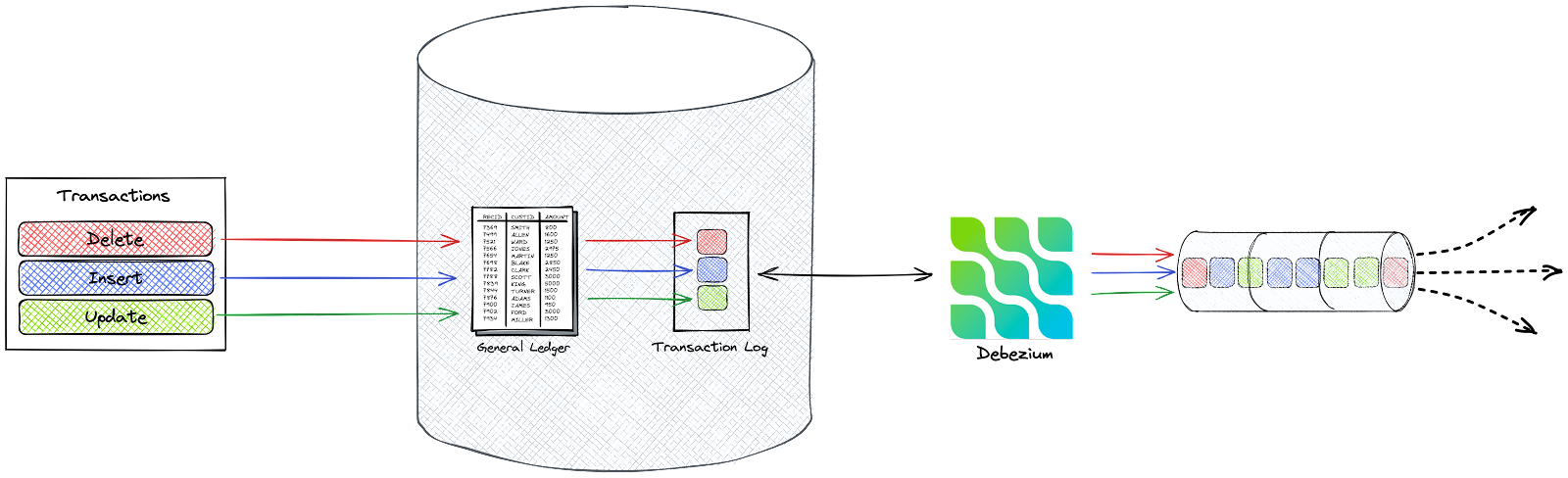

Other cloud-native tools can also be used for different tasks in the modernization journey. For example, you can use data migration tools available in the different cloud platforms to transfer data seamlessly from legacy systems to distributed databases while ensuring integrity, consistency, and minimal downtime. Tools like AWS Database Migration Service (DMS) facilitate migrations from various databases to cloud-based distributed systems with real-time replication using Change Data Capture (CDC).

What is Change Data Capture? CDC is a technique used to identify and track changes in a database in real-time or near-real-time. It captures insert, update, and delete events as they occur, recording these changes in a separate system or pipeline. This allows other applications to process the data without impacting the source database. CDC is essential for tasks like database migration because it enables timely data synchronization across systems, supports incremental data movement, and helps maintain data consistency in distributed environments.

Other cloud-agnostic tools like Qlik Replicate or Debezium offer fast bulk data migration and change data capture (CDC) capabilities, making it suitable for distributed architectures. They stream database changes to platforms like Kafka, enabling real-time updates. Key features of these tools include real-time data synchronization, support for diverse database types, and options for both incremental and full data migrations, ensuring a smooth transition to modern systems.

Schema Conversion and Compatibility Tools

Schema conversion and compatibility tools are critical for migrating databases from legacy systems to modern distributed architectures. These tools simplify the process of adapting database schemas to align with the requirements of target platforms. By automating schema analysis, conversion, and validation, they reduce manual effort, minimize errors, and ensure a seamless transition.

A key feature of these tools is automated schema analysis and conversion, which evaluates existing database schemas, identifies structural differences and incompatibilities, and converts them into formats suitable for the target system. Additionally, they perform compatibility checks to validate the converted schema against the requirements of distributed environments. Many tools also provide rollback and versioning support, enabling efficient management of schema changes throughout the migration process.

Several popular schema conversion tools are widely used in the industry. AWS Schema Conversion Tool (AWS SCT) automates schema conversion for migrating databases to AWS cloud services like Amazon RDS or Aurora. It identifies potential incompatibilities and provides recommendations for seamless integration.

Liquibase, an open-source tool, allows teams to track, version, and deploy schema updates while offering rollback functionality to fix errors during the migration. Flyway, a lightweight framework, supports version-controlled schema migrations and integrates well with CI/CD pipelines, making it an excellent choice for modern workflows. Cockroach Labs’ MOLT (Migrate Off Legacy Technology) SCT specializes in automating the migration of schemas and queries to distributed architectures, validating the converted schemas to ensure optimal functionality.

The benefits of using schema conversion tools are significant. They reduce the complexity of adapting schemas, even for highly intricate databases, while accelerating the migration process by automating repetitive tasks. This saves time and allows teams to focus on other critical activities. Automated validation improves accuracy, ensuring schemas are compatible with distributed systems and optimized for performance and scalability.

Orchestration and deployment tools

Orchestration and deployment tools are essential for managing the complexity of distributed systems. They ensure that applications are efficiently deployed, monitored, and orchestrated across various environments, from development to production.

These powerful tools streamline operations, enabling scalable, fault-tolerant, and consistent infrastructure for distributed architectures. By automating deployment processes, they minimize manual effort, reduce errors, and improve the reliability of distributed systems, especially when migrating from legacy systems.

Orchestration and deployment tools like Kubernetes, Terraform, and Helm are vital for managing the complexity of distributed systems. They enable scalable, fault-tolerant, and consistent deployments across diverse environments, reducing operational overhead and improving reliability. As distributed architectures continue to grow in prominence, leveraging these tools has become a necessity for organizations aiming to maintain competitive, modern infrastructures.

Monitoring and observability tools

Monitoring and observability tools are essential for maintaining the health, performance, and seamless operation of distributed systems post-migration. These solutions provide deep visibility into system behavior, helping teams identify and resolve issues quickly. By collecting and analyzing metrics, logs, and traces, they enable proactive management and optimization of distributed architectures.

By leveraging tools like Prometheus, Grafana, and Datadog, organizations can effectively manage the complexities of distributed architectures and ensure their systems deliver consistent value. These tools are designed for collecting and analyzing metrics, offering robust querying capabilities that make it easier to detect and diagnose system anomalies. They also provide dynamic dashboards that allow teams to monitor system performance and identify bottlenecks visually.

Methodologies and frameworks

Selecting the right methodology or framework for migrating legacy databases depends on a variety of factors, including:

system complexity

data volume

organizational priorities such as downtime tolerance and modernization goals

By leveraging advanced tools and frameworks tailored to specific use cases, organizations can ensure a smooth transition to modern database environments while minimizing risks and maximizing efficiency. Below are some commonly used approaches, their benefits, and implementation strategies.

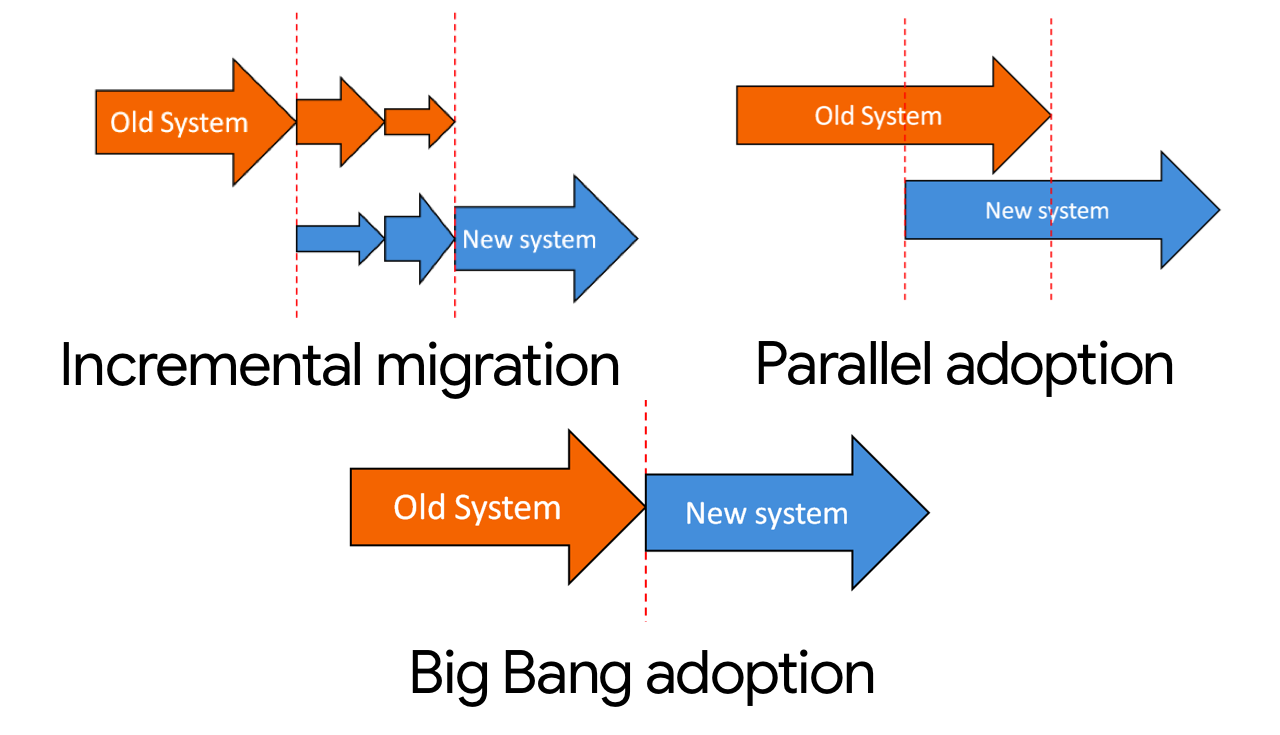

1. Big Bang migration

This method involves migrating all data from the source to the target system in a single event. It is a time-boxed approach suitable for smaller datasets or less complex systems where downtime is acceptable. The advantage lies in its shorter project duration and reduced synchronization complexity. However, it requires meticulous planning and testing to minimize risks such as data integrity issues and significant downtime during the migration process.

The lift-and-shift approach is a technical implementation for the Big Bang migration approach. It involves moving the database to a new environment with minimal changes to the existing architecture or application code. This strategy is often used for quick transitions from on-premises systems to cloud environments. While it is cost-effective and fast, it may face compatibility challenges, performance differences, and the need for updated security settings. Tools like Cockroach Labs’ MOLT Fetch and MOLT Verify help streamline this process, ensuring data consistency and reducing downtime.

Backup and Restore is also a Big Bang approach that involves creating a backup of the database in the source environment, transferring it to the target system, and restoring it there. It is ideal for homogeneous migrations (same DBMS), disaster recovery, or test environment setups. Backup and restore methods are straightforward and provide better control over the migration process. However, they can involve significant downtime and potential data staleness, particularly in high-transaction environments. Compatibility between database versions is another critical consideration for this method.

Finally, the Import and Export techniques are commonly used for cross-platform migrations where data needs to move between different DBMSs or environments with differing formats. This approach allows granular control, enabling partial data migrations or data transformations during the process. It supports a wide range of formats such as CSV, JSON, or SQL dump files, making it highly flexible. However, Import and Export can be time-intensive for large datasets and requires careful handling of relational dependencies and potential data loss risks.

2. Online migration (aka. parallel migration)

Online migration is a phased approach to data migration that involves running both the source and target systems in parallel while incrementally transferring data over time. This method often starts with an initial data load, followed by continuous updates until the new system fully replaces the old one.

Online migration offers several advantages, including the ability to thoroughly test and validate the new system before a full cutover, balancing rapid migration with the flexibility to handle incremental updates. It minimizes risk by maintaining the old system until the new one is stable and operational.

However, this approach can be resource-intensive and costly, as running parallel systems requires careful synchronization to ensure data consistency. Online migration is particularly suitable for scenarios involving large datasets or mission-critical applications where downtime must be minimal or avoided altogether.

The Blue-Green migration strategy is an online migration approach that minimizes downtime and risk by maintaining two identical environments — one that is active (blue) and one that’s idle (green). Data is migrated and tested in the idle environment before switching traffic to it. This approach ensures uninterrupted service and provides an easy rollback mechanism in case of issues. The cost of maintaining duplicate environments and the effort involved in synchronization are significant challenges with this method.

There is also the Red-Black migration which is a derivation for the Blue-Green migration strategy. In this approach, two environments are maintained: the "Red" (active) environment and the "Black" (standby) environment. The target database is deployed to the Black environment while the Red environment remains live. Once the Black environment is tested and verified, traffic is switched from the Red environment to the Black environment. The only difference between this strategy and the Blue-Green, is that you don’t need to maintain both databases serving users at the same time.

Ultimately, CDC is an online migration method that captures changes in the source database and synchronizes them with the target system. Tools like Debezium, Oracle GoldenGate, and Striim facilitate this process, ensuring data consistency across environments.

3. Incremental migration

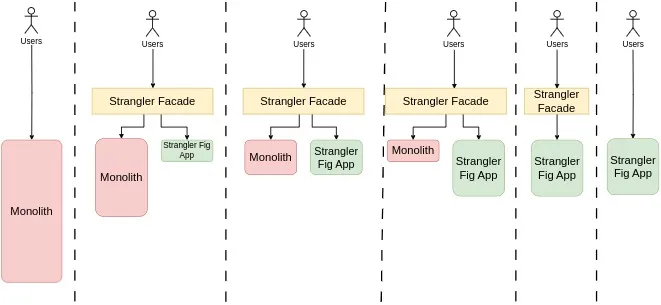

The Strangler Fig approach, also known as the phased rollout, migrates a portion of your users, workloads, or tables over time. Until all users, workloads, and/or tables are migrated, the application will continue to write to both databases.

This approach is inspired by the way a strangler fig plant slowly overtakes a tree, replacing it over time. In this context, it refers to the incremental migration of database components, allowing for the old database system to continue running alongside the new one until the transition is complete. This approach minimizes disruption by gradually shifting data and functionality to the new system, rather than doing a full migration in one go.

The Strangler Fig approach is particularly well-suited for large or complex databases where migrating everything at once would pose significant risks or cause major disruptions. By breaking the migration into smaller, manageable parts, this method makes it easier to handle complex systems incrementally.

It's also highly effective for legacy system modernization, enabling organizations to gradually transition from outdated systems to modern platforms without the need for downtime. This approach is ideal for mission-critical systems, where maintaining continuous operation is essential, as it allows for the migration to occur in stages while keeping the existing system fully functional, thus minimizing business impact.

The Strangler Fig approach offers several key advantages, making it an attractive option for database migration. One of the biggest benefits is minimal downtime, as the old system continues to operate throughout the migration process, reducing the disruption typically associated with moving to a new database.

It also mitigates risk by allowing for incremental migrations, which means potential issues can be identified and addressed in smaller, manageable sections before they impact the entire system. This method also provides flexibility, giving organizations the ability to spread migration efforts over time, which reduces pressure and allows for careful planning.

A transformative journey

Modernizing from mainframe to a distributed architecture is a journey fraught with challenges – but brimming with opportunities for transformation!

By following best practices – such as strategic planning, phased migration, robust monitoring, and team enablement – and learning from industry experiences, organizations can ensure a seamless transition. Ultimately, selecting the appropriate tools and techniques based on your organization's specific needs is critical to the success of the modernization effort.

Ready to embark on your data modernization journey? Learn how the right cloud-native distributed database will help: Visit here to speak with an expert.

Amine El Kouhen, Ph.D., is Sr. Partner Solutions Architect at Cockroach Labs.